Diskover Configuration and Administration Guide

For version 2.2.x or previous

This guide is intended for Service Professionals and System Administrators.

Introduction

Overview

Diskover Data is a web-based platform that provides single-pane viewing of distributed digital assets. It provides point-in-time snapshot indexes of data fragmented across cloud and on-premise storage spread across an entire organization. Users can quickly and easily search across company files. Diskover is a data management application for your digital filing cabinet, providing powerful granular search capabilities, analytics, file-based workflow automation, and ultimately enables companies to scale their business and be more efficient at reducing their operating costs.

For more information, please visit diskoverdata.com

Diskover Use Cases

Diskover addresses unstructured data stored across various storage repositories. Data curation encompasses the manual and automated processes needed for principled and controlled data creation, maintenance, cleanup, and management, together with the capacity to add value to data.

System Administrators

The use case for System Administrators is often centered around data cleanup, data disposition, ensuring data redundancy, and automating data. System Administrators are often tasked with controlling costs associated with unstructured data.

Line of Business Users

The use cases for Line of Business users are often centered around adding value to data, finding relevant data, correlating, analyzing, taking action on data sets, and adding business context to data.

Approved AWS Technology Partner

Diskover Data is an official AWS Technology Partner. Please note that AWS has renamed Amazon Elasticsearch Service to Amazon OpenSearch Service. Most operating and configuration details for OpenSearch Service should also be applicable to Elasticsearch..

Document Conventions

Easy Code Snippets Copy/Paste

Throughout this document, all the lines displayed in a different font are designed for users to copy to a clipboard using the copy icon on the far right and paste directly into a terminal session:

code snippet - use icon on the far right to copy me

Proposed Actions

🔴 All proposed action items are preceded by a red dot.

Important Notes and Warnings

🟨 All important notes and warnings are preceded by a yellow square.

Restricted Diskover Editions

The appropriate label(s) will be displayed for features and/or sections of the guide that apply to specific Diskover editions.

User Roles and Authentication

This chapter discusses setting up authenticated user access to Diskover-Web.

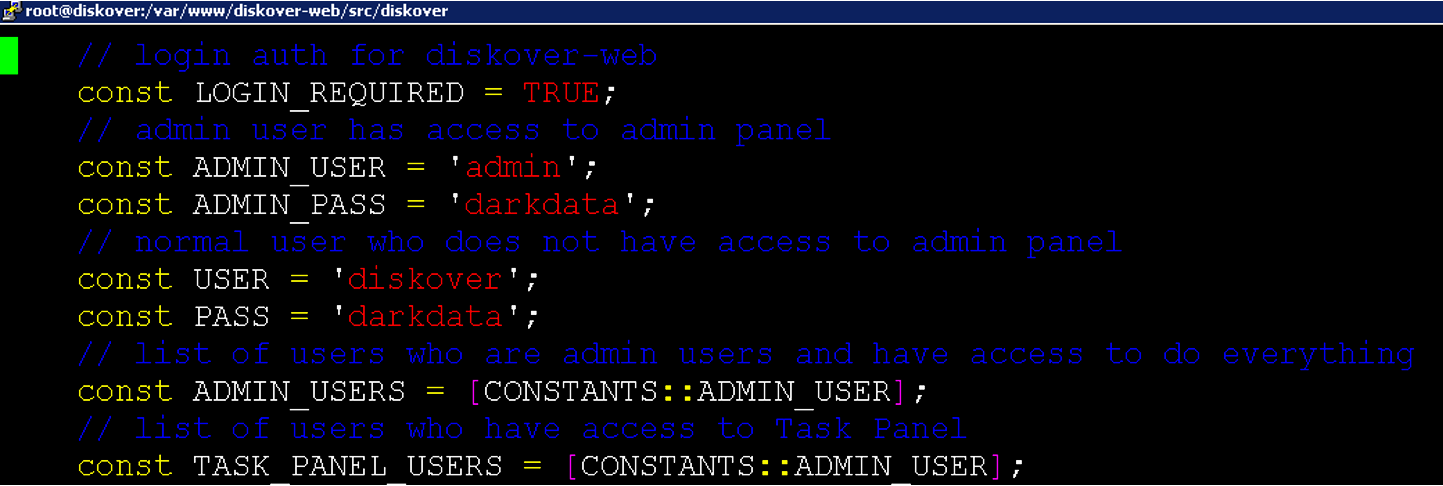

Local User Configuration

Diskover-Web currently has two local users: 1) admin, and 2) data user. To change the login details for the two sets of users:

vim /var/www/diskover-web/src/diskover/Constants.php

Note: The passwords stored in the web config file (Constants.php) are only used as the default initial passwords when first logging in to Diskover-Web. On first login, you will be asked to change the password, and the password will be stored and encrypted in

sqlite db, and the default password in web config will no longer be used.

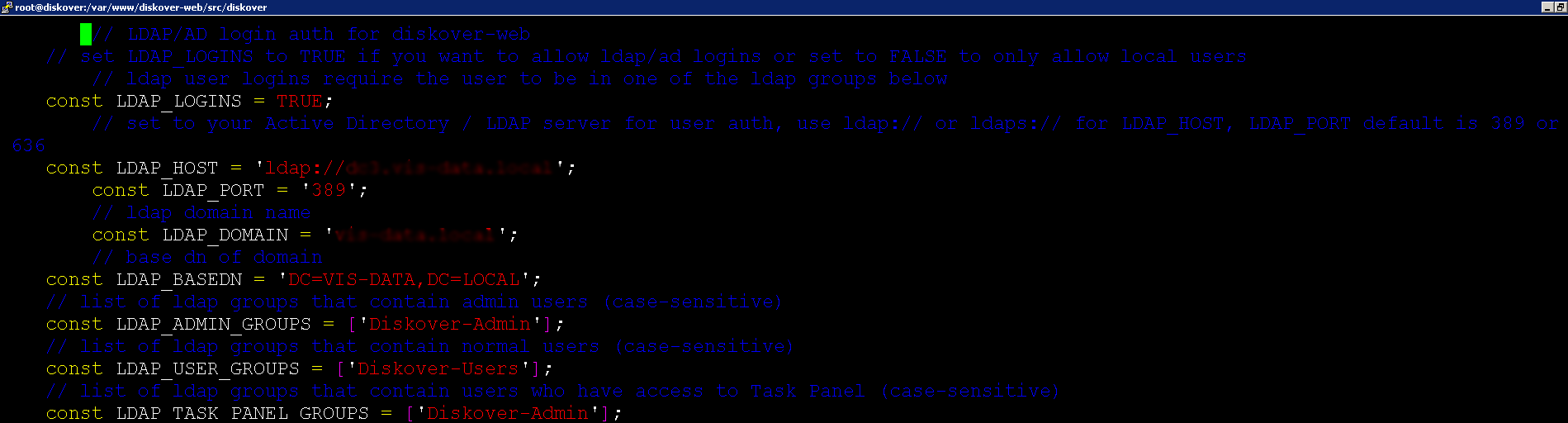

LDAP/Active Directory Authentication

Diskover-Web supports authenticating users from Active Directory over Lightweight Directory Access Protocol (LDAP). LDAP integration can be used to authenticate users against a Microsoft Domain Controller (DC).

🔴 To configure AD / LDAP login authentication:

vim /var/www/diskover-web/src/diskover/Constants.php

🔴 The following information is required to configure LDAP authentication:

| FIELD | DESCRIPTION |

|---|---|

| LDAP_LOGINS | Set to TRUE to enable and use ldap logins |

| LDAP_HOST | The full LDAP URI, ex: ldap://dc.domain.com:389 or ldaps://dc.domain.com:636 for SSL encryption Note: You can also provide multiple LDAP-URIs separated by a space as one string. |

| LDAP_PORT | Ex: 389 or 636 |

| LDAP_DOMAIN | The LDAP domain name, ex: domain.com |

| LDAP_BASEDN | The LDAP base dn of domain, ex: dc=DOMAIN,dc=COM |

🔴 At least three AD groups should be established for Diskover and set in web config. Note that at login, the ad/ldap user will be checked if they are in one of the ad/ldap groups below. If they are not in any of these groups, they will be denied access to log in.

| GROUP | DESCRIPTION |

|---|---|

| LDAP_ADMIN_GROUPS | To add admin group |

| LDAP_USER_GROUPS | To add user group |

| LDAP_TASK_PANEL_GROUPS | To add task panel group |

Okta Authentication

Diskover-Web supports authenticating/authorizing users using Okta Identity.

Note: This section does not cover adding an application to the Okta admin page. You will need to first add an Oauth application (Web app) to your Okta admin page for Diskover-Web

🔴 To configure Okta logins:

vim /var/www/diskover-web/src/diskover/Constants.php

🔴 The following information is required to configure Okta authentication/authorization:

| FIELD | DESCRIPTION |

|---|---|

| OAUTH2_LOGINS | Set to TRUE to enable and use Okta Oauth2 login Note: When using Oauth2 login, local and ldap login is not used |

| OAUTH2_CLIENT_ID | Your Okta Oauth2 application client id |

| OAUTH2_CLIENT_SECRET | Your Okta Oauth2 application client secret |

| OAUTH2_REDIRECT_URI | Your Okta Oauth2 login redirect URI, ex: https://diskover.domain.com/login.php?callbackNote: login.php page handles the redirect URI when using callback parameter |

| OAUTH2_LOGOUT_REDIRECT_URI | Your Okta Oauth2 post logout redirect URI, ex: https://diskover.domain.com/ |

| OAUTH2_AUTH_ENDPOINT | Your Okta Oauth2 API Authorization Server Issuer URI authorization endpoint, ex: https://diskover.domain.com/oauth2/default/v1/authorize |

| OAUTH2_TOKEN_ENDPOINT | Your Okta Oauth2 API Authorization Server Issuer URI token endpoint, ex: https://diskover.domain.com/oauth2/default/v1/token |

| OAUTH2_LOGOUT_ENDPOINT | Your Okta Oauth2 API Authorization Server Issuer URI logout endpoint, ex: https://diskover.domain.com/oauth2/default/v1/logout |

| OAUTH2_API_TYPE | Oauth2 API Type, types are Okta or Azure (Graph API), set this to Okta |

| OAUTH2_API_URL_BASE | Your Okta Oauth2 API URL for getting user/group info, ex: https://diskover.domain.com/api/v1/ |

| OAUTH2_API_TOKEN | Your Okta Oauth2 API Token |

🔴 At least two Okta Oauth2 groups should be established for Diskover and set in web config. Note that at login, the Okta Oauth2 user will be checked if they are in one of the following Okta Oauth2 groups.

| GROUP | DESCRIPTION |

|---|---|

| OAUTH2_ADMIN_GROUPS | To add admin group |

| OAUTH2_TASK_PANEL_GROUPS | To add task panel group |

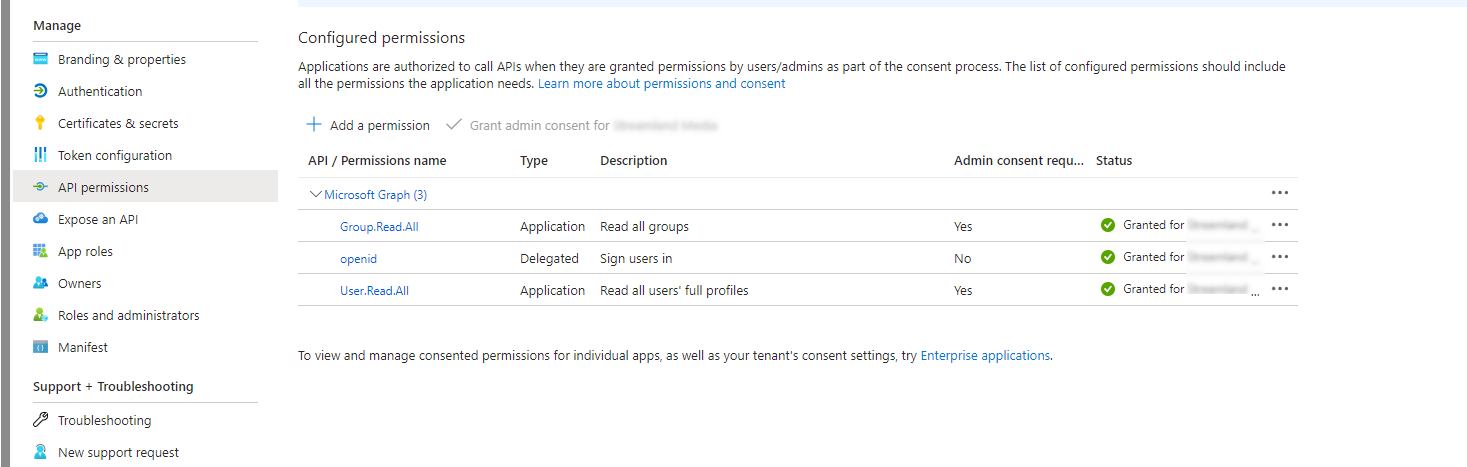

Azure AD Oauth2 OIDC SSO Authentication

Diskover-Web supports authenticating/authorizing users using Azure Active Directory OIDC SSO.

🔴 Set up an App Registration in Azure with the following API Permissions:

🔴 To configure Oauth2 logins:

vim /var/www/diskover-web/src/diskover/Constants.php

🔴 The following information is required to configure Azure Oauth2 OIDC SSO authentication/authorization:

| FIELD | DESCRIPTION |

|---|---|

| OAUTH2_LOGINS | Set to TRUE to enable and use Azure oauth2 login Note: When using Oauth2 login, local and ldap login is not used |

| OAUTH2_CLIENT_ID | Your Azure Oauth2 application client ID |

| OAUTH2_CLIENT_SECRET | Your Azure Oauth2 application client secret |

| OAUTH2_REDIRECT_URI | Your Azure Oauth2 login redirect URI, ex: https://diskover.domain.com/login.php?callbackNote: login.php page handles the redirect URI when using callback parameter |

| OAUTH2_LOGOUT_REDIRECT_URI | Your Azure Oauth2 post logout redirect URI, ex: https://diskover.domain.com/ |

| OAUTH2_AUTH_ENDPOINT | Your Azure Oauth2 API Authorization Server Issuer URI authorization endpoint, ex: https://diskover.domain.com/oauth2/default/v1/authorize |

| OAUTH2_TOKEN_ENDPOINT | Your Azure Oauth2 API Authorization Server Issuer URI token endpoint, ex: https://diskover.domain.com/oauth2/default/v1/token |

| OAUTH2_LOGOUT_ENDPOINT | Your Azure Oauth2 API Authorization Server Issuer URI logout endpoint, ex: https://diskover.domain.com/oauth2/default/v1/logout |

| OAUTH2_API_TYPE | Oauth2 API Type, types are Okta or Azure (Graph API), set this to Azure |

| OAUTH2_API_URL_BASE | Your Azure Oauth2 API URL for getting user/group info, ex: https://diskover.domain.com/api/v1/ |

🔴 At least two Azure Oauth2 groups should be established for Diskover and set in web config. Note that at login, the Azure Oauth2 user will be checked if they are in one of these above Azure Oauth2 groups.

| GROUP | DESCRIPTION |

|---|---|

| OAUTH2_ADMIN_GROUPS | To add admin group |

| OAUTH2_TASK_PANEL_GROUPS | To add task panel group |

Restricting Visibility and Access

Diskover-Web uses multiple levels to limit Elasticsearch index and directory visibility and access:

1) Index mappings can be configured and set to control what indices groups and users are allowed to see. Excluded dirs and ES search query can also be added to index mappings for more granular control.

2) AD/LDAP and Oauth2 group directory permissions.

3) Unix directory permissions.

🔴 Visibility can be limited by users/groups to specific indexes or branches within a given index. To limit index visibility by users/groups:

vim /var/www/diskover-web/src/diskover/Constants.php

// group/user index mappings

// controls what indices and paths groups/users are allowed to view

// enable index mappings, set to TRUE or FALSE

const INDEX_MAPPINGS_ENABLED = FALSE;

// index_patterns key is a list of index names user/group is allowed access to view

// index_patterns_exclude key is a list of index names user/group is not allowed to view

// index pattern wildcards * and ? are allowed, example diskover-* or diskover-indexname-*

// to not exclude any indices/dirs, use empty list [] for index_patterns_exclude, excluded_dirs, and excluded_query

// excluded_dirs use absolute paths and are recursive, example /top_path/dir_name

// excluded_query uses ES query string including regular expression syntax

// group/user names, excluded_dirs, and excluded_query are case-sensitive

// group/user name wildcards * and ? are allowed

const INDEX_MAPPINGS = [

CONSTANTS::ADMIN_USER => [

[

'index_patterns' => [

'diskover-*'

],

'index_patterns_exclude' => [],

'excluded_dirs' => [],

// allow access to projectA directory only in /mnt/stor1/projects

'excluded_query' => ['((parent_path:\/mnt\/stor1\/projects AND name:/project[^A]/) OR parent_path:/\/mnt\/stor1\/projects\/project[^A]*/)']

]

],

CONSTANTS::USER => [

['index_patterns' => ['diskover-*'], 'index_patterns_exclude' => [], 'excluded_dirs' => [], 'excluded_query' => []]

],

'diskover-admins' => [

['index_patterns' => ['diskover-*'], 'index_patterns_exclude' => [], 'excluded_dirs' => [], 'excluded_query' => []]

],

'diskover-users' => [

['index_patterns' => ['diskover-*'], 'index_patterns_exclude' => [], 'excluded_dirs' => [], 'excluded_query' => []]

],

'diskover-powerusers' => [

['index_patterns' => ['diskover-*'], 'index_patterns_exclude' => [], 'excluded_dirs' => [], 'excluded_query' => []]

]

];

🔴 Visibility can also be limited by AD/LDAP and Oauth2 group permissions. To limit index visibility by AD/LDAP or Oauth2 group membership:

vim /var/www/diskover-web/src/diskover/Constants.php

// AD/ldap group permission filtering

// controls if files/directories get fitered based on AD/ldap groups membership of the user logged in

// local users admin and diskover always see all directories in the index

// aws s3 indices are not filtered

// enable ldap filtering, set to TRUE or FALSE

const LDAP_FILTERING_ENABLED = TRUE;

// AD/ldap groups that are excluded from filtering

// if a user is a member of one of these groups, they will see all files/directories

// group names are case-sensitive

const LDAP_GROUPS_EXCLUDED = ['diskover-admins', 'diskover-powerusers'];

// Oauth2 SSO group permission filtering

// controls if files/directories get fitered based on Oauth2 SSO groups membership of the user logged in

// local users admin and diskover always see all directories in the index

// aws s3 indices are not filtered

// Diskover Pro license required

// enable Oauth2 filtering, set to TRUE or FALSE

const OAUTH2_FILTERING_ENABLED = FALSE;

// Oauth2 SSO groups that are excluded from filtering

// if a user is a member of one of these groups, they will see all files/directories

// group names are case-sensitive

const OAUTH2_GROUPS_EXCLUDED = ['diskover-admins', 'diskover-powerusers'];

// lower case group names when filtering

const GROUPNAME_FILTERING_LOWERCASE = FALSE;

🔴 To limit index visibility by Unix file permissions:

Note: To use Unix permissions filtering, you will need to enable and use the Unix Permissions plugin when indexing, for both file and directory.

vim /var/www/diskover-web/src/diskover/Constants.php

// use UNIXPERMS_FILTERING_STRING as well as group membership to determine filtering

const UNIXPERMS_FILTERING_ENABLED = TRUE;

// unix perms filtering ES search string

// could also use other fields besides unix_perms such as owner, group, etc

//const UNIXPERMS_FILTERING_STRING = 'owner:root AND group:root AND unix_perms:755'

const UNIXPERMS_FILTERING_STRING = 'unix_perms:/..[57]/';

Restricting Diskover-Web API Access

Enable HTTP Basic Authentication

You can turn on HTTP Basic Auth for the Diskover-Web API. This will make it required to use a username and password to access the API.

🔴 Enable API auth and set a username and password:

vim /var/www/diskover-web/src/diskover/Constants.php

// HTTP Basic Auth for REST API

// api authentication, set to TRUE to enable or FALSE to disable

const API_AUTH_ENABLED = TRUE;

// api username and password

const API_USER = 'diskoverapi';

const API_PASS = 'apisecret';

🔴 The API password API_PASS in web config is only used as a default password and this password needs to be changed. To login to Diskover-Web as admin, go to the Settings page and scroll to the API Password section and click change password. Set a new password and the password will be securely stored in sqlite db.

Note: When changing API Auth settings, remember to update diskoverd task worker daemon config to use the new auth settings.

Restricting API Access By LDAP/AD login

You can use LDAP/AD logins using HTTP Basic Auth for the Diskover-Web API.

🔴 Enable API LDAP auth:

vim /var/www/diskover-web/src/diskover/Constants.php

// LDAP/AD Auth for REST API

// api ldap/ad authentication, set to TRUE to enable or FALSE to disable

const API_AUTH_LDAP_ENABLED = TRUE;

Note: When enabling API LDAP auth, config index/ldap filter settings are used, see Restricting Visibility and Access.

Restricting API Access By Host/IP

🔴 To limit API access to certain hosts or networks, you can add an additional location block with allow/deny rules to your Diskover-Web NGINX config /etc/nginx/conf.d/diskover-web.conf.

vi /etc/nginx/conf.d/diskover-web.conf

The NGINX location block below needs to go above the other location block that starts with:

location ~ \.php(/|$) {

🔴 Change 1.2.3.4 to the IP address you want to allow access to the API. You can add additional lines if you want to allow more hosts/networks to access the API. The deny all line needs to come after all allow lines:

location ~ /api\.php(/|$) {

allow 1.2.3.4;

deny all;

fastcgi_split_path_info ^(.+\.php)(/.+)$;

set $path_info $fastcgi_path_info;

fastcgi_param PATH_INFO $path_info;

try_files $fastcgi_script_name =404;

fastcgi_pass unix:/var/run/php-fpm/php-fpm.sock;

#fastcgi_pass 127.0.0.1:9000;

include fastcgi_params;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi_params;

fastcgi_read_timeout 900;

fastcgi_buffers 16 16k;

fastcgi_buffer_size 32k;

}

🔴 Restart NGINX:

systemctl restart nginx

🔴 Then verify you can access API with curl or web browser on an allowed host:

curl http://<diskover-web-host>:<port>/api.php

🔴 You should see this:

{

"status": true,

"message": {

"version": "diskover REST API v2.0-b.3",

"message": "endpoint not found"

}

}

Others will now be blocked with a 403 forbidden http error page.

Path Translation

The path translation feature is designed to support heterogenous client environments like Windows, MacOS, and Linux. The path for each client to access or locate a file or directory will vary depending on client operation system. For example, Linux operating systems use the backslash slash \ and Windows operating systems use a forward slash /. Path translation provides the capability to translate paths within Diskover to appropriate the client’s operating system. The following describes two mechanisms for translating paths within Diskover.

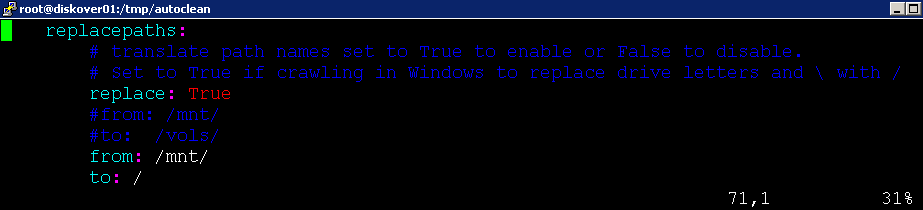

Translating Paths Stored in Elasticsearch Index

To translate paths that get stored within the Elasticsearch document, for example removing /mnt from a path like /mnt/isilon1

🔴 Open a terminal session:

vi /root/.config/diskover/config.yaml

🔴 Set replace: to True

🔴 Configure desired from: and to:

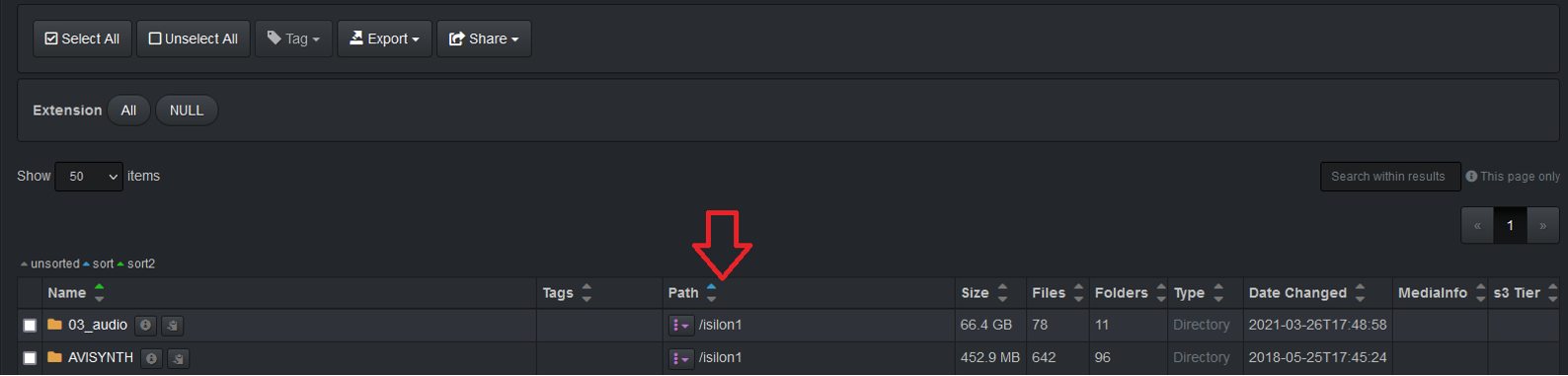

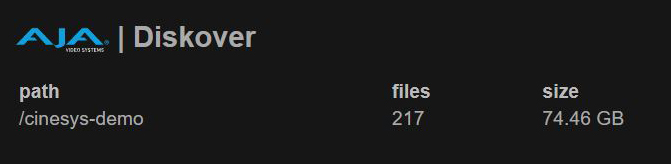

Which results in the following path displayed within the Diskover-Web user interface:

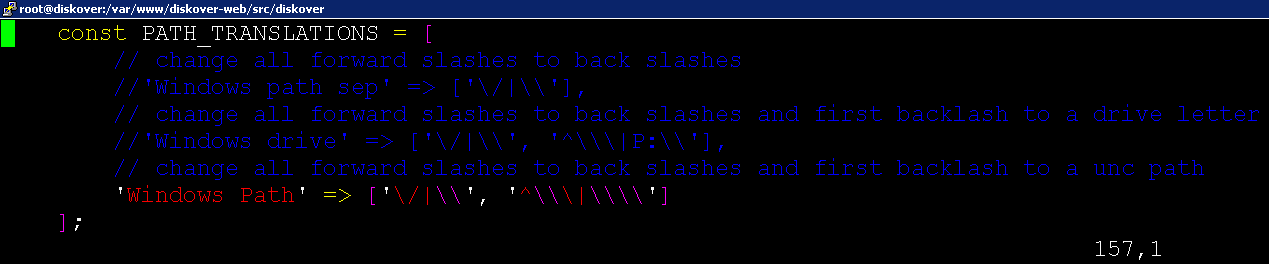

Path Translation in Diskover-Web for Copy/Paste

To set client profiles that get translated when a user copies a path within the Diskover-Web user interface.

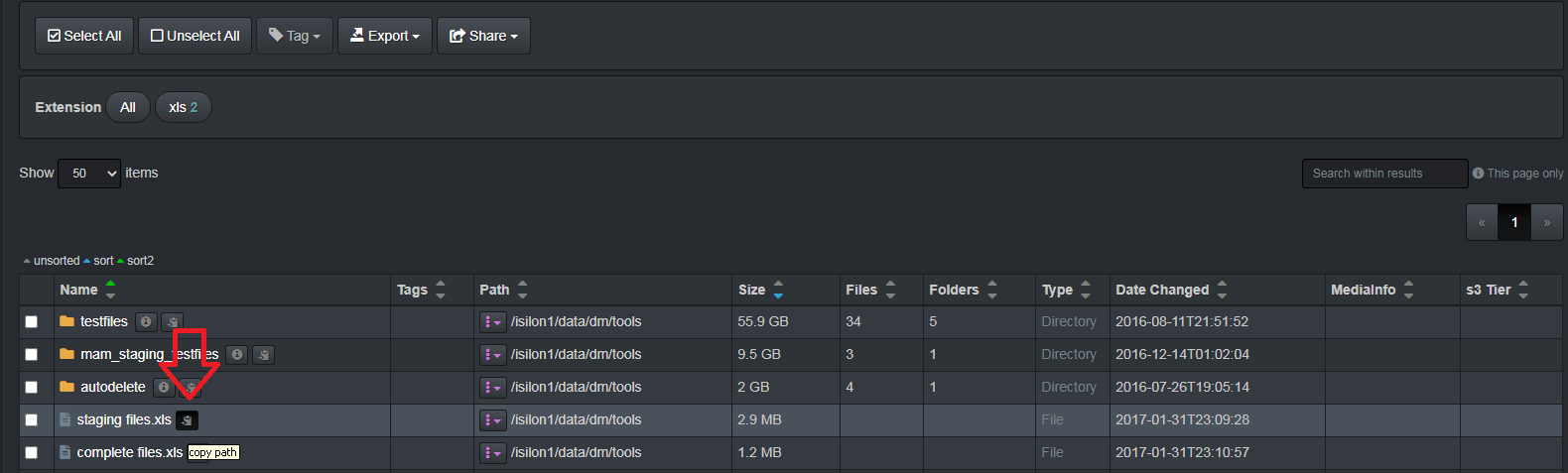

🔴 For example, to translate from /isilon1/data/dm/tools/staging files.xls to \\isilon1\data\dm\tools\staging files.xls

vi /var/www/diskover-web/src/diskover/Constants.php

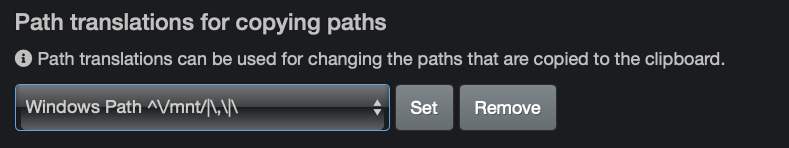

🔴 Configure client profile within the Diskover-Web user interface under the Settings page:

🔴 Copy a path within the Diskover-Web user interface for testing:

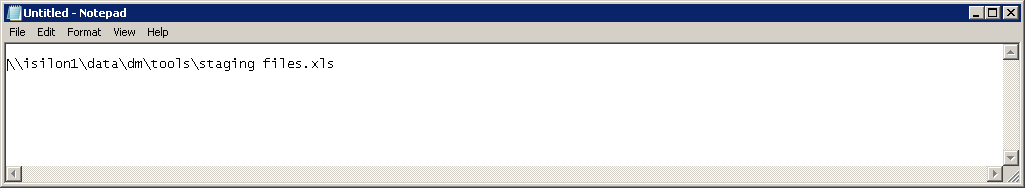

🔴 Resulting path within clipboard:

Top Paths

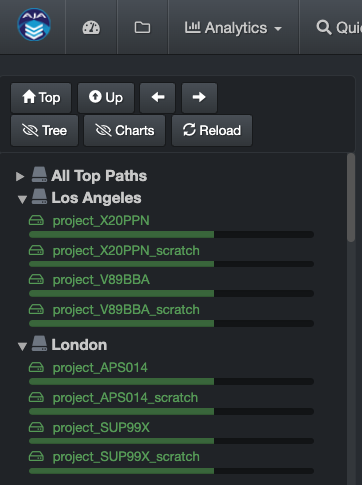

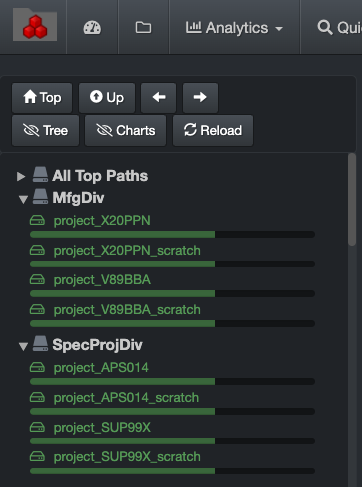

By default, users will see a list of all volumes indexed by Diskover in the left pane of the user interface. You can however create Top Paths to organize your volumes (by location, project, etc.).

Here are two examples. Note that the first collapsible option will always be All Top Paths and will list all your repositories. The collapsible choices/labels after that are customizable.

🔴 Open the TOPPATH_TREE constants.php file:

/var/www/diskover-web/src/diskover/Constants.php

🔴 Edit the file and change for your storage locations, the example below using tokyo_stor and van_stor*:

// top path collapsible tree/menu

// top path tree displayed in search file tree and top path drop down menu in nav bar

// default is have all top paths under All tree

// set to [] to use defaults

// uses php's preg_match for reg exp of top paths

// Example to set /mnt/tokyo_stor* to be nested under Tokyo and /mnt/van_stor* to be nested under Vancouver:

// const TOPPATH_TREE = [ 'Tokyo' => ['/\/mnt\/tokyo_stor*/'], 'Vancouver' => ['/\/mnt\/van_stor*/'] ];

const TOPPATH_TREE = [ 'Stor' => ['/\/mnt\/tokyo_stor*/', '/\/mnt\/van_stor*/']];

🔴 To organize and edit the top path labels:

// alternate top path labels to display in search file tree and top path drop down menu in nav bar

// default is to display the basename of top path directory

// set to [] to use defaults

// Example to set /mnt/stor1 to vol1 label and /mnt/stor2 to vol2 label:

// const TOPPATH_LABELS = ['/mnt/stor1' => 'vol1', '/mnt/stor2' => 'vol2'];

const TOPPATH_LABELS = [];

Tags

One of the powerful features of Diskover is the ability to add business context to the index of files. Business context enables:

- Increased findability and searchability based on one or more combinations of fields, for example, name and tag value.

- More informed and accurate data curation decisions.

- Ability to build an approval process (or RACI model) for data curation decisions.

- Reporting aligned to business purpose change reports from “disk language” of size, age, extension, etc. to “business language” of projects, clients, status, etc.

Methods for Tags Application

The following sections describes the various methods for tags application within Diskover. You will learn about:

- Autotagging.

- Tags application via Diskover API.

- Tags application via Harvest Plugins.

- Manual tagging.

Tag Application via Autotag

Tags can be applied automatically via a series of rules applied to directories or files. The rules can be very powerful based on a combination of file name, extension, path, age, size, etc.

- Auto-tagging rules can be found in the

diskover_autotagconfig file for tagging files and directories. - Auto-tagging can also be done during a crawl by enabling autotag in diskover config and setting rules in the diskover config file.

- All tags are stored in the

tagsfield in the index - there is no limit to the number of tags.

Autotag During Indexing

To enable tags to be applied during the index process, autotagging must be enabled and a series of rules configured to determine what tags to apply and under what conditions.

🔴 Set autotag to True and define tagging rules.

vim /root/.config/diskover/config.yaml

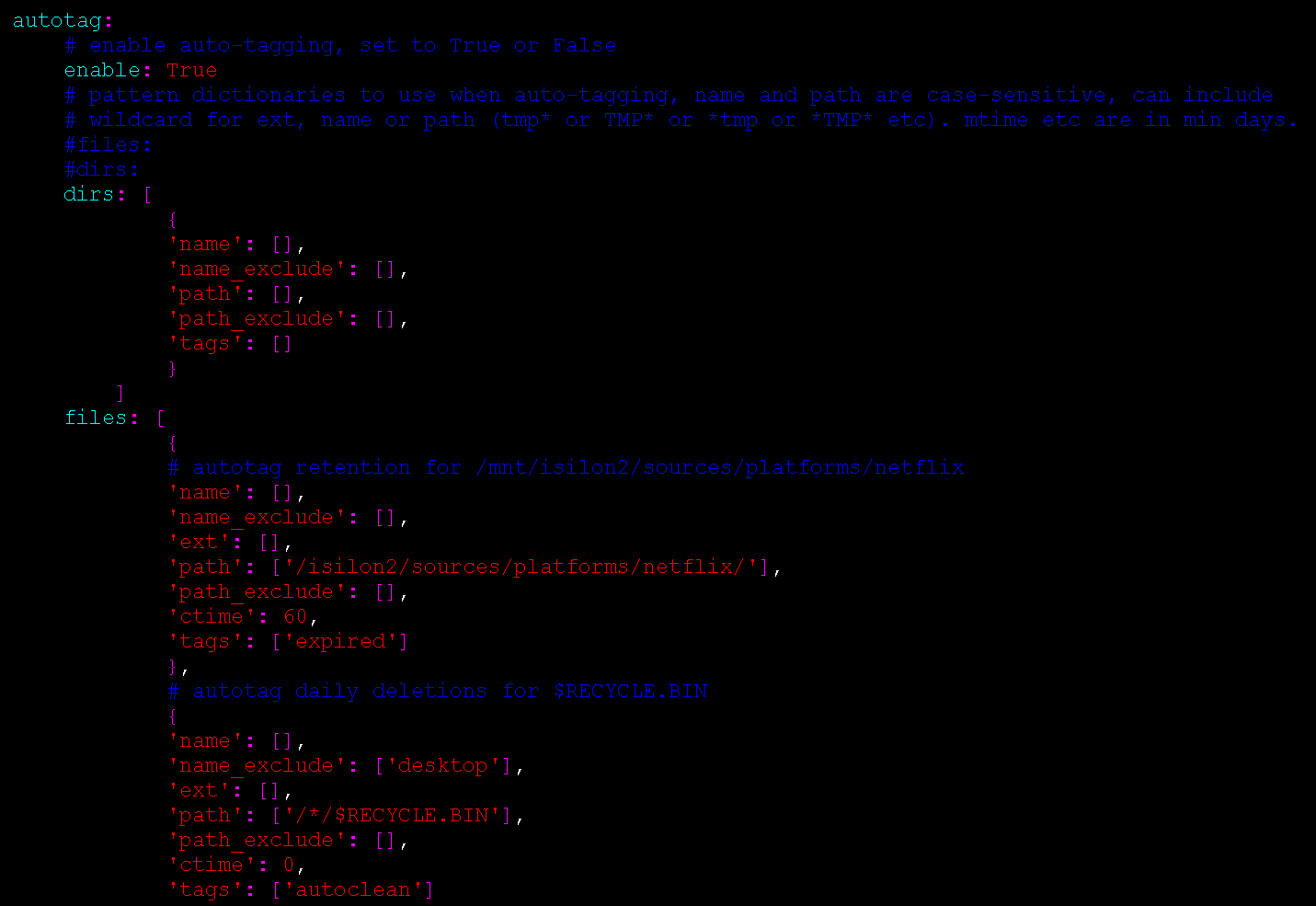

In the following example, the rules will:

- Apply the expired tag to files in the netflix folder with a ctime (change time) 60 days or older

- Apply the autoclean tag to files in $RECYCLE.BIN folder

files: [

{

# autotag retention for /mnt/isilon2/sources/platforms/netflix

'name': [],

'name_exclude': [],

'ext': [],

'path': ['^/isilon2/sources/platforms/netflix'],

'path_exclude': [],

'ctime': 60,

'mtime': 0,

'atime': 0,

'tags': ['expired']

},

# autotag daily deletions for $RECYCLE.BIN

{

'name': [],

'name_exclude': ['desktop.ini'],

'ext': [],

'path': ['^/*/$RECYCLE.BIN'],

'path_exclude': [],

'ctime': 0,

'mtime': 0,

'atime': 0,

'tags': ['autoclean']

}

]

Autotag Keys:

name: a list of file/directory names (case-sensitive)name_exclude: a list of file/directory names to exclude (case-sensitive)ext: a list of file extensions (without the .) (use lowercase - all file extensions are stored as lowercase in the index)path: a list of paths (parent_path field, case-sensitive)path_exclude: a list of paths to exclude (parent_path field, case-sensitive)ctime: change time at least this number or older (days)mtime: modified time at least this number or older (days)atime: access time at least this number or older (days)tags: a list of tags to apply if the item matches

Note: name, name_exclude, path, path_exclude, and ext all use Python re.search (regular expression). All list items should be in quotes and separated by comma

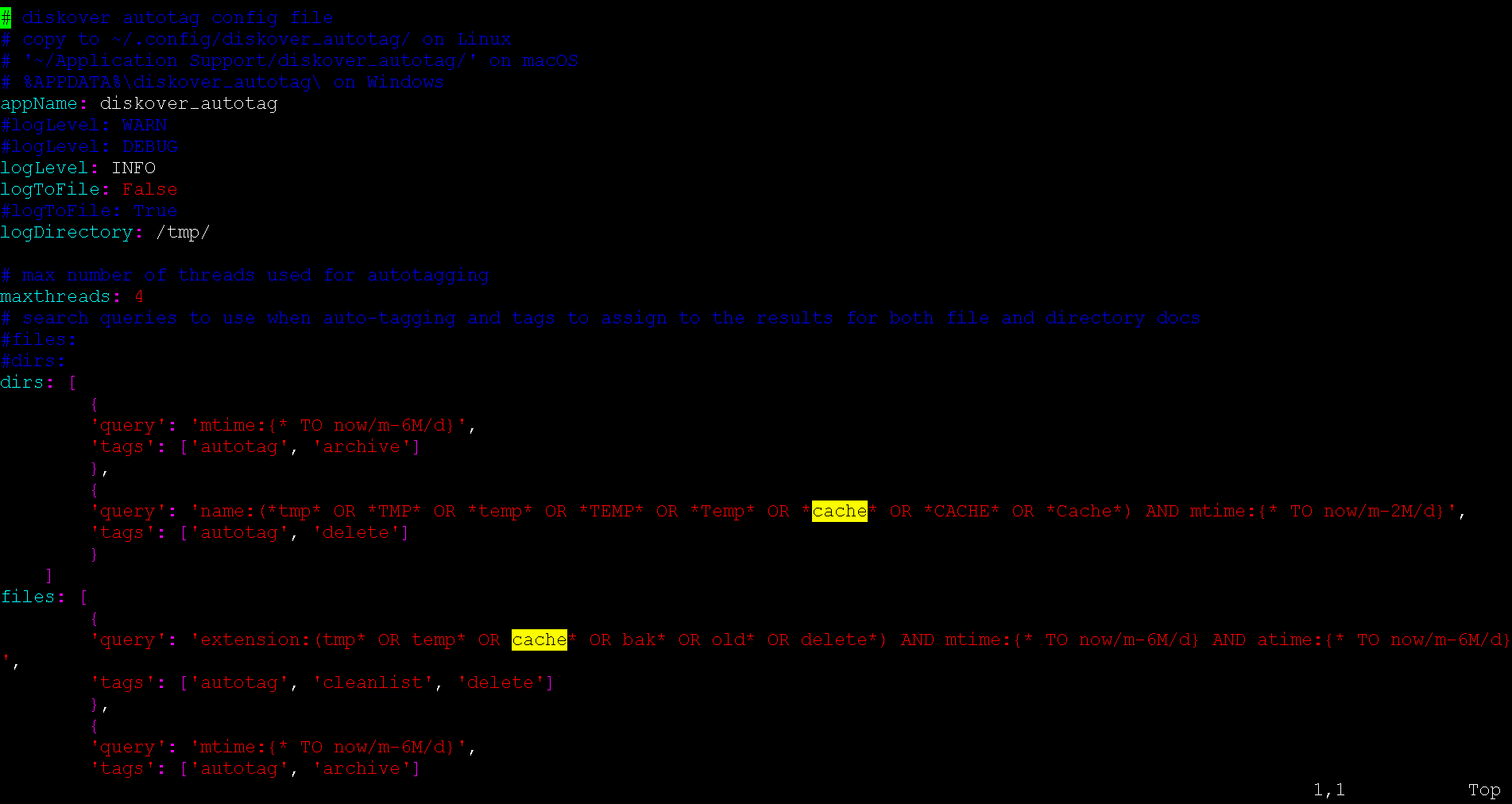

Autotag an Existing Index (Post Index Process)

Tag application can be executed via a shell to an existing index (post actual index process).

🔴 Check that you have the config file in ~/.config/diskover_autotag/config.yaml, if not, copy from the default config folder in configs_sample/diskover_autotag/config.yaml.

🔴 To configure post index autotag rules:

vi /root/.config/diskover_autotag/config.yaml

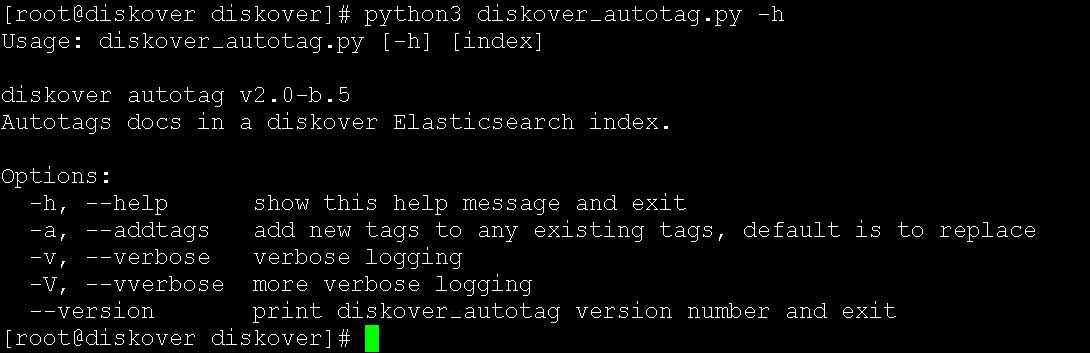

🔴 Run manually from shell:

cd /opt/diskover

🔴 Post indexing plugins are located in plugins_postindex/ directory.

python3 diskover-autotag.py diskover-<indexname>

🔴 Usage:

python3 diskover_autotag.py -h

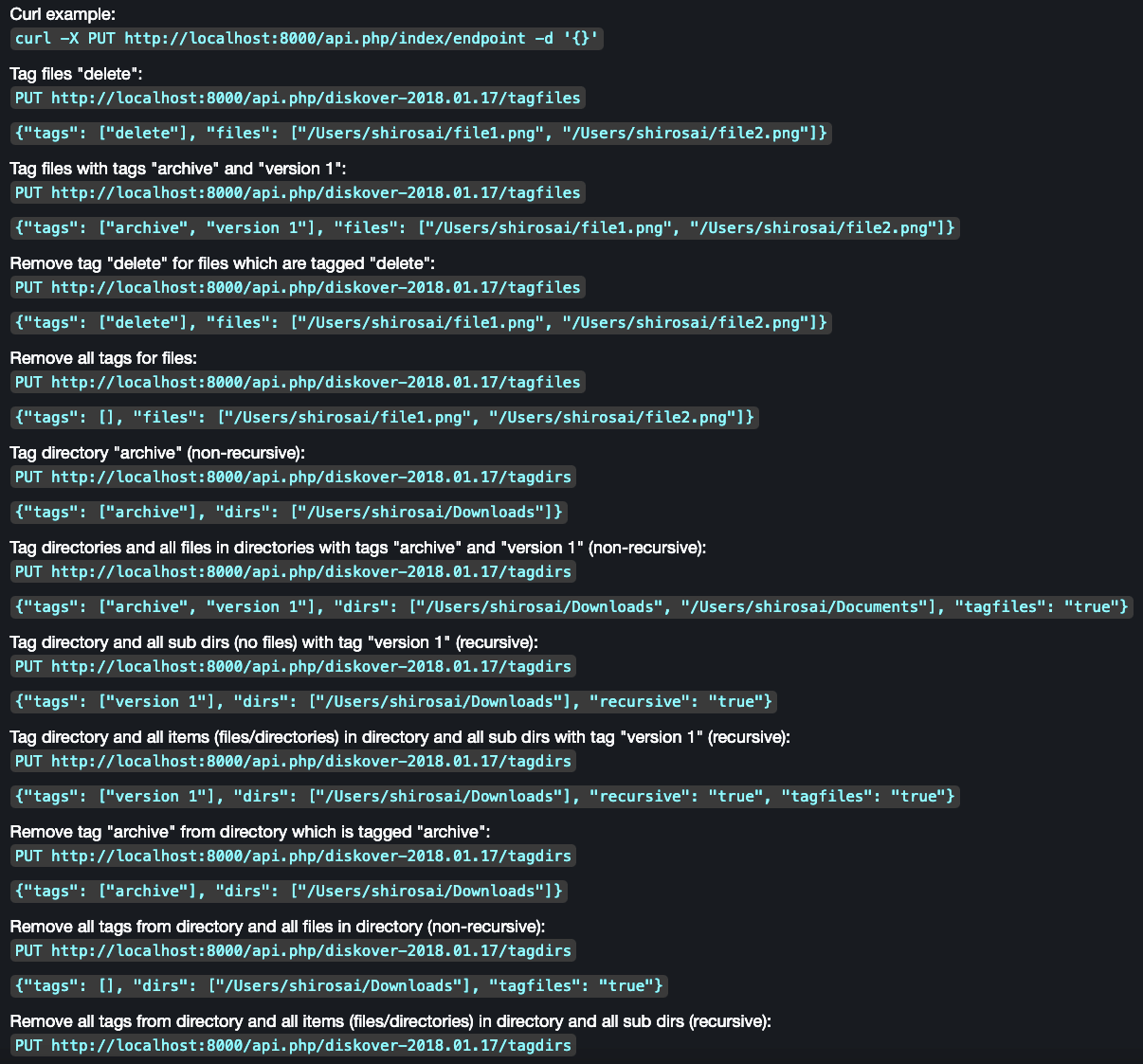

Tag Application via Diskover API

Tags can be applied via the Diskover API. Updating file/directory tags is accomplished with the PUT method. A JSON object is required in the body of PUT method. The call returns the status and number of items updated.

Examples of tag application that can be found in the Diskover-Web user interface under gear icon > Help page, as well as in the Diskover SDK and API Guide.

Tag Application via Harvest Plugins

Harvest plugins are typically designed to:

- Harvest metadata from file headers, for example the media info plugin .

- Correlate the Diskover index to some other business application, for example an order management or scheduling system.

These plugins typically run a) during index process, or b) on a scheduled basis. Harvest plugins designed to correlate various systems or applications typically use a key identifier within a directory/filename (example: research grant ID) to harvest additional metadata from another database (for example: Primary Investigator for specific grant ID). Therefore, tags will be reapplied if a key identifier and connection to external database exists at time of re-index/harvest.

Tag Application via Manual Processes

In general, manual processes are a) difficult to scale, and 2) prone to inconsistencies. Therefore, careful consideration must be applied when determining when to use manual tag application. Ideally, manual tags should be used sparingly or as part of a workflow “approval” or RACI model. The following outlines sample tag configuration when used as part of workflow approval processes.

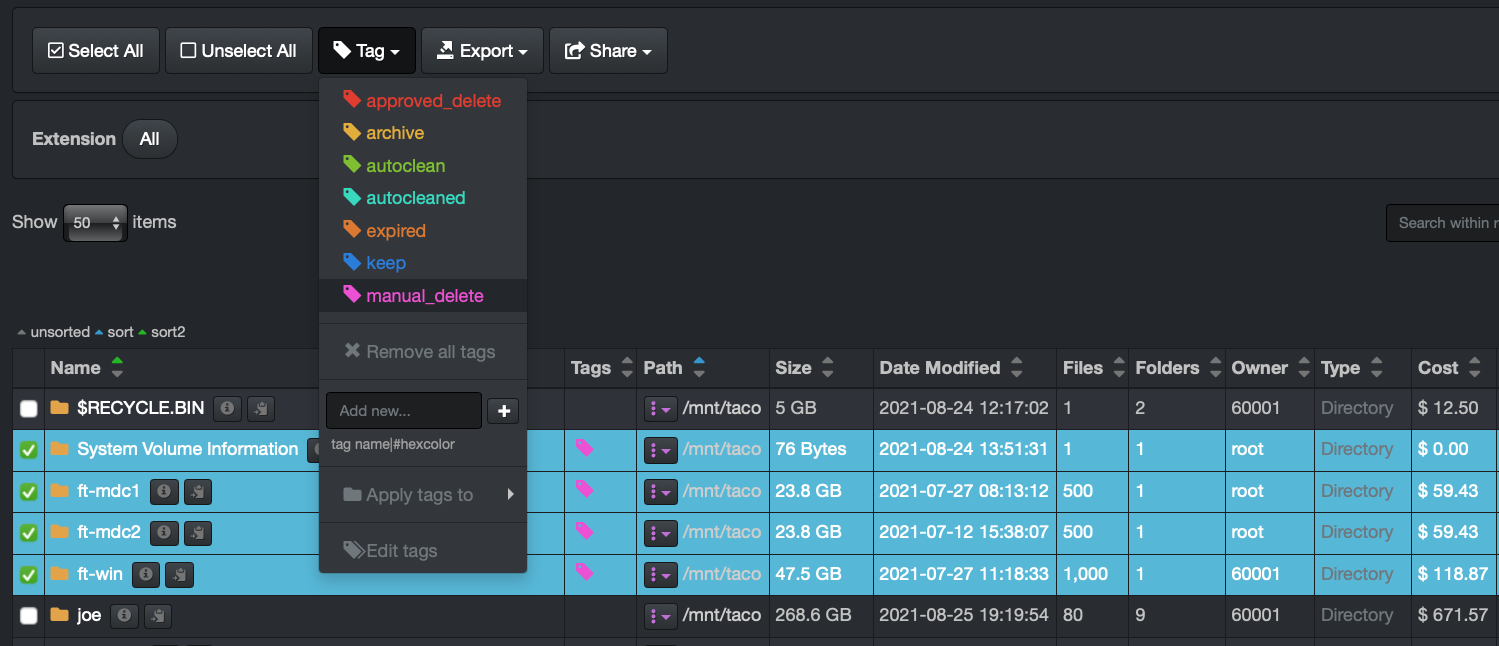

Apply a Tag Manually

From the file search page > select one or multiple files and/or directories > select the Tag drop-down list > apply a tag.

Note: A file or directory can be assigned several tags.

Removal of a Single Tag Manually

Basically, redo the same steps as above > from the file search page > select one or multiple files and/or directories with the specific tag that you want to remove > select the Tag drop-down list > select the tag you want to remove.

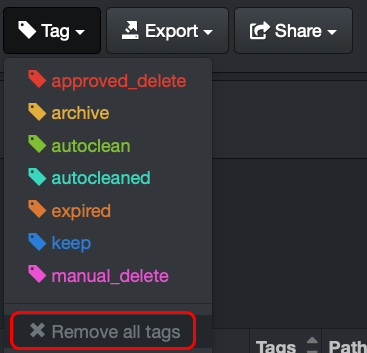

Removal of All Tags Manually

From the file search page > select one or multiple files and/or directories with any tag(s) that you want to remove > select the Tag drop-down list > select Remove all tags.

WARNING! This operation cannot be undone.

Tags Migration via Tag Copier Plugin | Copying from One Index to Another

The Diskover indexing process creates a new index or point in time snapshot of the volume at time of index. Tags that are applied during the indexing process via autotag rules will be automatically re-applied based on the configuration rules in the configuration file.

However, the Diskover indexer has no knowledge of tags applied outside of the indexing process, those tags that have been applied: a) manually, b) via Diskover API, or c) via plugins thru the API. Therefore, these tags must be migrated from one index to the next.

Please refer to our dedicated chapter Tag Copier Plugin for more information.

Tag Migration / Copy from Previous Index via Shell

The following describes how to initial a tag migration/copy from a shell.

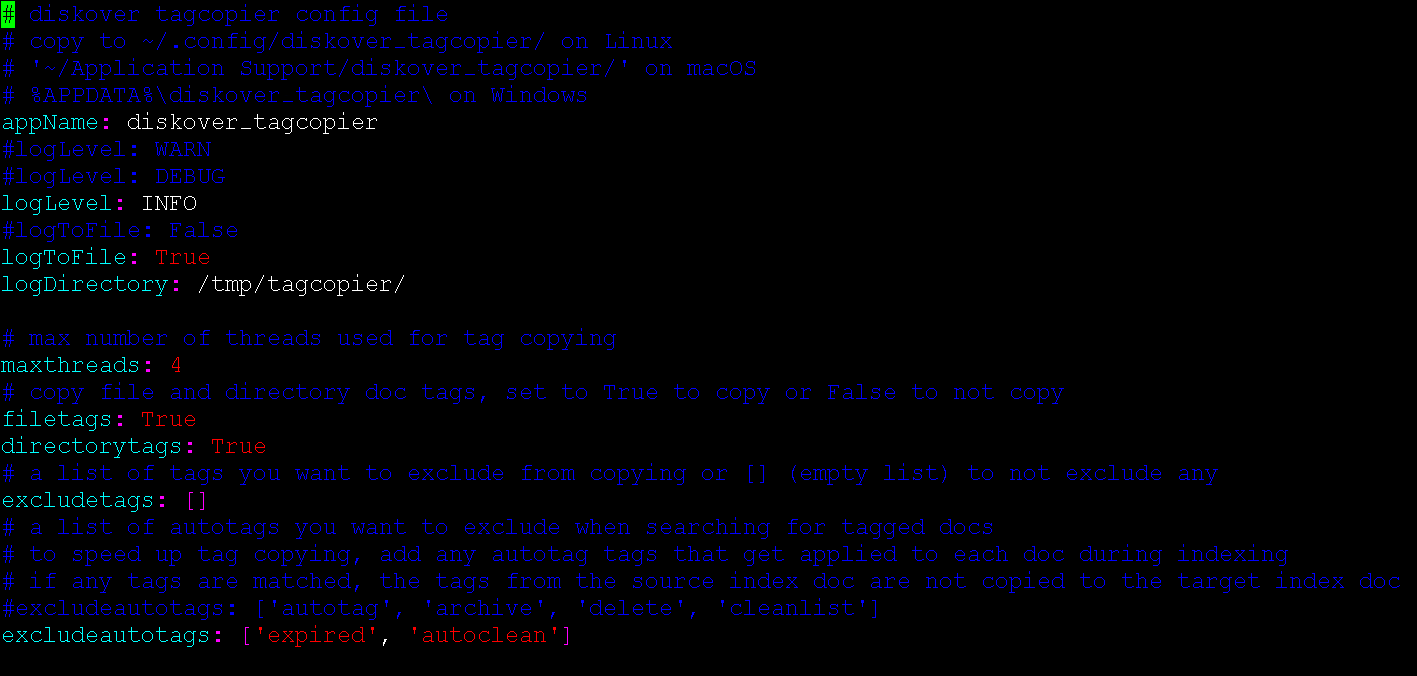

🔴 Confirm existing of tagcopier configuration file:

cat /root/.config/diskover_tagcopier/config.yaml

🔴 If the file does not exist:

mkdir /root/.config/diskover_tagcopier/

cp /opt/diskover/configs_sample/diskover_tagcopier/config.yaml /root/.config/diskover_tagcopier/

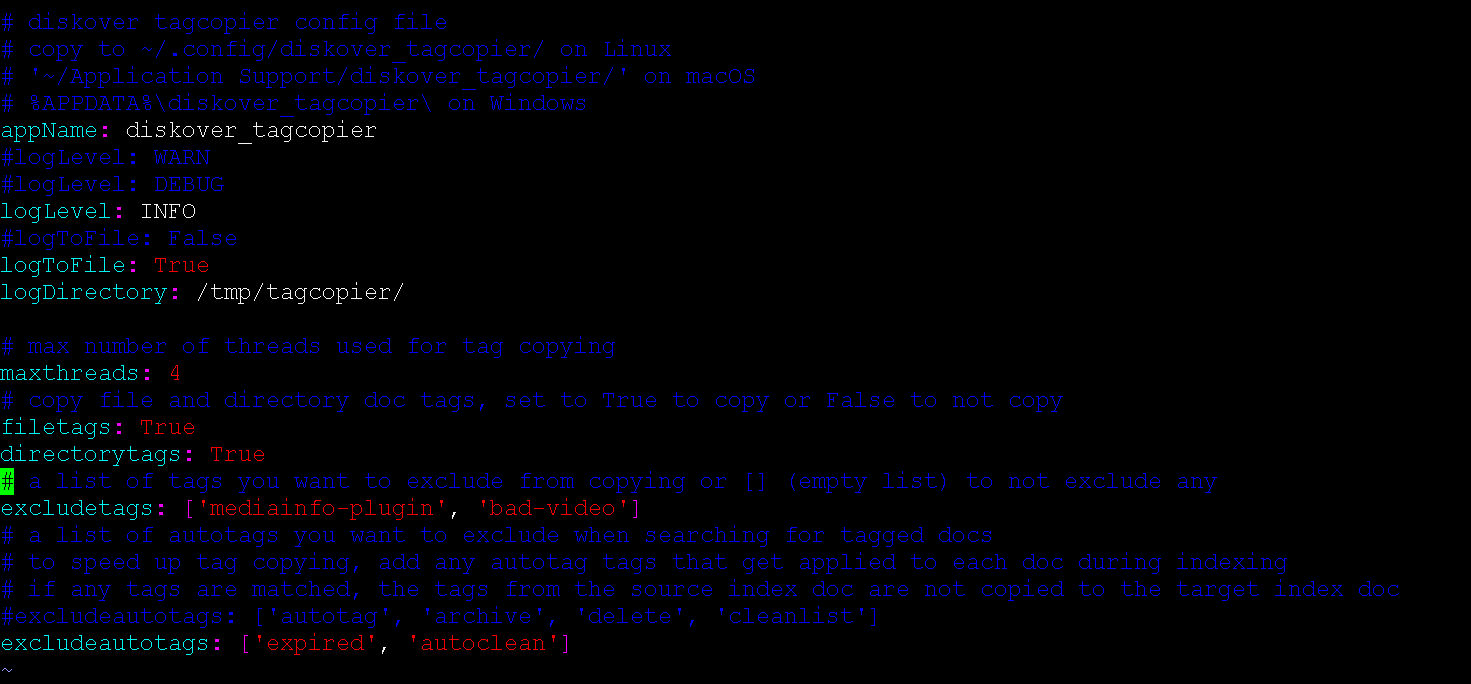

🔴 Configure any tags or tags applied via autotag process to exclude from migration:

🔴 Copy tags from source to destination index:

cd /opt/diskover/

python3 diskover_tagcopier.py diskover-<source_indexname> diskover-<dest_indexname>

🔴 To view usage options:

python3 diskover_tagcopier.py -h

Tag Migration / Copy from Previous Index via Task Panel

Tags can also be migrated from one index to the next index via the Diskover-Web task panel, see how to Configure Indexing Tasks to Migrate Tags from Previous Index.

Tags Display and Editor within Diskover-Web User Interface

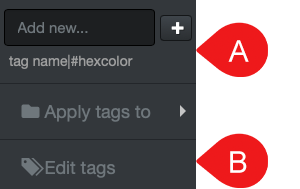

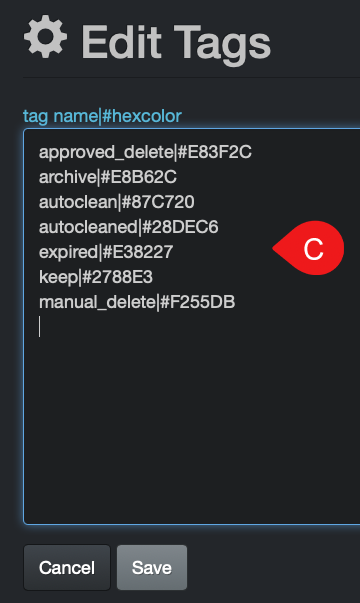

The tags displayed within the Diskover-Web user interface can be customized by users with an admin account and can be achieved from 1) the file search page or 2) Analytics menu > Tags.

Format to follow for tags: tag name|#hexcolor (instead of #hexcolor code, you can also type a simple color name, ex: red)

Note: Autotags have a gray color by default in the user interface.

A) From the file search page > select any file and/or directory > Tag drop-down list > type/add a new tag in the Add new field.

B) From the file search page > select any file and/or directory > Tag drop-down list > Edit tags will open a C) window and allow you to add, delete or edit exiting tags > Save when done editing.

C) From Analytics menu > select Tags > Edit tags button > will open C) window and allow you to add, delete or edit exiting tags > Save when done editing.

Reporting

Diskover provides powerful reporting capabilities. Reports can be generated to align with business context and can be constructed from any Elasticsearch query. Therefore, any combination of names, tags, metadata fields, etc. can be used to construct business facing reports.

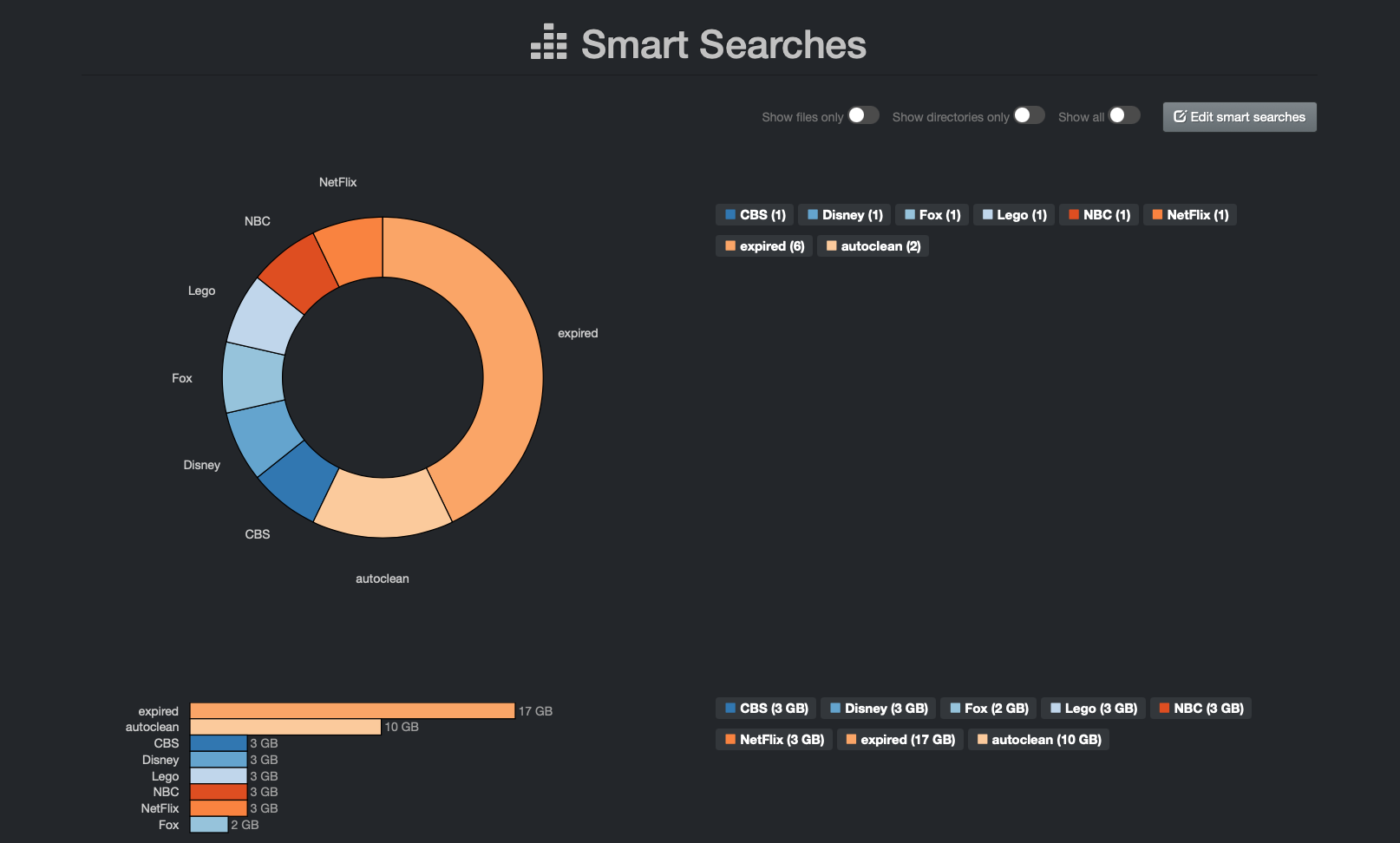

Smart Searches

Smart Searches provide a mechanism to create repeatable reports or bookmarks based on search queries. Any combination of names, tags, metadata fields, etc. can be used to construct business facing reports.

Any users can access Smart Searches, but only users with an admin level account can add, edit or delete queries.

Accessing Smart Searches

Within the Diskover-Web user interface, Smart Searches is located under Analytics > Smart Searches.

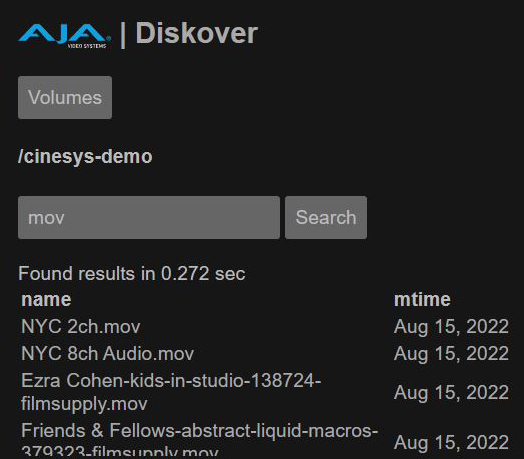

The following provides an example smart search configuration by client - The names in the report are fictitious and for demonstration only:

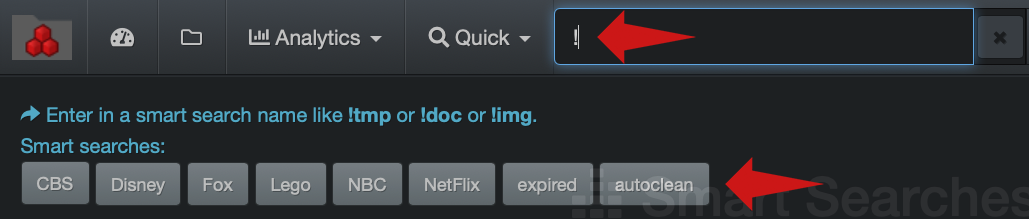

Business users can click on a report link in the Smart Searches page and/or launch the query by typing the queryname in the search bar starting with !, ex: !queryname

When typing a ! in the search bar, the list of existing Smart Searches reports will appear under the search bar and you can just click on the one you want to launch or type the rest of the name of the report in the search bar:

Using Smart Searches

Please refer to the Diskover User Guide for information on how to use Smart Searches.

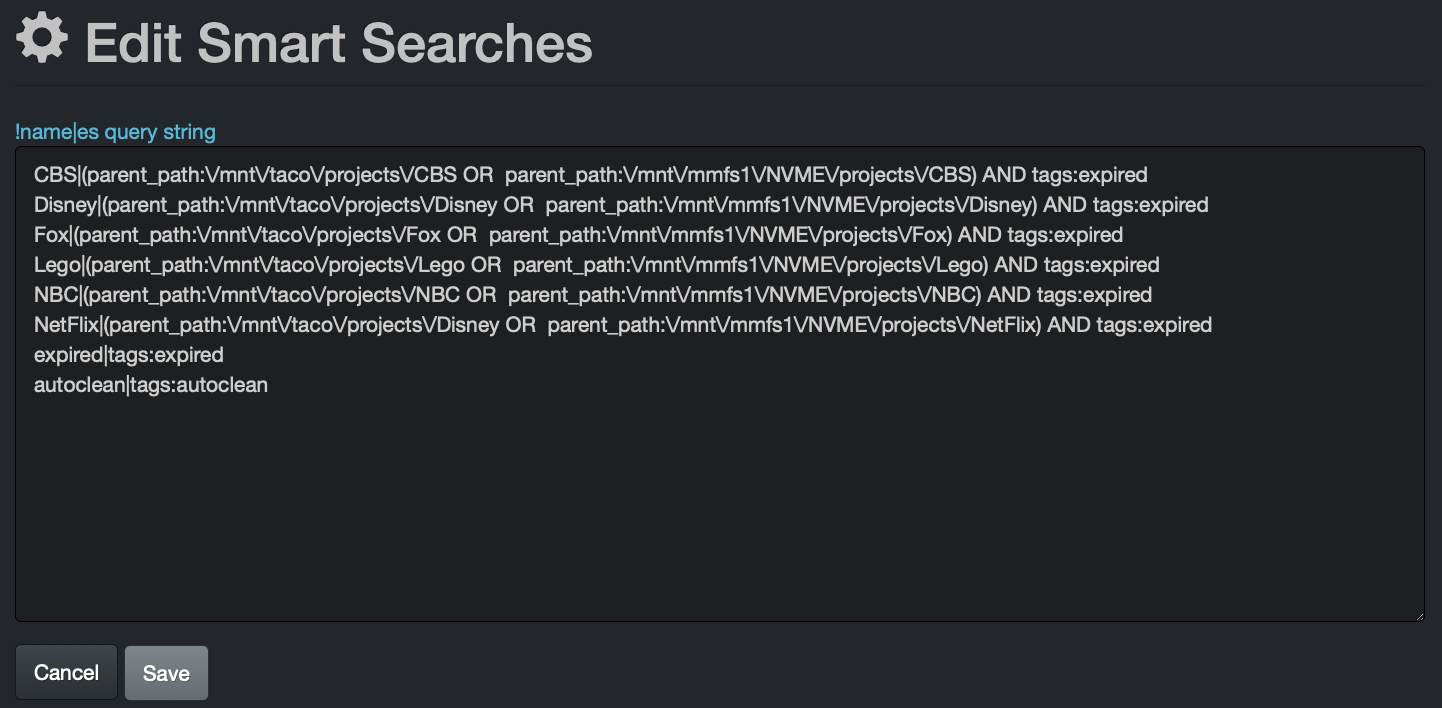

Smart Searches Configuration

By default, Diskover has preconfigured reports by file types/extensions, but any type of queries with single or mixed criteria can be added.

- To add, edit or delete a report > Analytics > Smart Searches > click the Edit smart searches button.

- For queries syntax and rules, please refer to the Diskover User Guide:

- Queries with built-in search tools

- Syntax and rules for manual queries

Smart searches queries need to be built in the following format: !report name|query

The above example report by client was configured using these smart search logic queries - you can copy the following queries and replace by your company's own variables to achieve similar results:

CBS|(parent_path:\/mnt\/taco\/projects\/CBS OR parent_path:\/mnt\/mmfs1\/NVME\/projects\/CBS) AND tags:expired

Disney|(parent_path:\/mnt\/taco\/projects\/Disney OR parent_path:\/mnt\/mmfs1\/NVME\/projects\/Disney) AND tags:expired

Fox|(parent_path:\/mnt\/taco\/projects\/Fox OR parent_path:\/mnt\/mmfs1\/NVME\/projects\/Fox) AND tags:expired

Lego|(parent_path:\/mnt\/taco\/projects\/Lego OR parent_path:\/mnt\/mmfs1\/NVME\/projects\/Lego) AND tags:expired

NBC|(parent_path:\/mnt\/taco\/projects\/NBC OR parent_path:\/mnt\/mmfs1\/NVME\/projects\/NBC) AND tags:expired

NetFlix|(parent_path:\/mnt\/taco\/projects\/Disney OR parent_path:\/mnt\/mmfs1\/NVME\/projects\/NetFlix) AND tags:expired

expired|tags:expired

autoclean|tags:autoclean

Reports

Reports provide a mechanism to create repeatable reports and search/analyze top results.

Any users can access Reports, but only users with an admin level account can add, edit or delete queries.

Accessing Reports

Within the Diskover-Web user interface, Reports is located under Analytics > Reports.

The following report provides an example configured by order status when using the Xytech Order Status Plugin.

Click here for a full-screen view.

Using Reports

Please refer to the Diskover User Guide for information on how to use Reports.

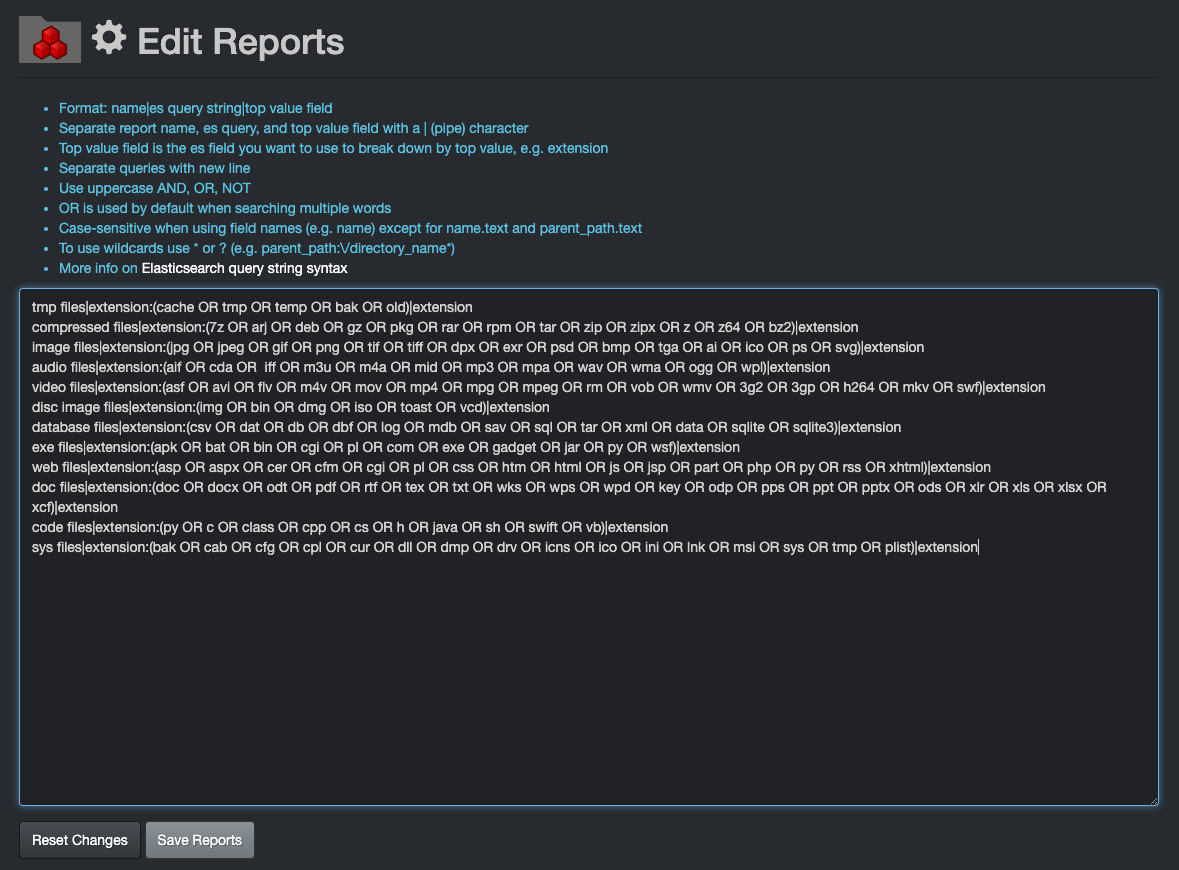

Reports Configuration

By default, Diskover has preconfigured reports by file types/extensions, but any type of queries with single or mixed criteria can be added and/or replace the default queries.

- To add, edit or delete a query > Analytics > Reports > click the Edit reports button.

- For queries syntax and rules, please refer to the Diskover User Guide:

- Queries with built-in search tools

- Syntax and rules for manual queries

Reports queries need to be built in the following format: report name|query|top value

🔴 This is the query that was used in the example above to report on the Xytech order phase:

Xytech Phase|xytech:*|xytech.phase

🔴 The example below is the default query by file type the first time you open Reports:

tmp files|extension:(cache OR tmp OR temp OR bak OR old)|extension

compressed files|extension:(7z OR arj OR deb OR gz OR pkg OR rar OR rpm OR tar OR zip OR zipx OR z OR z64 OR bz2)|extension

image files|extension:(jpg OR jpeg OR gif OR png OR tif OR tiff OR dpx OR exr OR psd OR bmp OR tga OR ai OR ico OR ps OR svg)|extension

audio files|extension:(aif OR cda OR iff OR m3u OR m4a OR mid OR mp3 OR mpa OR wav OR wma OR ogg OR wpl)|extension

video files|extension:(asf OR avi OR flv OR m4v OR mov OR mp4 OR mpg OR mpeg OR rm OR vob OR wmv OR 3g2 OR 3gp OR h264 OR mkv OR swf)|extension

disc image files|extension:(img OR bin OR dmg OR iso OR toast OR vcd)|extension

database files|extension:(csv OR dat OR db OR dbf OR log OR mdb OR sav OR sql OR tar OR xml OR data OR sqlite OR sqlite3)|extension

exe files|extension:(apk OR bat OR bin OR cgi OR pl OR com OR exe OR gadget OR jar OR py OR wsf)|extension

web files|extension:(asp OR aspx OR cer OR cfm OR cgi OR pl OR css OR htm OR html OR js OR jsp OR part OR php OR py OR rss OR xhtml)|extension

doc files|extension:(doc OR docx OR odt OR pdf OR rtf OR tex OR txt OR wks OR wps OR wpd OR key OR odp OR pps OR ppt OR pptx OR ods OR xlr OR xls OR xlsx OR xcf)|extension

code files|extension:(py OR c OR class OR cpp OR cs OR h OR java OR sh OR swift OR vb)|extension

sys files|extension:(bak OR cab OR cfg OR cpl OR cur OR dll OR dmp OR drv OR icns OR ico OR ini OR lnk OR msi OR sys OR tmp OR plist)|extension

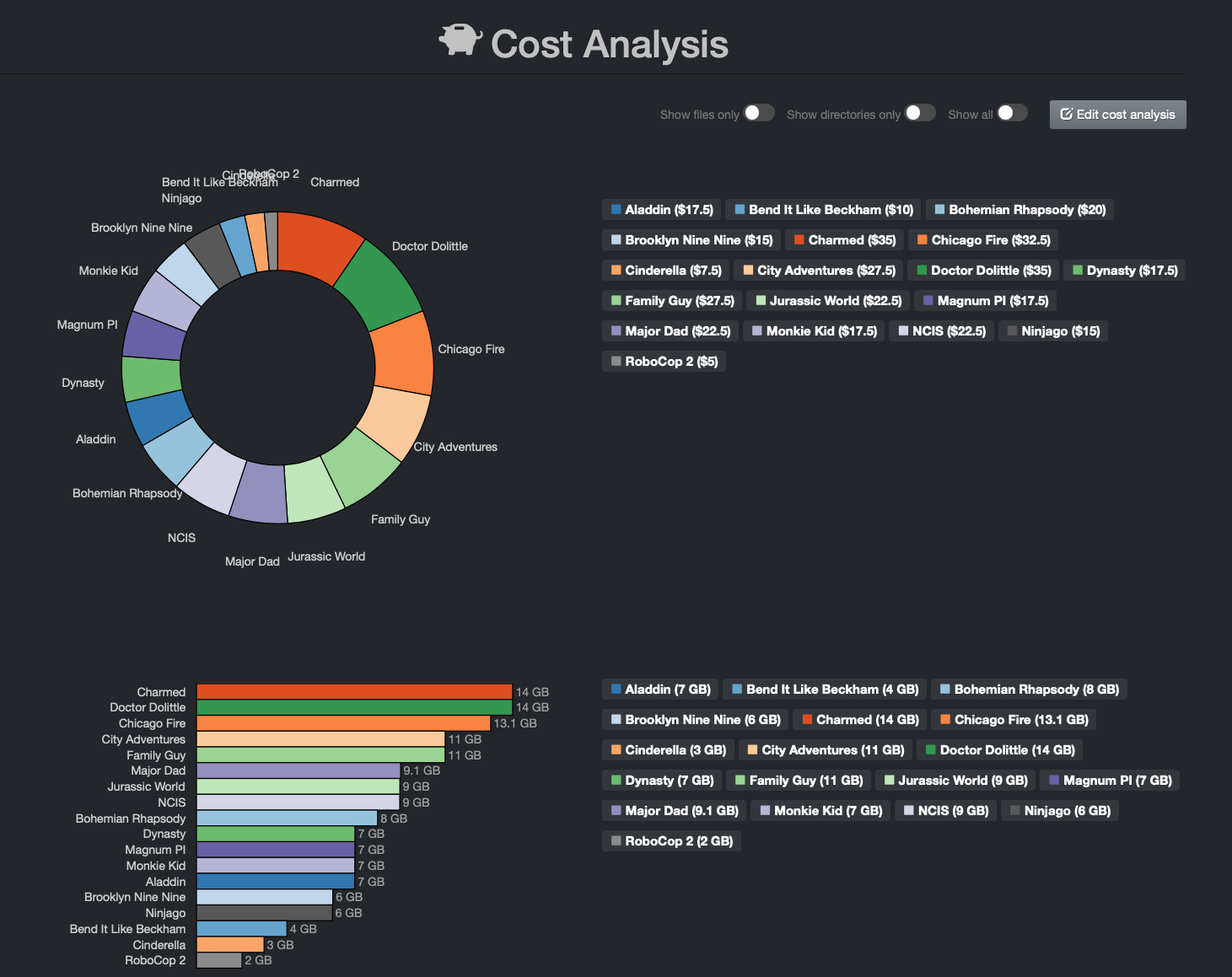

Storage Cost Reporting

Cost reporting can be generated to align with business context and can be constructed from any Elasticsearch query. Therefore, any combination of names, tags, metadata fields, etc. can be used to construct business facing reports.

Storage cost can be set globally or per storage volume, directory, etc. This tool is designed to control operating costs by 1) charging clients accurately for storage of their projects, and 2) clean-up/data curation incentivizing.

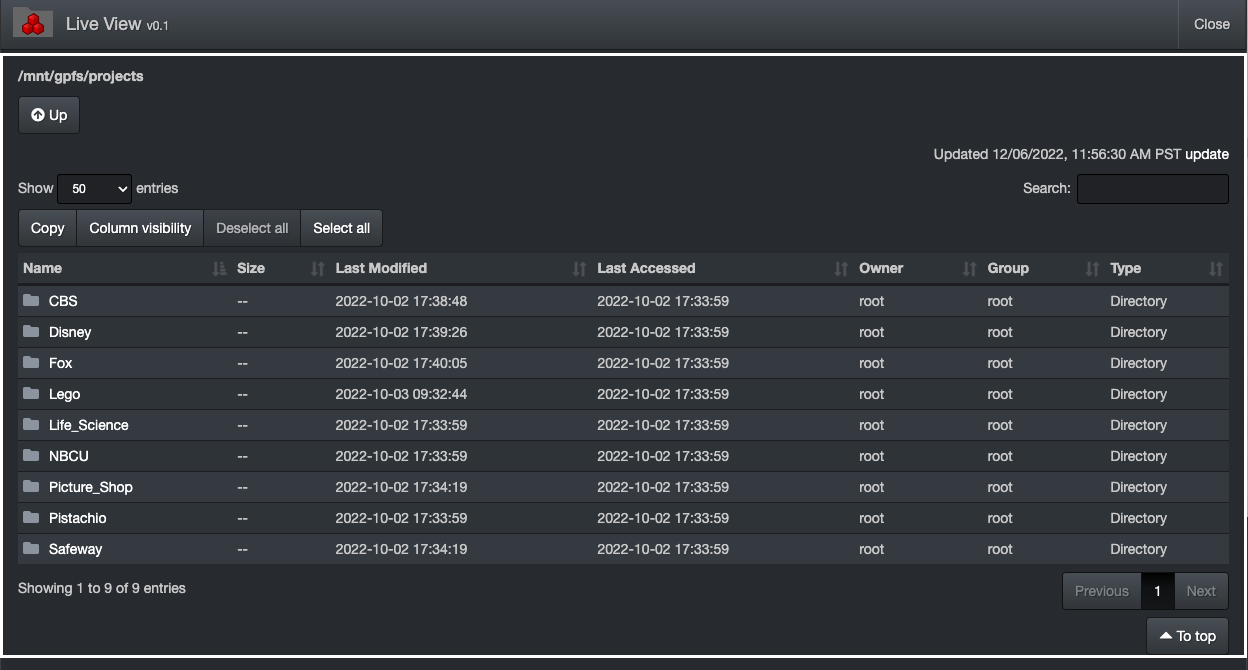

The following report provides an example of cost analysis by client - The names in the report are fictitious and for demonstration only:

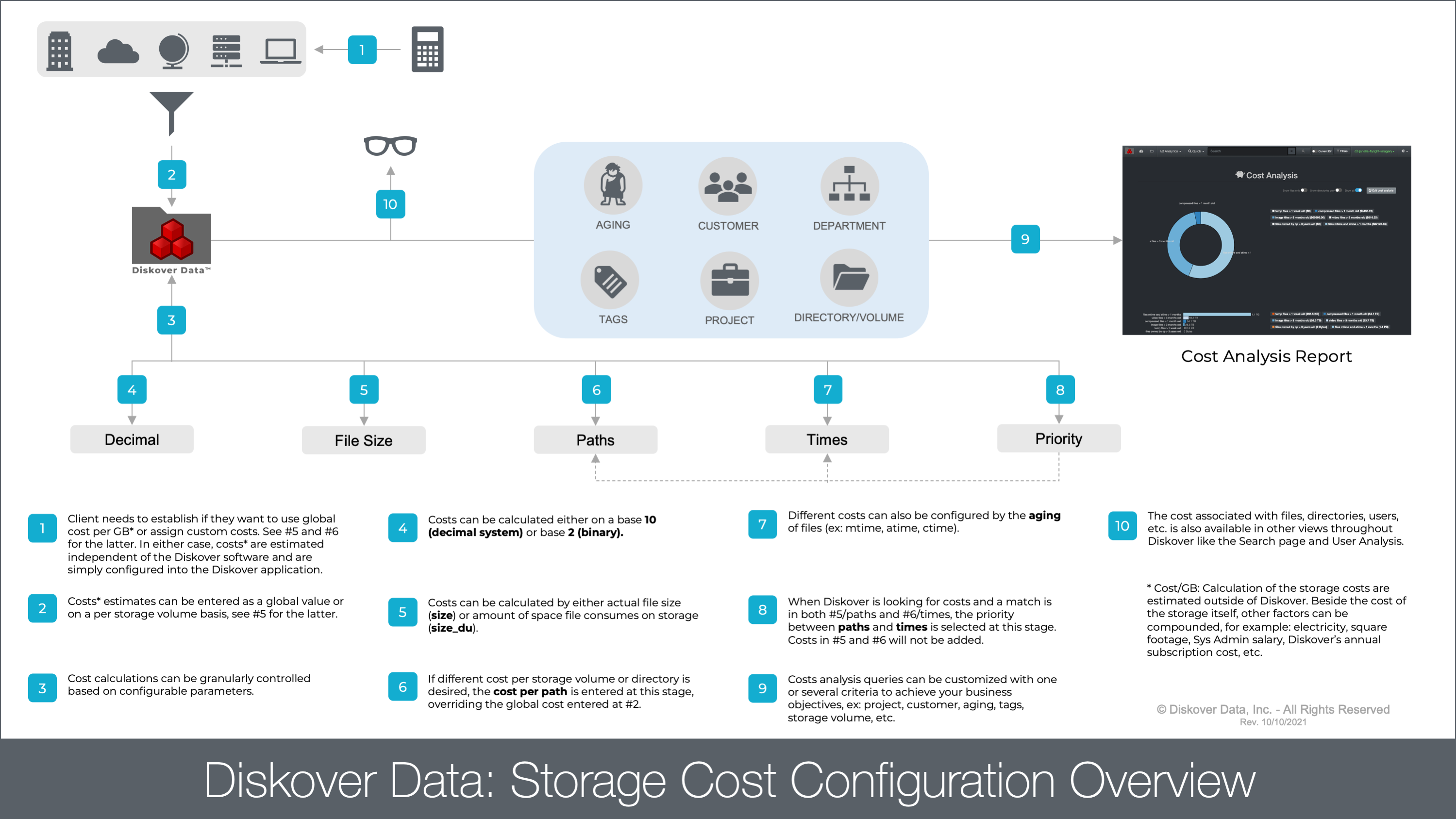

Storage Cost Configuration Overview Diagram

Click here for a full view of the diagram.

Calculation of Cost Estimates

The storage cost can either be estimated globally or by storage volume, directory, etc. The estimations need to be done outside of Diskover. Beside the cost of the storage itself, other factors can be compounded like electricity, service contract, System Administrator’s salary, subscription fees, etc.

Estimation needs to be estimated and configured per gigabyte.

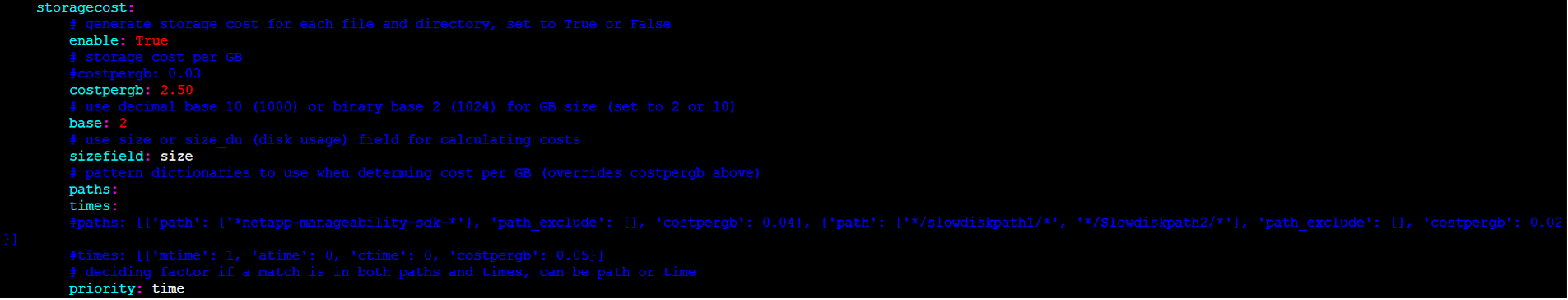

Storage Cost Configuration

🔴 Once the estimation per GB is achieve, open a terminal session:

vim /root/.config/diskover/config.yaml

🔴 Enable storagecost by changing to True:

enable: True

🔴 Enter global estimated cost per GB, example below at $2.50 per GB:

costpergb: 2.50

🔴 Enter base preference by typing 10 for decimal or 2 for binary, example below is set for binary:

base: 2

🔴 Enter size preference by typing size for file size or size_du for disk usage, example below is set for file size:

sizefield: size

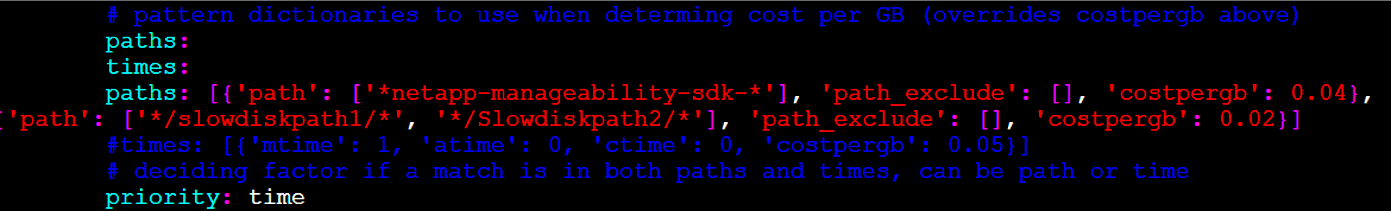

🔴 Different costs can be assigned to specific paths, overriding the global cost per GB as described above, providing for very granular cost calculations:

paths: [{'path': ['*fast_storage*'], 'path_exclude': [], 'costpergb': 4.50}]

Different costs can also be configured by file aging, costs can be assigned based on age of data to incentivize movement of older data to less expensive storage:

🔴 In the case where storage cost could read either the paths or times override, you need to assign a priority preference either by path or time, example below is set for time:

priority: time

Accessing Storage Costs

Within the Diskover-Web user interface, cost information is displayed in different locations:

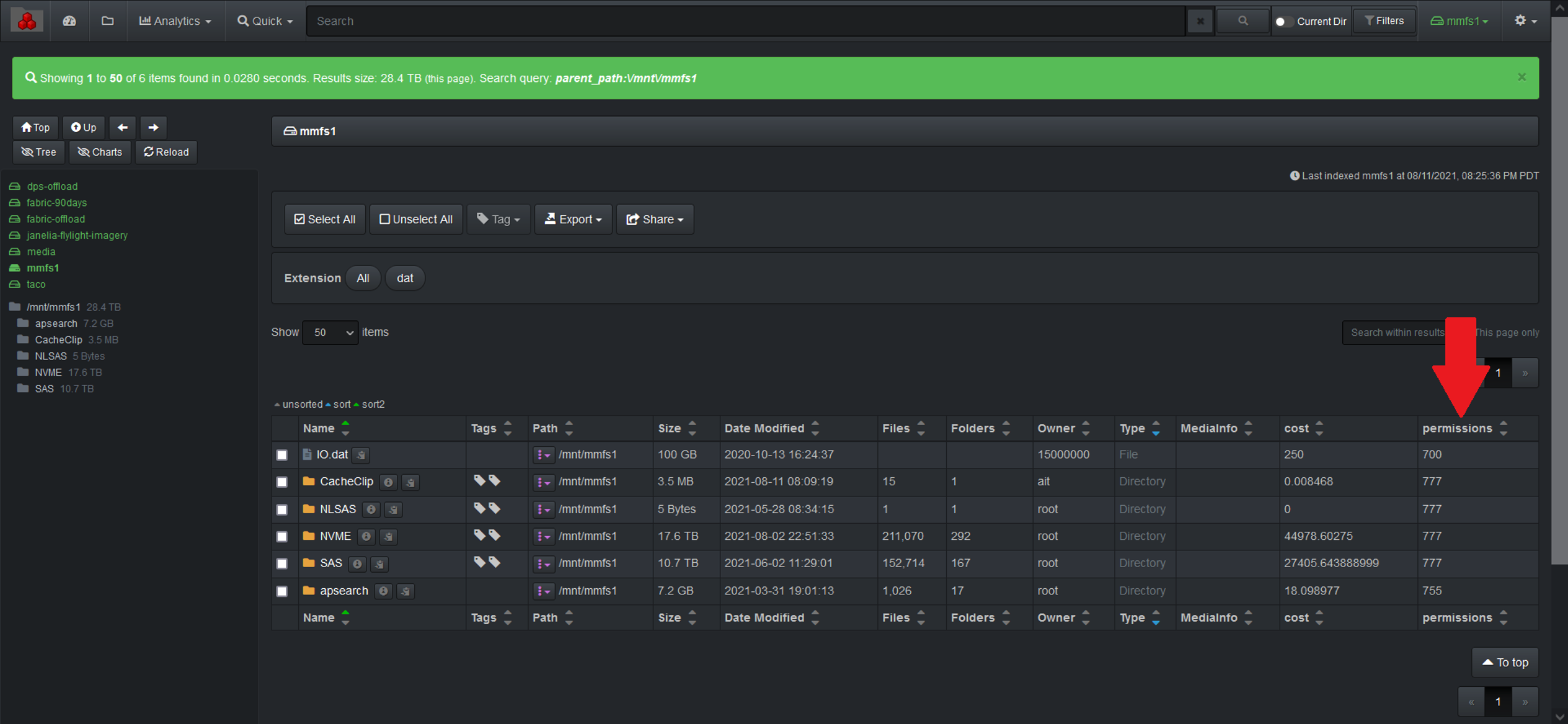

- File search page > Cost column in search results pane (Cost column might need to be unhidden from your Settings > Hide fields in search results)

- Analytics > Cost Analysis > to access a report, users can click on a report link.

- Analytics > User Analysis

Using Cost and User Analysis Reports

Please refer to the Diskover User Guide:

Cost Analysis Reports Configuration

The Cost Analysis reports are repeatable queries which can be customized and are located in Analytics > Cost Analysis. Any users can access the reports, but only users with an admin level account can add/edit/delete reports.

- To add, edit or delete a report > Analytics > Cost Analysis > click the Edit cost analysis button.

- For queries syntax and rules, please refer to the Diskover User Guide:

- Queries with built-in search tools

- Syntax and rules for manual queries

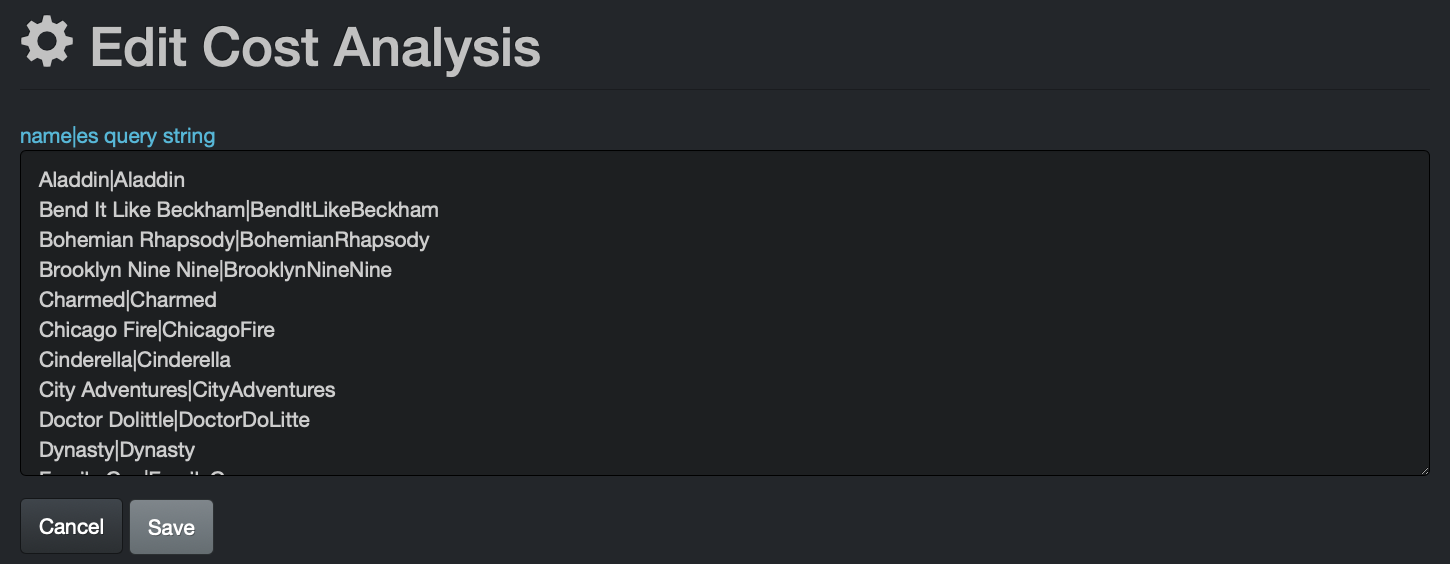

Cost Analysis queries need to be built in the following format: report name|querycriteria

Here are the very simple queries from the example displayed at the beginning of this section - you can copy the following queries and replace by your company's variables to achieve similar results:

Aladdin|Aladdin

Bend It Like Beckham|BendItLikeBeckham

Bohemian Rhapsody|BohemianRhapsody

Brooklyn Nine Nine|BrooklynNineNine

Charmed|Charmed

Chicago Fire|ChicagoFire

Cinderella|Cinderella

City Adventures|CityAdventures

Doctor Dolittle|DoctorDoLitte

Dynasty|Dynasty

Family Guy|FamilyGuy

Jurassic World|JurassicWorld

Magnum PI|MagnumPI

Major Dad|MajorDad

Monkie Kid|MonkieKid

NCIS|NCIS

Ninjago|Ninjago

RoboCop 2|RoboCop2

Task Configuration Files

Configuration of File Locations

Diskover worker nodes use a series of YAML files for various configuration settings. A worker node can be a local distributed node that can perform a variety of tasks from indexing, tagging, check for duplicates, autoclean, or any other custom task. YAML is a human-readable data serialization standard that can be used in conjunction with all programming languages and is often used for configuring file settings.

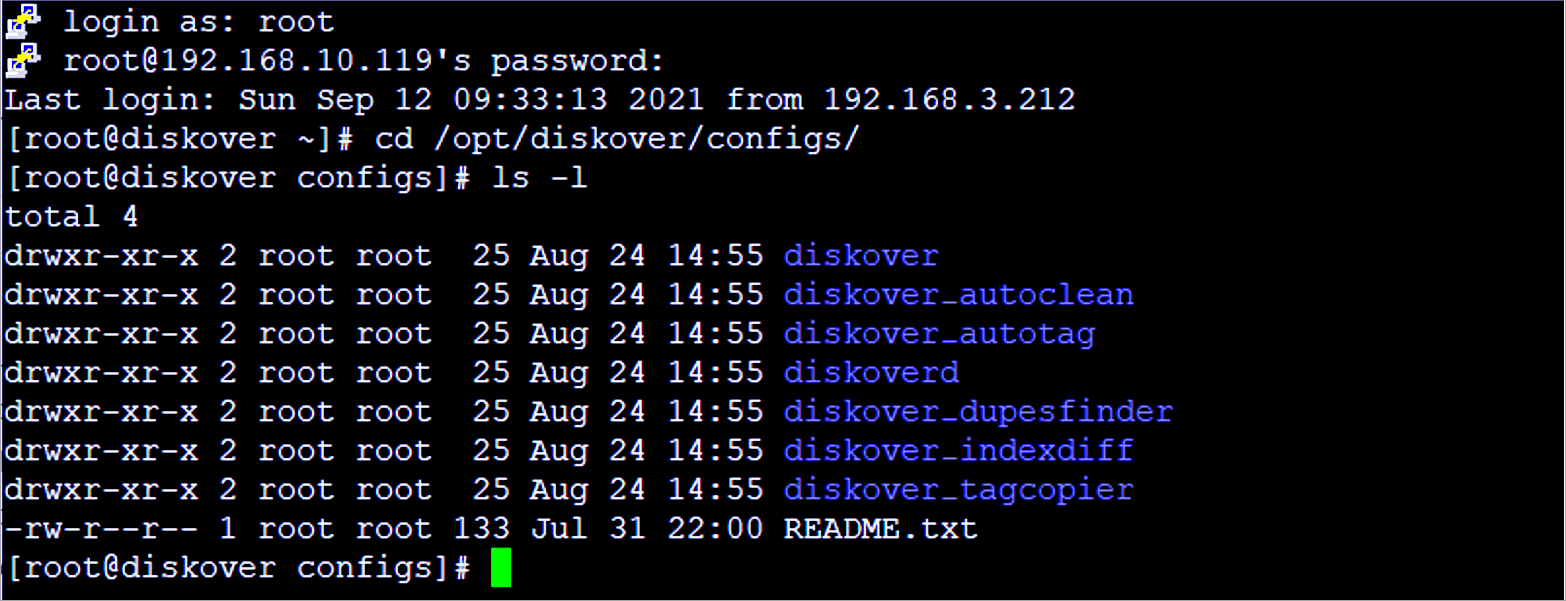

Diskover task workers use a separate directory for each config file. At time of installation, example config.yaml files are located in /opt/diskover/configs/

At time of installation, these files are copied to:

- Linux:

/root/.config/ - Windows:

%APPDATA%\ - MacOS:

~/.config/

During installation, at least one index worker is configured, that configuration file is located at: /root/.config/diskover/config.yaml

Using Alternate Configuration Files

Diskover provides default configuration files for tasks which are located in /opt/diskover/configs_sample

Diskover provides the ability for tasks to use alternate configuration files to match the task requirements via the altconfig variable. Alternate configuration files can be invoked both via the command line and with the Diskover Task Panel.

Alternate Configuration Invocation Via Command Line

Worker nodes typically launch tasks from the Diskover application directory. Depending on the worker’s task, different environment variables need to be exported to invoke usage of the desired alternate configuration file. The convention for exporting alternate configuration files is:

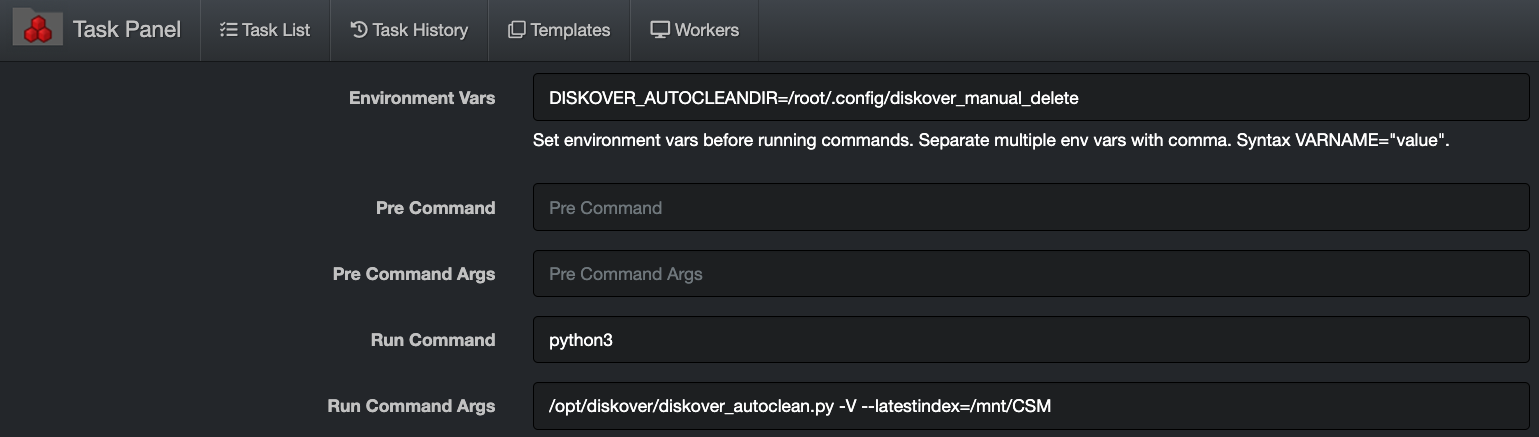

Alternate Configuration Export Variable: Is the DISKOVER_NAMEOFTASKDIR where NAMEOFTASK equals the name of python executable, for example the export variable for diskover_autoclean.py is DISKOVER_AUTOCLEANDIR

There are separate configs for diskover crawler, autotag, dupes-finder, diskoverd, etc. The default config files are not used by diskover crawler, etc., they are default/sample configs and need to be copied to the appropriate directory based on the OS.

For example, in Linux the config files are in ~/.config/<appName>/config.yaml. Each config file has a setting appName that matches the directory name where the config file is located. For diskover dupes-finder for example, this would be ~/.config/diskover_dupesfinder/config.yaml.

Note: When editing config.yaml files, use spaces in config files, not tabs.

If you get an error message when starting diskover.py like

Config ERROR: diskover.excludes.dirs not found, check config for errors or missing settings from default config., check that your config file is not missing any lines from default/sample config or there are no errors in your config like missing values.

To invoke alternate configuration files instead of the default configuration files:

🔴 Indexing tasks: The DISKOVERDIR variable is used to invoke the alternative configuration file for indexing tasks:

export DISKOVERDIR=/someconfigfile.yaml

🔴 Autoclean tasks: The DISKOVER_AUTOCLEANDIR variable is used to invoke the alternative configuration file for autoclean tasks.

export DISKOVER_AUTOCLEANDIR=/path/alt_config_dir/

🔴 Autotag tasks: The DISKOVER_AUTOTAGDIR variable is used to invoke the alternative configuration file for post-index autotag tasks.

export DISKOVER_AUTOTAGDIR=/path/alt_config_dir/

🔴 Duplicate finder tasks: The DISKOVER_DUPESFINDERDIR variable is used to invoke the alternative configuration file for duplicate finder tasks.

export DISKOVER_DUPESFINDERDIR=/path/alt_config_dir/

🔴 Tag copier tasks: The DISKOVER_TAGCOPIERDIR variable is used to invoke the alternative configuration file for tag copier tasks.

export DISKOVER_TAGCOPIERDIR=/path/alt_config_dir/

Note: It is recommended to test any changes to alternative configuration files via the command line for errors. If you get an error message when starting

diskover.pylike Config ERROR: diskover.excludes.dirs not found, check config for errors or missing settings from the default configuration file. Ensure that your config file is not missing any lines from default/sample config or there are no errors in your config like syntax errors or missing values.

Alternate Configuration Invocation Via Task Panel

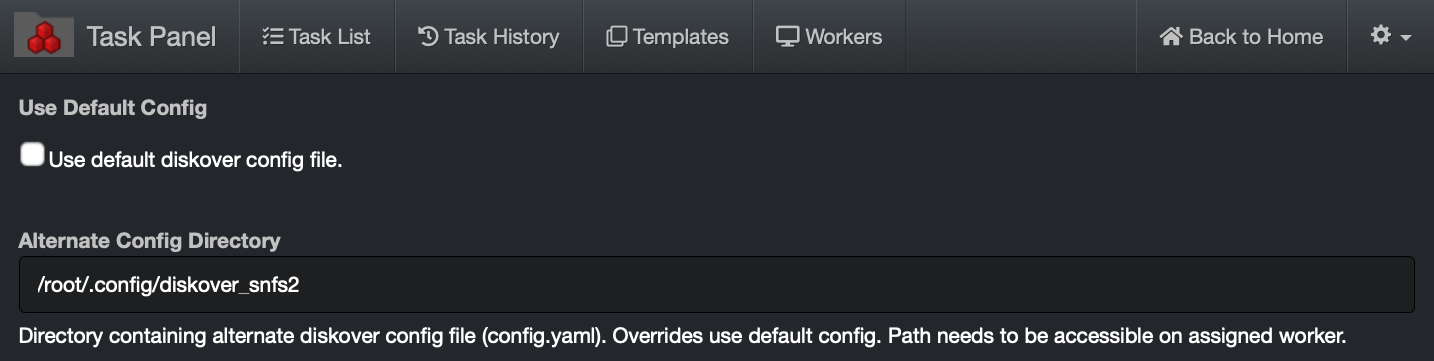

Detailed discussion on configuration and administration of Diskover Task Management functionality can be found in the Task Management Chapter. To maintain context, the following describes how to use alternate configuration files within the Diskover Task Panel.

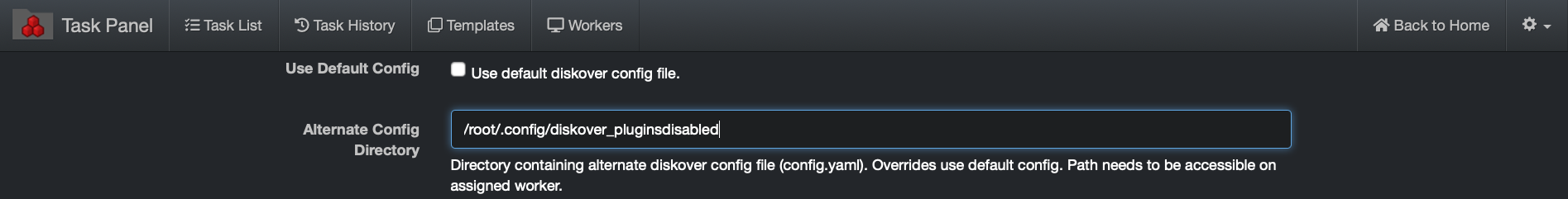

Indexing Tasks

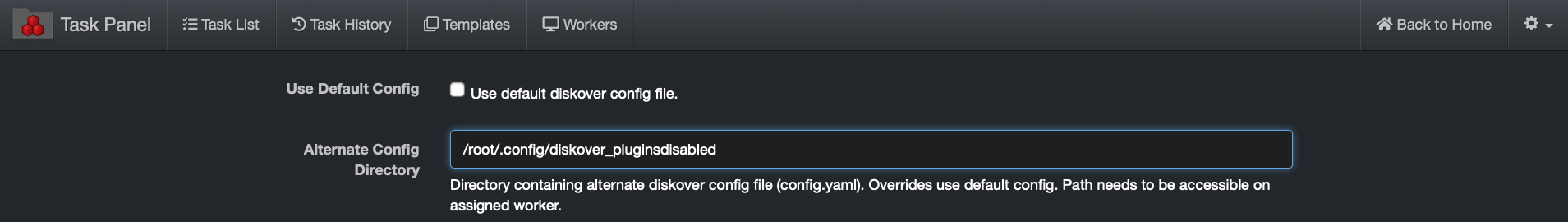

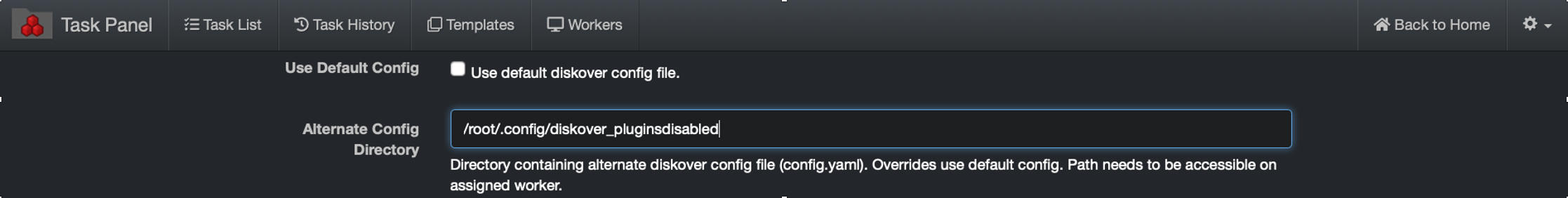

🔴 To invoke the use of alternate configuration file for indexing tasks > Uncheck Use default diskover config file and enter alternate configuration directory in Alternate Config Directory dialog box.

Custom Tasks

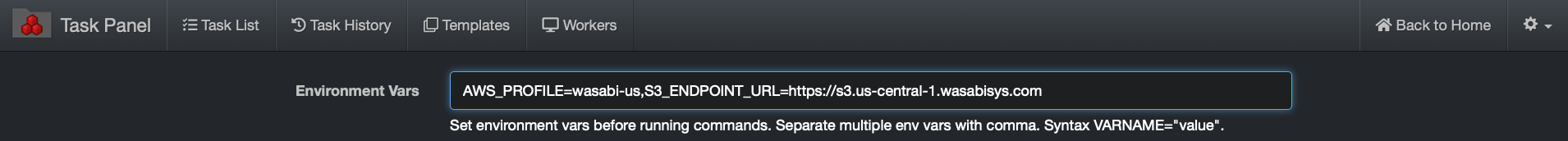

🔴 To invoke the use of alternate configuration file for custom tasks > Enter alternate configuration export command in Environment Vars dialog box.

Task Management

The task panel can be used to schedule building indices or running any type of file action task such as copying files, running duplicate file finding, checking permissions on directories, etc. The task panel is a swiss-army knife for data management.

Distributed Architecture and Management

Diskover has a distributed task system where workers can be distributed among many resources. For each resource providing a task worker, services will need to have diskoverd installed. Please refer to instructions on how to set up the the diskoverd task service in the Diskover Installation Guide - Setting Up Diskover Task Worker Daemon. This section will describe setting up both indexing and custom tasks within the Diskover-Web Task Panel.

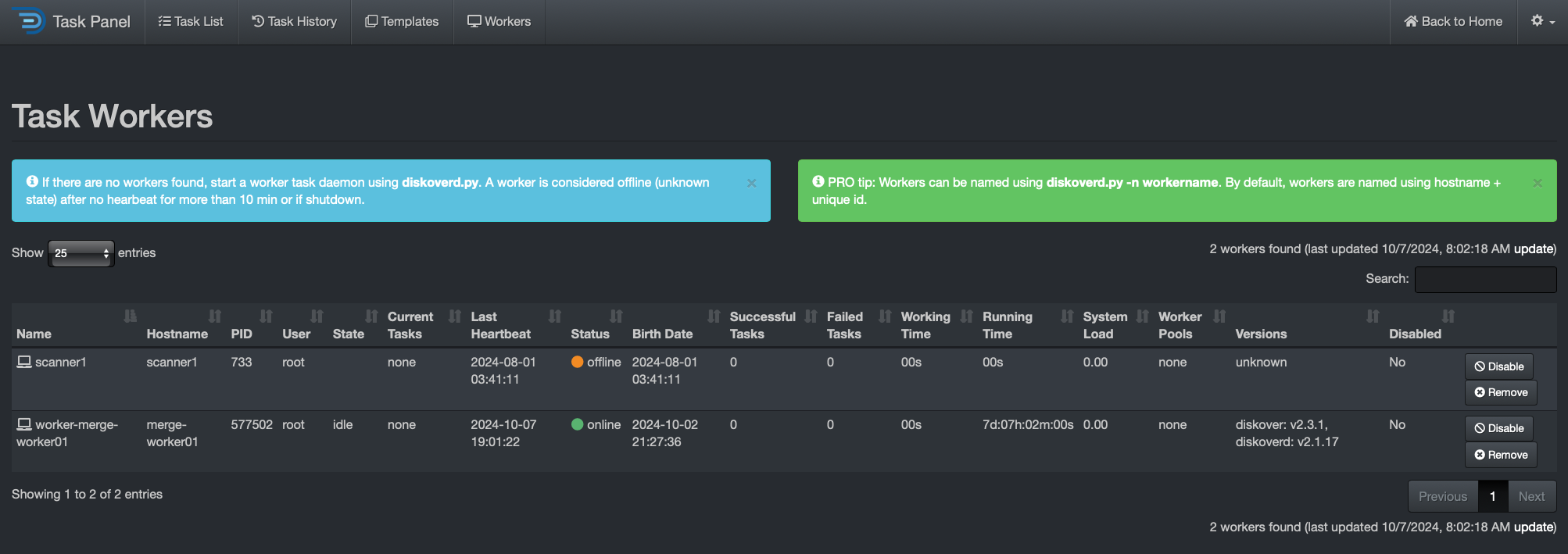

Validate Task Management System has Task Worker(s)

🔴 Open the Task Panel within the Diskover-Web user interface > gear icon > Task Panel and go to Workers tab.

🔴 Ensure the presence of at least one online task worker under Status column.

Managing Diskover Tasks via the Task Panel

The following will illustrate how to create basic indexing and custom tasks via the Diskover Task Panel.

Index Tasks

A default indexing task is provided in the task panel. The configuration of indexing tasks is different for Posix File Systems and S3 based object storage, the following will illustrate setting up basic indexing tasks for each.

Posix File System Indexing Task

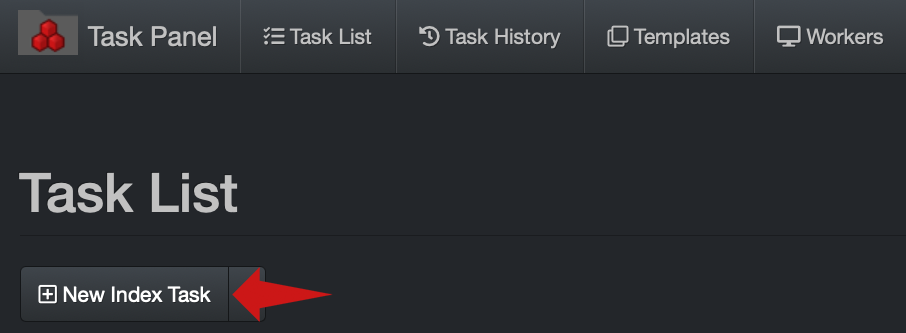

🔴 Select New Index Task from > Task Panel > Task List tab:

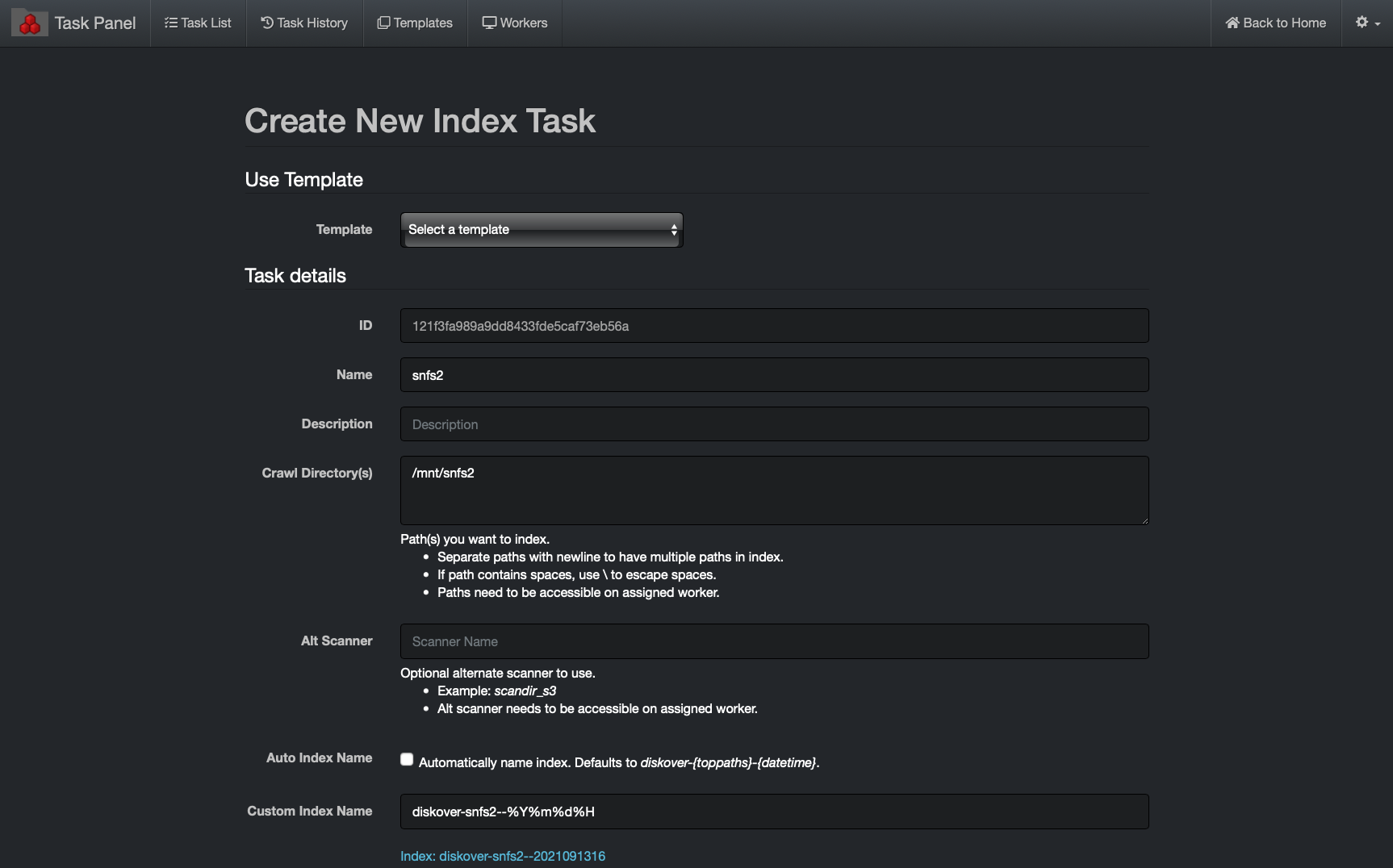

The following Create New Index Task dialog box will appear. Configure as follow:

🔴 Name: index-volumename (where volumename is the name of volume, in this example snfs2)

🔴 Crawl Directory(s): /mnt/volumedir (where volumedir is the volume mountpoint, in this example /mnt/snfs2)

Note: Paths are case sensitive and must exist on the indexing task worker host. For Windows task worker, set the crawl directory to for example

H:\\SomefolderorC:\\using double backslashes (escaped) or for UNC paths use\\\\UNC\\share.

🔴 Auto Index Name: unselect box

🔴 Custom Index Name: diskover-volumename-%Y%m%d%H

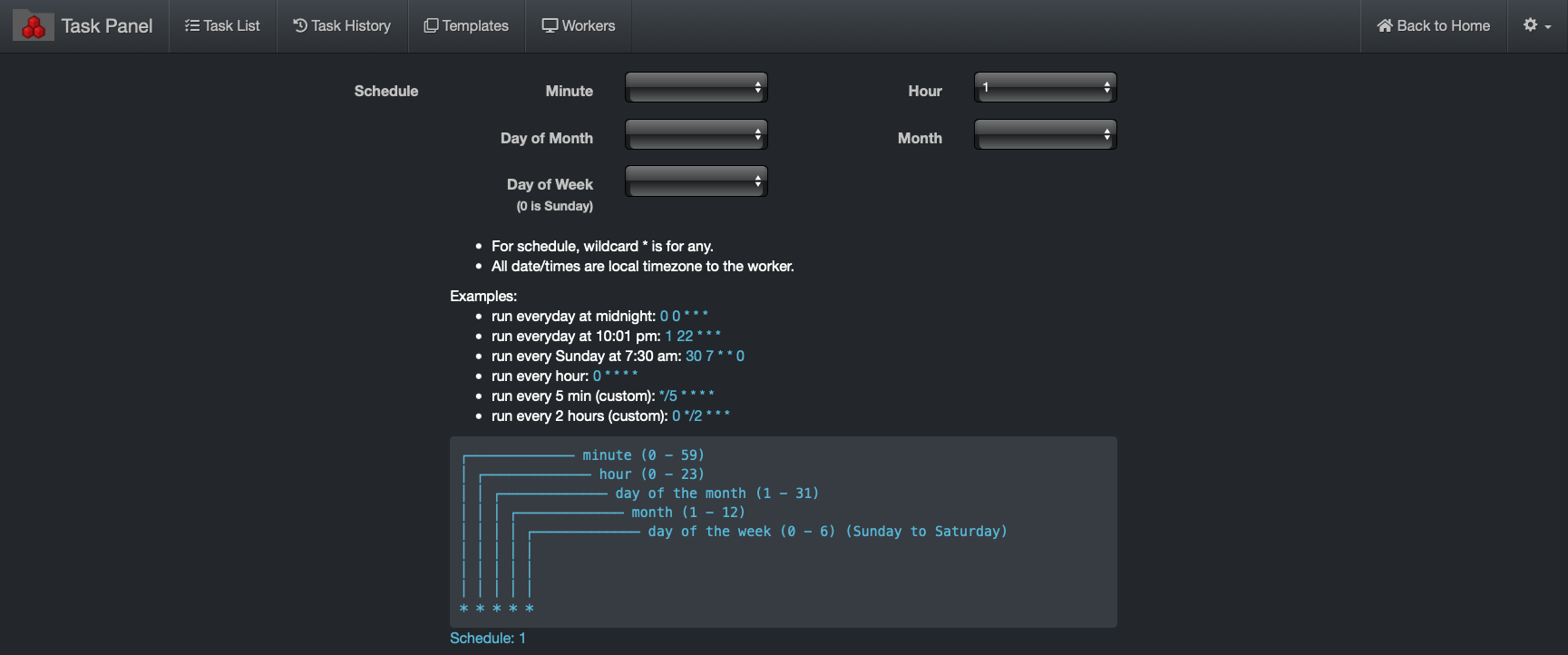

🔴 A schedule is required to create the indexing task. The example below > Hour > 1 will run the indexing task every day at 1:00 am.

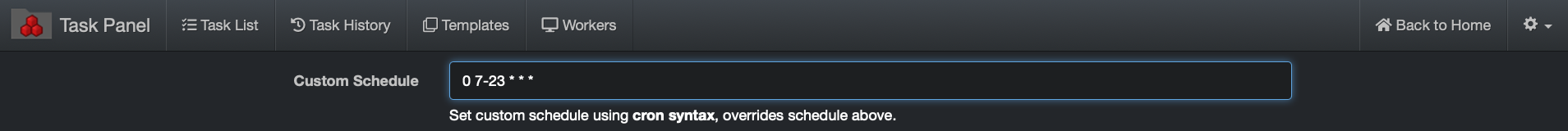

🔴 To use a custom schedule to set the volume to index every hour from 7 am to 11pm for example, enter the following in the Custom Schedule box:

0 7-23 * * *

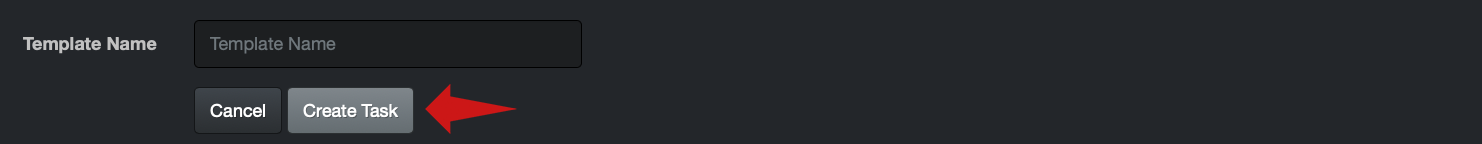

🔴 Then select Create Task:

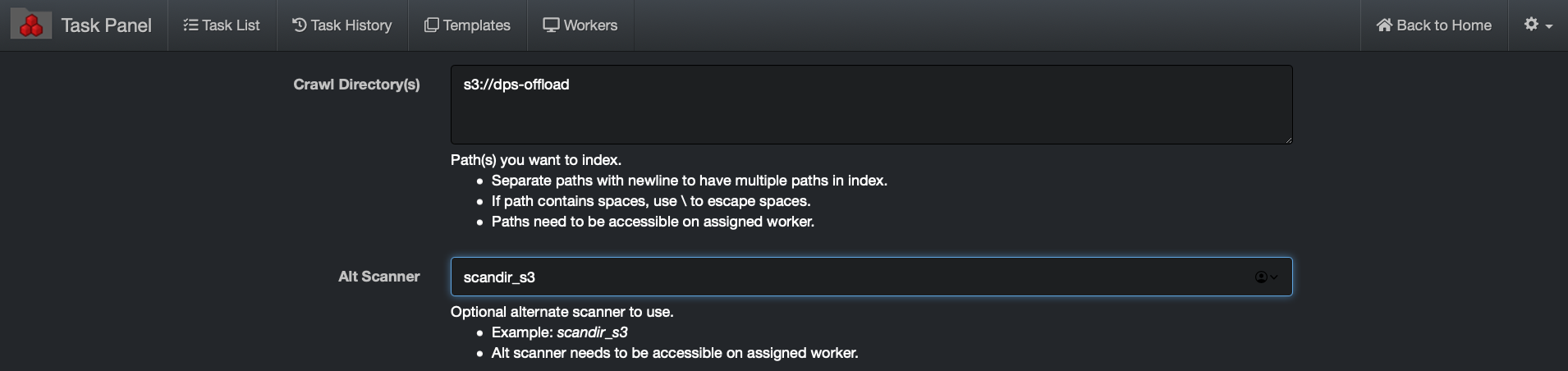

S3 Bucket Indexing Task

Indexing tasks for S3 buckets are slightly different than Posix File systems, the following outlines the configuration differences required in the Create New Index Task in the Task Panel.

Configure the following differences for indexing S3 buckets:

🔴 Crawl Directory(s): s3://bucketname

Where bucketname is the actual name of the S3 bucket desired for indexing, in example below, the bucket name is dps-offload:

🔴 If the media info plugin is enabled in the default config.yaml file, then configure the following to disable the media info plugin for S3 based storage as described in Task Configuration Files chapter.

Non S3 Bucket Indexing Task

Indexing tasks for non-AWS S3 buckets is slightly different than the previous section. The following outlines the configuration differences required for alternate credentials and endpoints.

In addition, you need to configure the Environment Vars for non-AWS S3 buckets:

🔴 Where profile is the name of desired_profile, as found in /root/.aws/credentials (where desired_profile in this example is wasabi-us)

🔴 Where alternate_endpoint.com is the URL of the S3 bucket (where alternate_endpoint.com in this example is https://s3.us-central-1.wasabisys.com)

AWS_PROFILE=profile,S3_ENDPOINT_URL=https://alternate_endpoint.com

Diskover Plugins

Diskover is designed for extensibility. The open-source architecture is designed to promote extensibility via plugins.

Plugins Installation and Config Files Location

There are two invocation methods for plugins; 1) plugin executed at time of index, 2) plugin executed post index as CRON like tasks.

Diskover config file are located in:

- Linux:

~/.config/diskover/config.yaml - Windows:

%APPDATA%\diskover\config.yaml - MacOS:

~/Library/Application Support/diskover/config.yaml

The default configs are located in configs_sample/. There are separate configs for diskover autotag, dupes-finder, etc. They are default/sample configs and need to be copied to the appropriate directory based on the OS.

For example, in Linux the config files are in ~/.config/<appName>/config.yaml. Each config file has a setting appName that matches the directory name where the config file is located. For Diskover dupes-finder for example, this would be ~/.config/diskover_dupesfinder/config.yaml.

Autoclean Plugin

The autoclean plugin is designed to move, copy, delete, rename or run custom commands on files and/or directories based on a set of highly configurable criteria. Any Elasticsearch query (tags, age, size, path, filename, etc.) can be used for the criteria providing very granular actions.

With the use of tags, the autoclean plugin can be used to implement a RACI model or approval process for archive and deletion (approved_archive, approved_delete, etc.) tag application. The plugin criteria can then be set to meet desired set of tags (times, etc.) to invoke action.

🔴 Check that you have the config file in ~/.config/diskover_autoclean/config.yaml, if not, copy from default config folder in configs_sample/diskover_autoclean/config.yaml.

🔴 The autoclean plugin runs post as scheduled job operating on completed indices, to enable:

vim /root/.config/diskover_autoclean/config.yaml

🔴 Configure desired rules:

- Query can be any valid Elasticsearch query using query string query.

- Action can be: delete, rename, move, copy or custom. Custom can be used to run a command or script.

Note: When using custom action,

custocmdvalue is required. The full file/directory path is passed asargtocustomcmd.

Example using custom action:

Set action to custom and specifiy customcmd, in this example we are using a bash script:

dirs: [

{

'query': 'tags:archive AND type:directory',

'action': 'custom',

'customcmd': './scripts/autoclean_rsync_dir.sh',

'renametext': '',

'movedir': '',

'copydir': '',

'checktimes': ['ctime', 'mtime'],

'tags': ['autocleaned', 'custommove']

}

]

Create bash script and make it executable for customcmd:

touch autoclean_rsync_dir.sh

chmod +x autoclean_rsync_dir.sh

vim autoclean_rsync_dir.sh

#!/bin/bash

#

# Sync directory using Linux rsync command.

#

# Note: We don't need to check if source directory exists since autoclean

# takes care of that before calling this script.

#

# get source path from arg 1

SRC_PATH=$1

# set destination directory

DST_PATH=/mnt/nas2/archive/

# make destination directory if it does not exist

if [ ! -d "$DST_PATH" ]; then

mkdir -p "$DST_PATH"

# check if mkdir worked

if [ $? -gt 0 ]; then

>&2 echo ERROR could not make destination directory $DST_PATH !

exit 1

fi

fi

# use rsync command to sync directory

echo Syncing "$SRC_PATH" to "$DST_PATH" ...

rsync -avz "$SRC_PATH" "$DST_PATH"

# check if rsync worked

if [ $? -gt 0 ]; then

>&2 echo ERROR syncing directory!

exit 1

else

echo Done.

fi

exit 0

Run Autoclean from cli

🔴 Run autoclean and get help to see cli options:

cd /opt/diskover/plugins_postindex

python3 diskover_autoclean.py -h

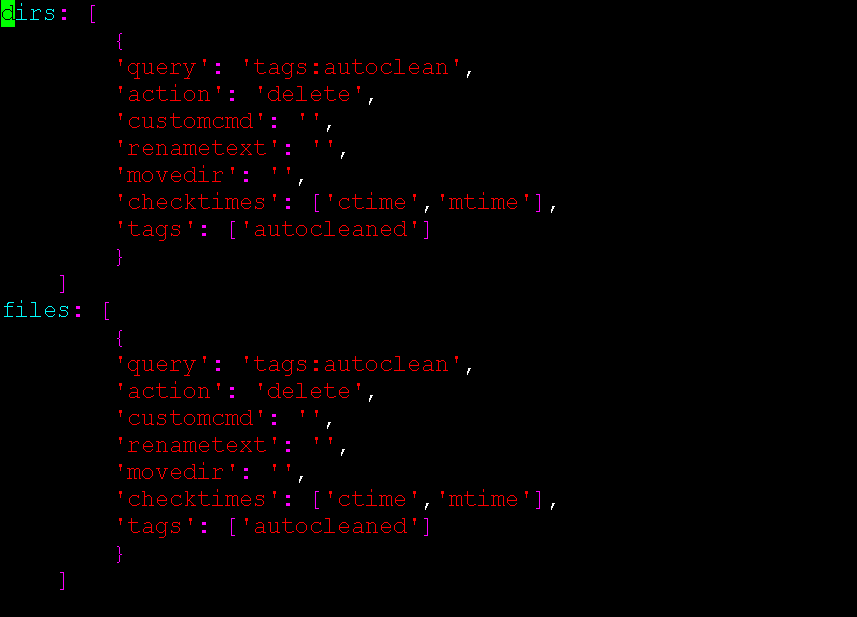

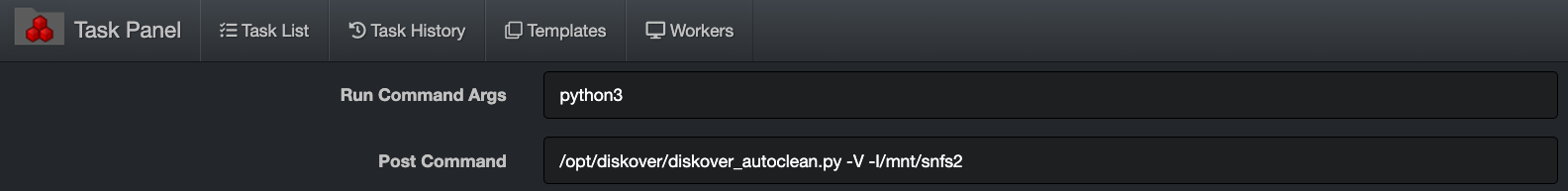

Add Autoclean Task to Diskover-web

🔴 Create custom task in Task Panel to run on scheduled basis.

🔴 Set the following:

- Run Command Args: python3

- Post Command: /opt/diskover/diskover-autoclean.py -V -l /mnt/snfs2

🔴 Change /mnt/snfs2 to the desired top_level_path, for example, if the desired volume to index is isilon, then the path would be /mnt/isilon

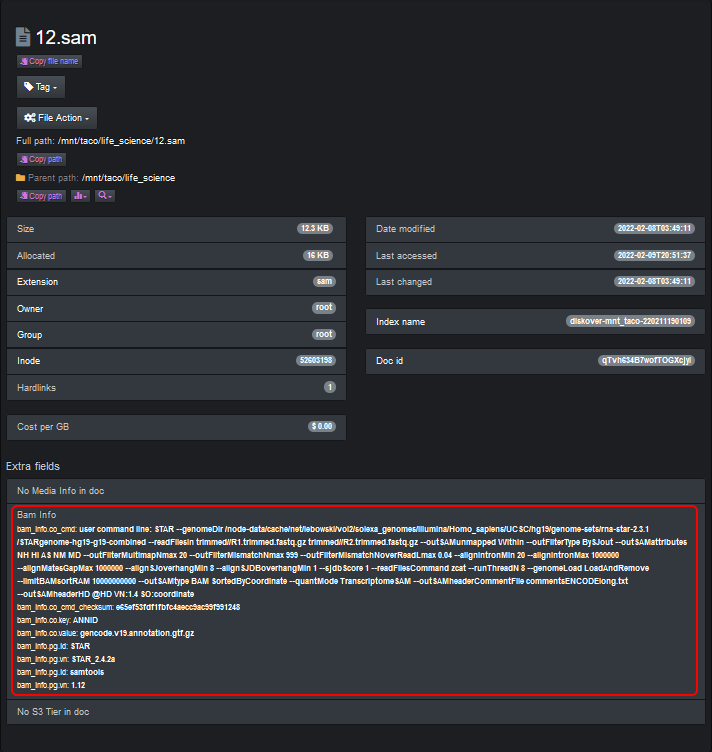

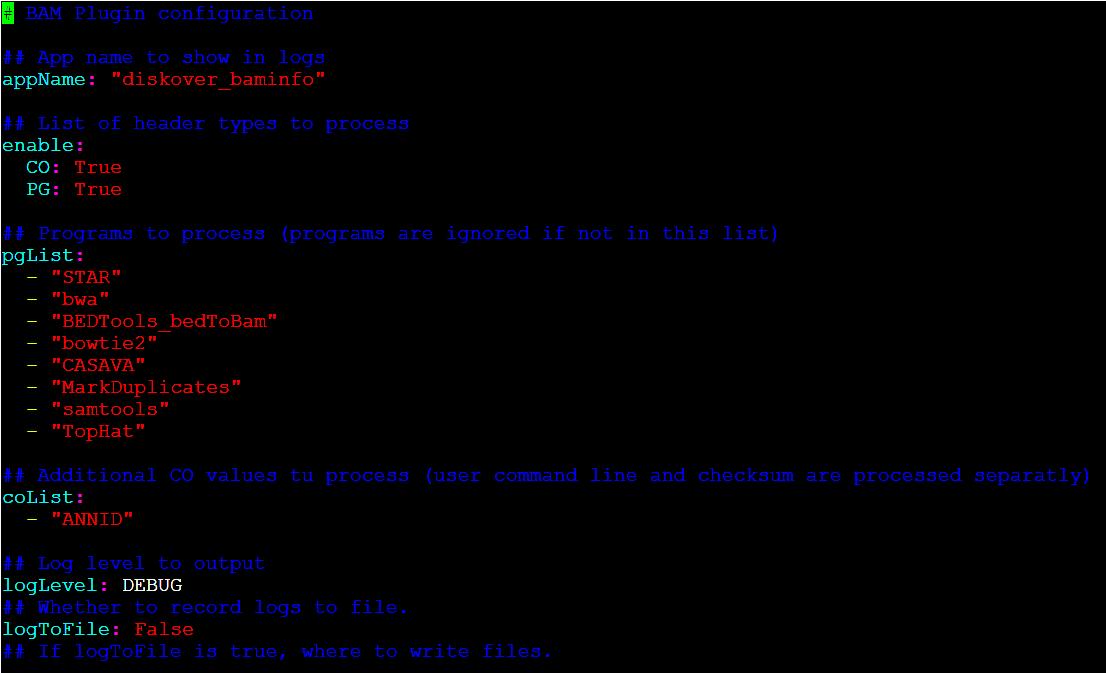

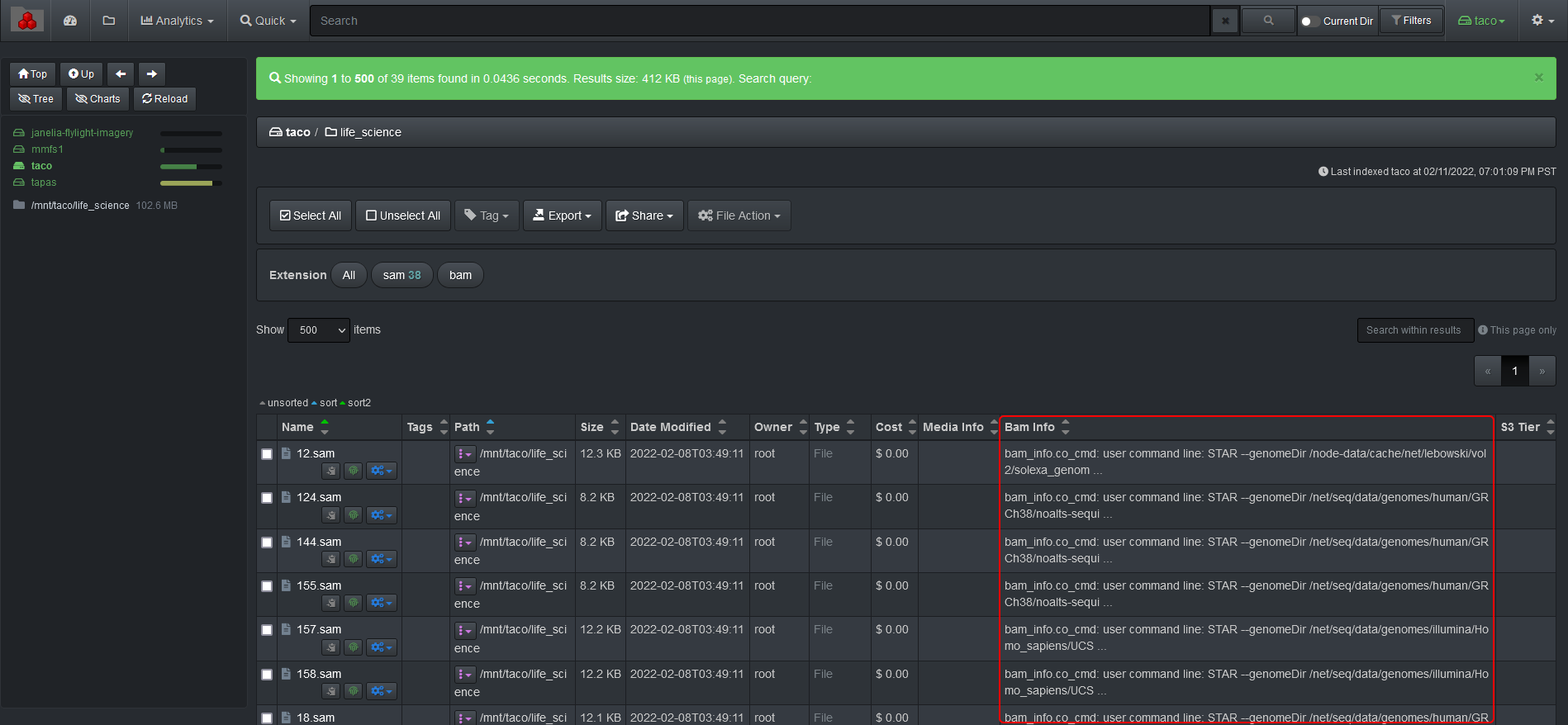

BAM Info Harvest Plugin

🍿 Watch Demo Video

The BAM info harvest plugin is designed to provide BAM metadata attributes about a file without granting the Diskover user any read/write file system access. The BAM info plugin enables additional metadata for the SAM and BAM file formats to be harvested at time of index, and are therefore searchable within Diskover.

The specification for the SAM file format can be found here:

https://samtools.github.io/hts-specs/SAMv1.pdf

The BAM info plugin uses the Python pysam to harvest attributes about the BAM and SAM files:

https://pysam.readthedocs.io/en/latest/

New indices will use the plugin and any SAM or BAM file will get additional info added to the Elasticsearch index’s bam_info field.

The attributes provide the ability to view storage and file system content from a workflow perspective, for example, all the frame rates on any given storage.

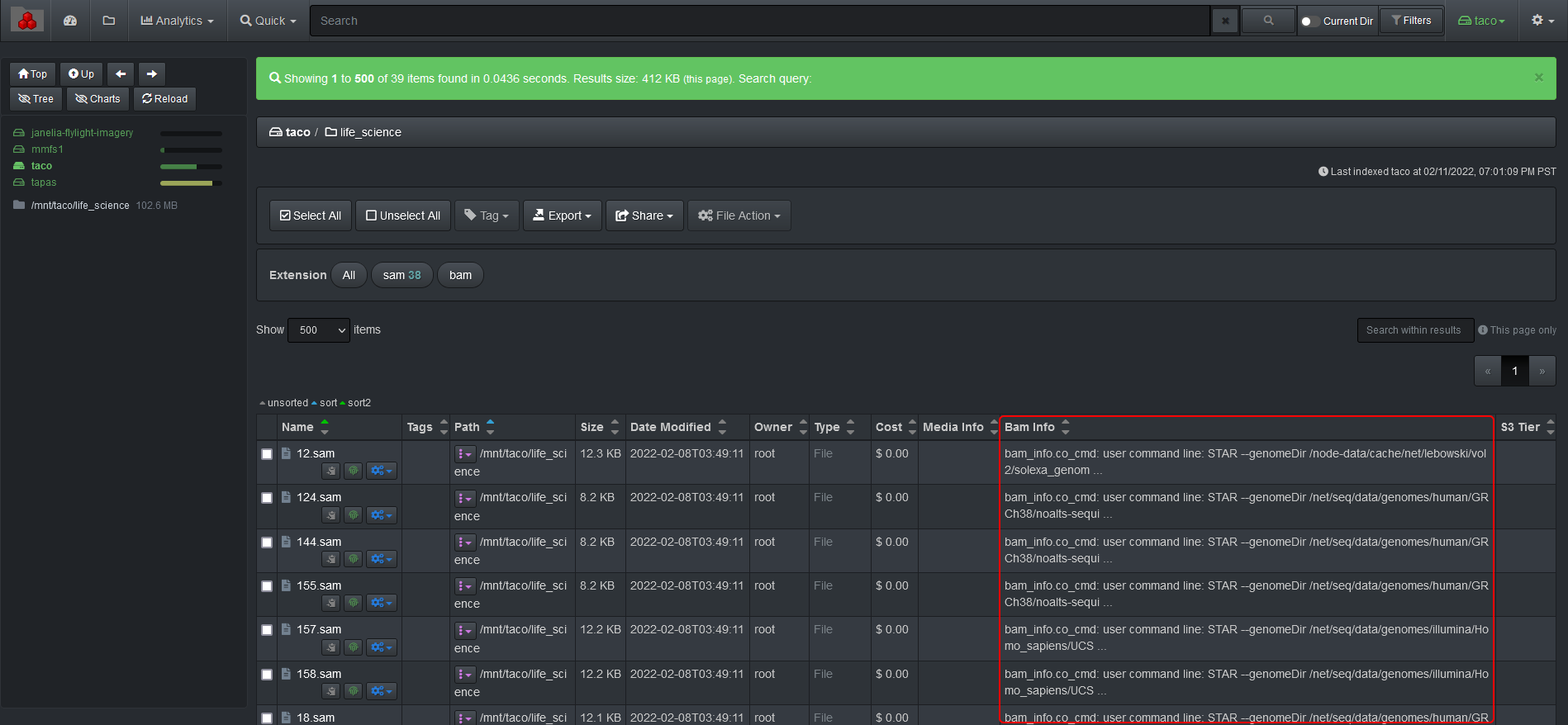

You can view and search on BAM info attributes in Diskover-Web since it will store it in a new field for video files, the field name is bam_info.

The BAM info fields are shown as additional searchable attributes to each file. You can view detailed attributes when opening up a file in Diskover.

Install BAM Info Dependencies

🔴 Copy the BAM info content in the install location:

cp __init__.py /opt/diskover/plugins/baminfo/

cp README.rnd /opt/diskover/plugins/baminfo/

cp requirements.txt /opt/diskover/plugins/baminfo/

mkdir /root/.config/diskover_baminfo/

cp config.yaml /root/.config/diskover_baminfo/

🔴 Edit the BAM info plugin to specify programs used within the software pipeline, in the example below the following programs are used:

- "STAR"

- "bwa"

- "BEDTools_bedToBam"

- "bowtie2"

- "CASAVA"

- "MarkDuplicates"

- "samtools"

- "TopHat"

🔴 The BAM info plugin requires the following dependencies on CentOS:

yum install zlib-devel -y

yum install bzip2-devel

yum install xz-devel

cd /opt/diskover/plugins/baminfo/

pip3 install -r requirements.txt

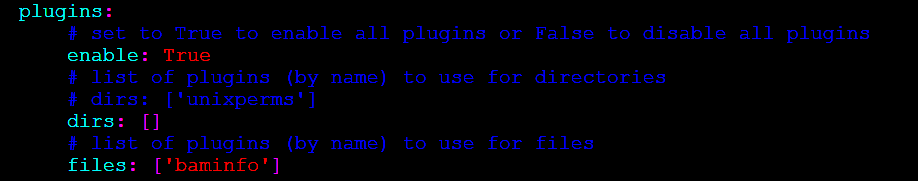

🔴 The BAM info plugin runs as part of the indexing process, to enable:

vim /root/.config/diskover/config.yaml

🔴 Set > enable: True

🔴 Set > files: [‘baminfo’]

Note: The BAM info plugin is currently not supported for S3 based object storage. If the BAM info plugin is enabled in the default configuration file, an alternate configuration file must be created where the media info plugin is disabled. The alternate configuration file must be invoked when indexing S3 based volumes:

/root/.config/diskover_pluginsdisabled

BAM Info Field within Diskover-Web

🔴 To display the bam_info fields within Diskover-Web, edit the Contants.php configuration:

vim /var/www/diskover-web/src/diskover/Constants.php

🔴 Add the following under EXTRA_FIELDS:

const EXTRA_FIELDS = [

'Bam Info' => 'bam_info'

];

Search BAM Attributes within Diskover-Web

The BAM attributes can be used in a manual search query by using the BAM field name bam_info. The structure is as follow:

bam_info.<key>:<value>

For example:

bam_info.pg.id:STAR

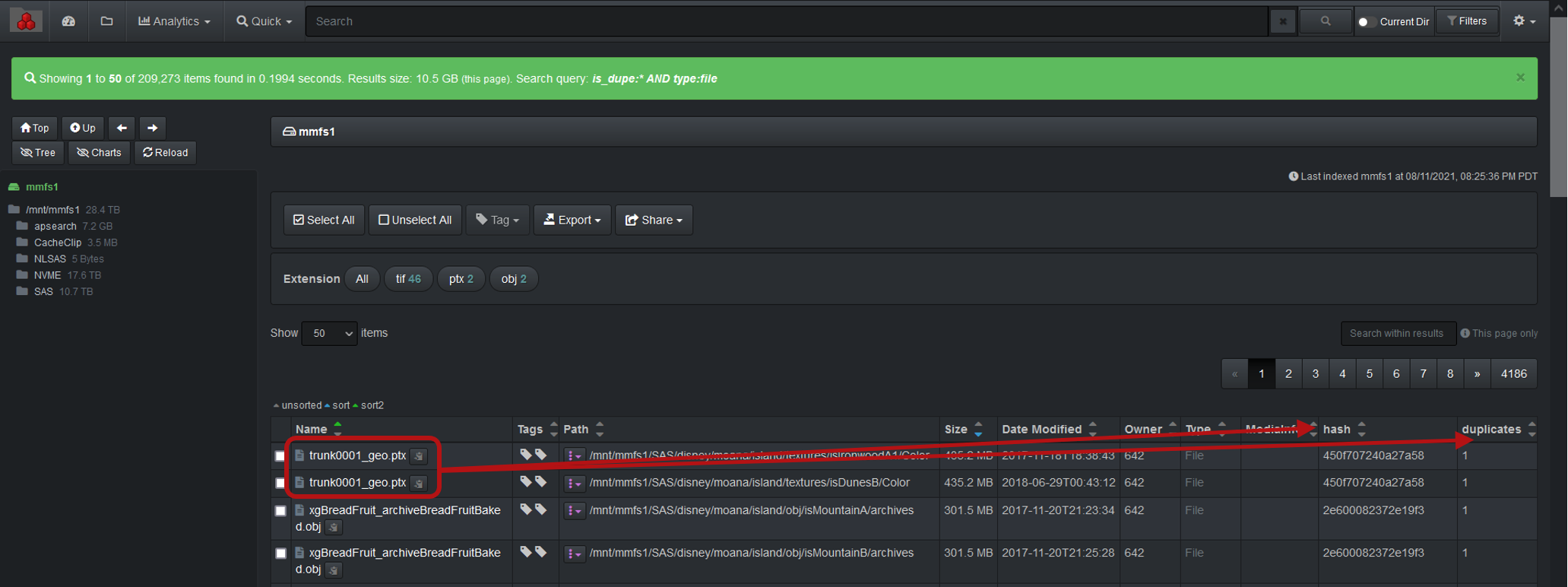

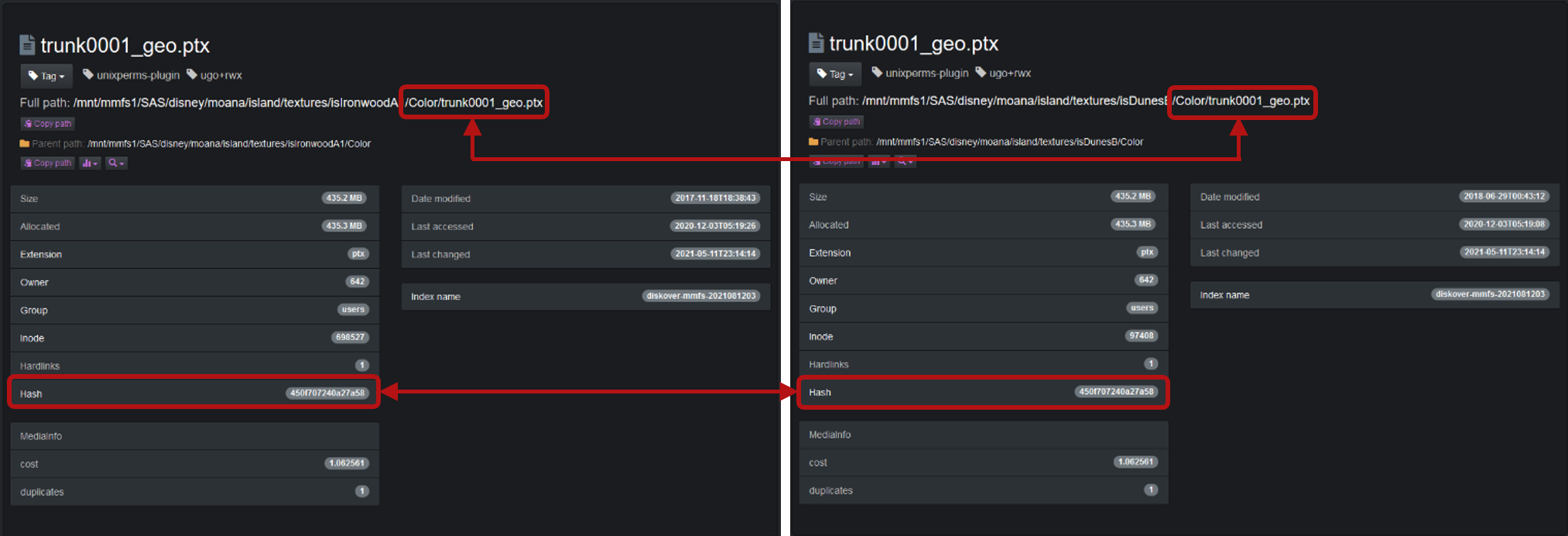

Duplicates Finder Plugin

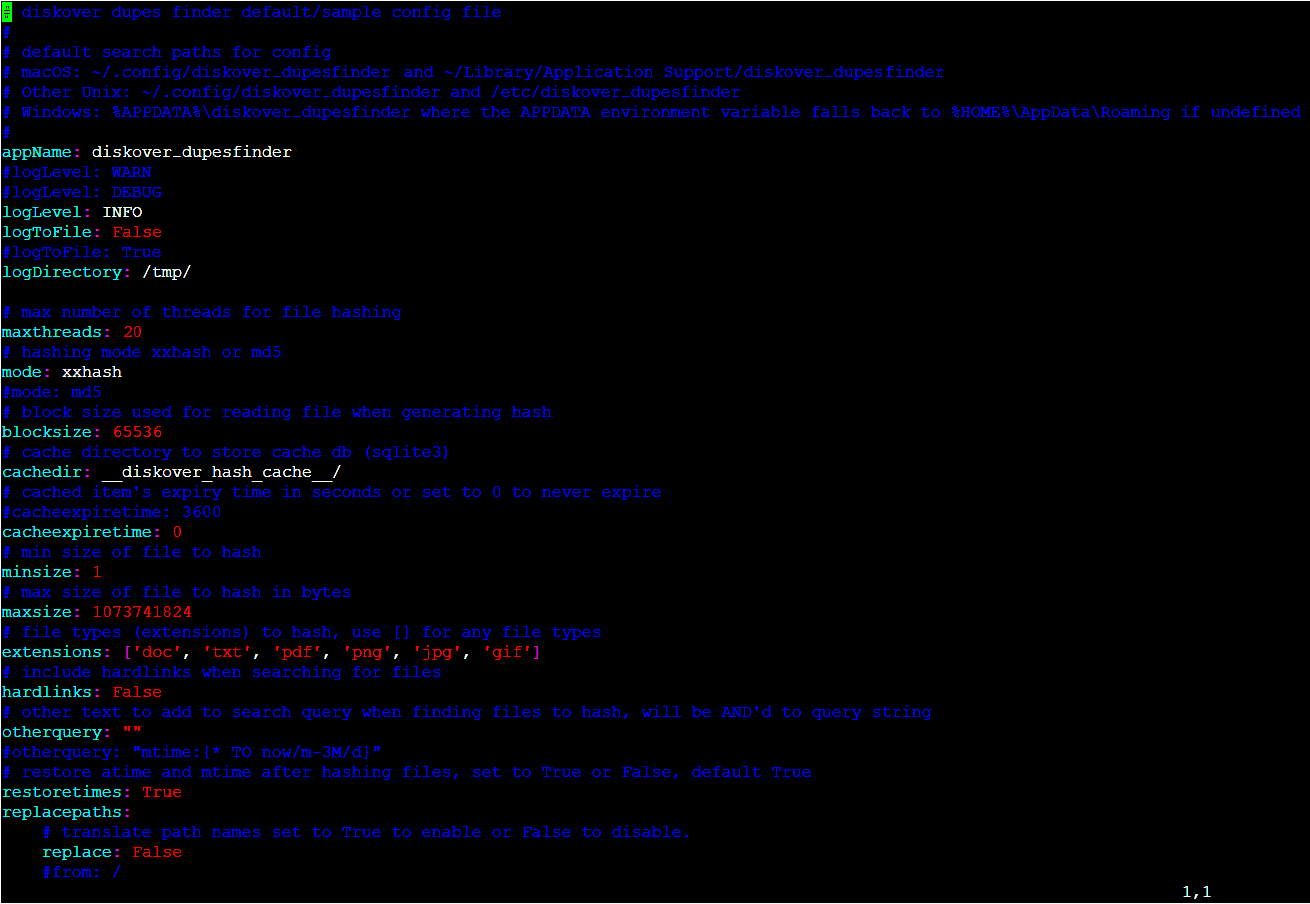

The Diskover duplicates finder plugin (dupes-finder) leverages post processing of index to check for duplicates, across all file systems, or subset thereof. The plugin supports xxhash, md5, sha1, and sha256 checksums. The plugin is designed for multiple use cases:

- To check for duplicate files across a single or all file systems (single or multiple indices) and indexing the file docs in index that are dupes

- Calculating file checksums/hashes for all duplicate files or all files and indexing hashes to file docs in index

Calculating file hash checksums is an expensive CPU/disk operation. The dupes-finder provides configuration options to control what files in the index get a hash calculated and marked as a dupe (is_dupe field in file docs set to true). In addition, the dupes-finder provides additional optimization mechanisms:

- The diskover-cache sqlite3 db can be used to store file hashes (-u cli option).

- An existing index can be used to lookup file hashes (-U cli option).

- The Elasticsearch fields for file type that get updated are

hashandis_dupe.hashis an object field type and each hash type is stored in a sub-field:hash.xxhash,hash.md5,hash.sha1,hash.sha256.is_dupeis a boolean field and only gets added and set totrueif the file is a duplicate file.

🔴 To use the default hashing mode xxhash, you will first need to install the xxhash Python module. Post indexing plugins are located in plugins_postindex/ directory.

pip3 install xxhash

The dupes-finder can also be used to add file hashes to all the files in the index, not just the duplicates found.

The duplicates plugin will store hash values that can be stored only for duplicates or for all files.

🔴 Check that you have the config file in /root/.config/diskover_dupesfinder/config.yaml, if not, copy from the default config folder in configs_sample/diskover_dupesfinder/config.yaml.

mkdir /root/.config/diskover_dupesfinder

cd /opt/diskover/configs_sample/diskover_dupes_finder

cp config.yaml /root/.config/diskover_dupesfinder/

🔴 The dupes-finder plugin runs post index and operates on completed indices as a scheduled job or on demand job to provide duplicates analysis on completed indices, to enable:

vim /root/.config/diskover_dupesfinder/config.yaml

🔴 At minimum configure the following:

- mode: desired checksum xxhash, md5, sha1, or sha256, can also be set using -m cli option

- minsize and maxsize: minimum and maximum size (in bytes) of files to hash

- extensions: desired file extensions to check, for all files use

[]

🔴 Some additional settings:

- maxthreads: maximum number of threads to use for file hashing, leave empty/blank to auto-set based on number of cpu cores

- otherquery: additional Elasticsearch query when searching an index for which files to hash

- restoretimes: restore atime/mtime file times after hashing file

- replacepaths: for translating paths from index path to real path, example translating

/to/mnt/. This is required if path translations were done in index or needing to convert to a Windows path.

🔴 To run the duplicates check via command line:

cd /opt/diskover/plugins_postindex

python3 diskover_dupesfinder.py diskover-<indexname>

🔴 To run the duplicates check and cache duplicate file hashes in sqlite cache db:

python3 diskover_dupesfinder.py diskover-<indexname> -u

🔴 To run the duplicates check and cache all file hashes in sqlite cache db, and hash all files (not just dupe files):

python3 diskover_dupesfinder.py diskover-<indexname> -u -a

🔴 To run the duplicates check and cache all file hashes in sqlite cache db, use hash mode sha1, and save all dupe files to csv:

python3 diskover_dupesfinder.py diskover-<indexname> -u -m sha1 -c

🔴 To run the dupes finder for multiple completed indices and compare dupes between indices:

python3 diskover-dupesfinder.py diskover-<indexname1> diskover-<indexname2>

🔴 Get help and see all cli options:

python3 diskover-dupesfinder.py -h

Index ES Query Report Plugin

The index Elasticsearch (ES) query report plugin is designed to search for es query string in an existing completed index and create a csv report with the ability to to send the report to one or more email recipients.

🔴 The index ES query report plugin runs post index and operates on completed indices as a scheduled job or on demand job to search for docs in an index.

🔴 Copy default/sample config:

mkdir /root/.config/diskover_esqueryreport

cd /opt/diskover

cp configs_sample/diskover_esqueryreport/config.yaml /root/.config/diskover_esqueryreport/

🔴 Edit the ES query report config and edit for your environment:

vim /root/.config/diskover_esqueryreport/config.yaml

Note: By default report csv files are saved in

/tmp folder

🔴 To run es query report plugin via command line:

cd /opt/diskover/plugins_postindex

python3 diskover-esqueryreport.py -q "es query string" indexname

🔴 To get help and see all cli options:

python3 diskover-esqueryreport.py -h

Index Illegal File Name Plugin

🍿 Watch Demo Video

The index illegal file name plugin is designed to search for illegal file names and directory names in an existing completed index.

🔴 The index illegal file name plugin runs post index and operates on completed indices as a scheduled job or on demand job to search for and tag docs in an index.

🔴 Copy default/sample config:

mkdir /root/.config/diskover_illegalfilename

cd /opt/diskover

cp configs_sample/diskover_illegalfilename/config.yaml /root/.config/diskover_illegalfilename/

🔴 Edit the illegal file name config and edit defaults if needed:

vim /root/.config/diskover_illegalfilename/config.yaml

Note: By default any illegal file names are tagged with illegalname and any long file names are tagged with longname

🔴 To run illegal file name plugin via command line:

cd /opt/diskover/plugins_postindex

python3 diskover-illegalfilename.py indexname

🔴 To get help and see all cli options:

python3 diskover-illegalfilename.py -h

Index Auto Tag Plugin

The index auto tag plugin is designed to auto tag an existing completed index. Auto-tagging can also be done during crawl time by adding tag rules in the diskover config file.

🔴 The index auto tag plugin runs post index and operates on completed indices as a scheduled job or on demand job to auto tag docs in an index.

🔴 Copy default/sample config:

mkdir /root/.config/diskover_autotag

cd /opt/diskover

cp configs_sample/diskover_autotag/config.yaml /root/.config/diskover_autotag/

🔴 Edit the autotag config and set the directory and file tag rules:

vim /root/.config/diskover_autotag/config.yaml

🔴 To run auto tag via command line:

cd /opt/diskover/plugins_postindex

python3 diskover-autotag.py indexname

🔴 To get help and see all cli options:

python3 diskover-autotag.py -h

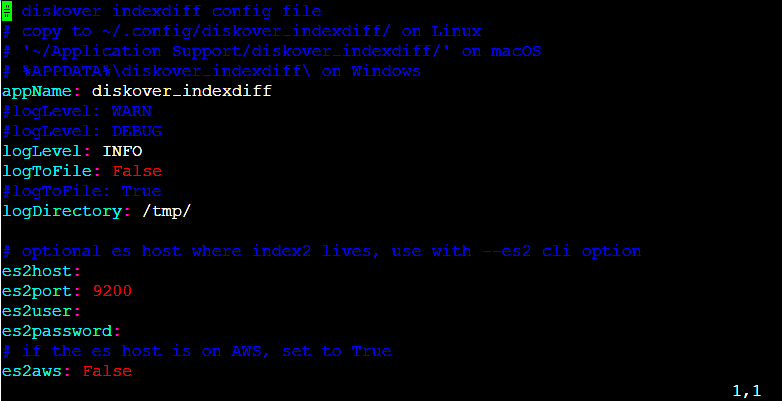

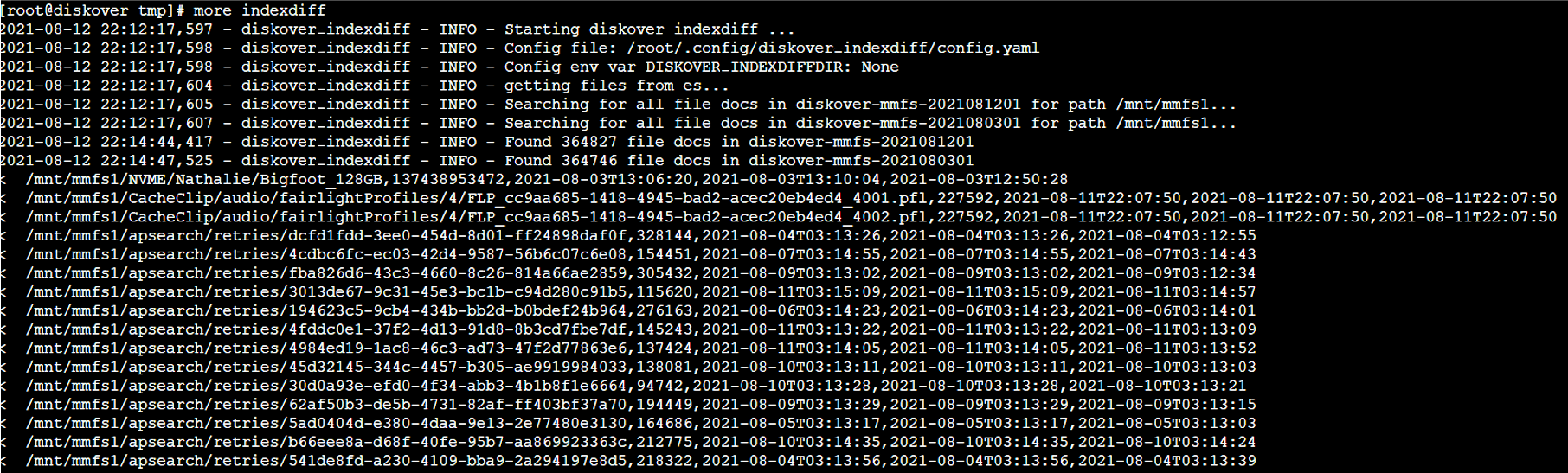

Index Differential Plugin

The index differential plugin is designed to provide a list of file differences between two indices (or points in time). The differential list can be used to feed synchronization tools (i.e. rsync) or identify deltas where two repositories should be identical. Outputs a CSV file containing the diffs between the two indices. It can also be used to compare checksums/hashes of files between two indices.

🔴 The index differential plugin runs post index and operates on completed indices as a scheduled job or on demand job to provide differences between two indices.

🔴 Copy default/sample config:

mkdir /root/.config/diskover_indexdiff

cd /opt/diskover

cp configs_sample/diskover_indexdiff/config.yaml /root/.config/diskover_indexdiff/

vim /root/.config/diskover_indexdiff/config.yaml

🔴 No configuration changes are usually required to the configuration file unless comparison involves indices from different Elasticsearch clusters, or changing default settings.

🔴 To run the index diff via command line and compare two indices with same top paths and output all diffs to csv file:

cd /opt/diskover/plugins_postindex

python3 diskover-indexdiff.py -i indexname1 -I indexname2 -d /mnt/stor1/foo

🔴 Compare two indices with different top paths and also compare file sizes (not just file names):

python3 diskover-indexdiff.py -i indexname1 -I indexname2 -d /mnt/stor1/foo -D /mnt/stor2/foo -s

🔴 Compare two indices and compare xxhash hash of files, tag indexname1 with diffs, and don't create csv file of diffs:

python3 diskover-indexdiff.py -i indexname1 -I indexname2 -d /mnt/stor1/foo -D /mnt/stor2/foo -c xxhash --tagindex --nocsv

🔴 To get help and see all cli options:

python3 diskover-indexdiff.py -h

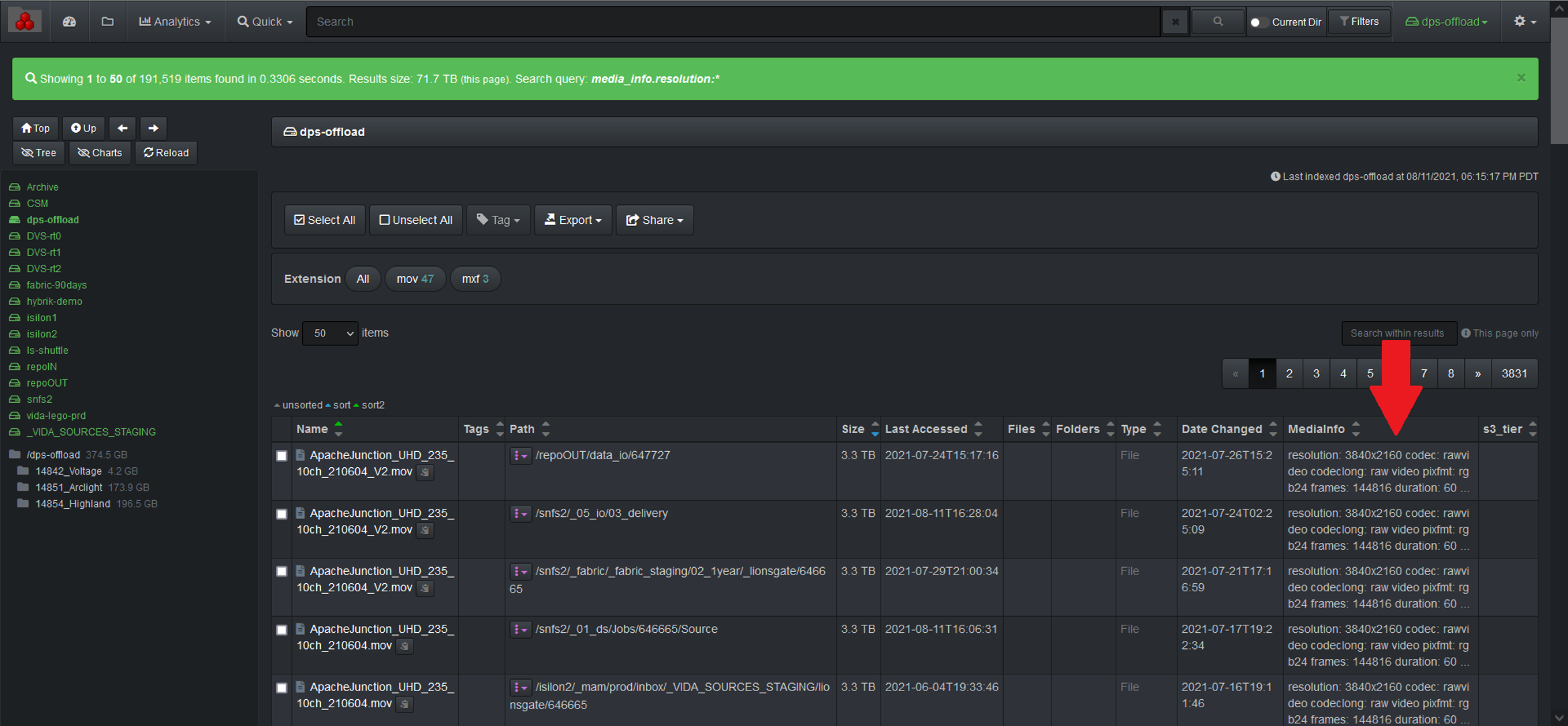

Media Info Harvest Plugin

The media info harvest plugin is designed to provide media metadata attributes about a file without granting the Diskover user any read/write file system access.

The media info plugin enables additional metadata for video files to be harvested at time of index or post-index. The media info plugin uses ffmpeg/ffprobe to harvest attributes about the media file.

New indices will use the plugin and any video file will get additional media info added to the Elasticsearch index’s media_info field.

The attributes provide the ability to view storage and file system content from a workflow perspective, for example all the frame rates on any given storage.

You can view and search on media info attributes in Diskover-Web since it will store it in a new field for video files, the field name is media_info.

Install Media Info Dependencies

🔴 The media info plugin uses the ffmpeg https://www.ffmpeg.org/ open-source package to harvest media attributes for media file types.

Install ffmpeg on Centos 7.x:

yum install epel-release

yum localinstall --nogpgcheck https://download1.rpmfusion.org/free/el/rpmfusion-free-release-7.noarch.rpm

yum install ffmpeg ffmpeg-devel

ffmpeg -version

Install ffmpeg on Centos 8.x:

dnf install epel-release dnf-utils

yum-config-manager --set-enabled PowerTools

yum-config-manager --add-repo=https://negativo17.org/repos/epel-multimedia.repo

dnf install ffmpeg

ffmpeg -version

Install ffmpeg on Ubuntu 18.x/20.x:

apt update

apt install ffmpeg

ffmpeg -version

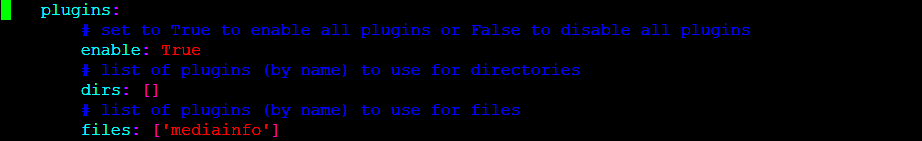

🔴 The media info plugin runs as part of the indexing process. To enable:

vim /root/.config/diskover/config.yaml

🔴 enable: set to True

🔴 files: [‘mediainfo’]

🔴 Copy the default/sample media info config file:

mkdir /root/.config/diskover_mediainfo_plugin

cp /opt/diskover/configs_sample/diskover_mediainfo_plugin/config.yaml /root/.config/diskover_mediainfo_plugin

🔴 Edit the media info config file:

vim /root/.config/diskover_mediainfo_plugin/config.yaml

Note: The media info plugin is currently not supported for S3 based object storage. If the media info plugin is enabled in the default configuration file, an alternate configuration file must be created where the media info plugin is disabled. The alternate configuration file must be invoked when indexing S3 based volumes.

🔴 For reference, here are all the media info fields that are currently stored in the Elasticsearch index:

mediatext = {

'resolution': str(stream['width']) + 'x' + str(stream['height']) if 'width' in stream and 'height' in stream else None,

'codec': stream['codec_name'] if 'codec_name' in stream else None,

'codeclong': stream['codec_long_name'] if 'codec_long_name' in stream else None,

'codectag': stream['codec_tag_string'] if 'codec_tag_string' in stream else None,

'pixfmt': stream['pix_fmt'] if 'pix_fmt' in stream else None,

'frames': int(stream['nb_frames']) if 'nb_frames' in stream else None,

'duration': duration,

'framerate': framerate,

'bitrate': bitrate

}

🔴 Here is the ffprobe command used:

ffprobe -v quiet -print_format json -show_format -show_streams <file_path>

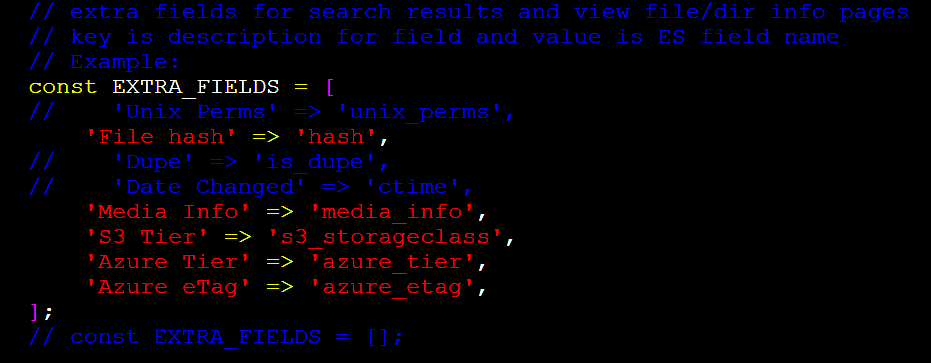

Visibility of the Media Info Field in Diskover UI

Technically at this point, the media info fields should be visible and searchable in the Diskover UI, but if it's not:

🔴 Globally expose that column in the user interface:

cd /var/www/diskover-web/src/diskover/

vi Constants.php

🔴 Uncomment the EXTRA_FIELDS array to look something like this:

Tag Copier Plugin

The tag copier plugin is designed to migrate tags from one index to the next. Generally, these tags are not applied at time of index via autotag functionality, but are applied post index through:

- Manual tag application.

- Plugin tag application (harvest, duplicate hashes, etc.)

🔴 Check that you have the config file in ~/.config/diskover_tagcopier/config.yaml, if not, copy from default config folder in configs_sample/diskover_tagcopier/config.yaml.

🔴 The tag copier runs post as scheduled job or immediately after index process copying tags from previous index to new index. To enable:

vim /root/.config/diskover_tagcopier/config.yaml

🔴 Configure for directories, files, or both.

🔴 Configure any tags to exclude from migration.

Invoke Tag Migration via Command Line

🔴 Tag migration can be invoked via a command line:

cd /opt/diskover

python3 diskover_tagcopier.py diskover-<source_indexname> diskover-<dest_indexname>

🔴 See all cli options:

python3 diskover-tagcopier.py -h

Configure Indexing Tasks to Migrate Tags from Previous Index

🔴 Create post command script to invoke tag roller:

cd /opt/diskover/scripts

cp cp task-postcommands-example.sh task-postcommands.sh

Set the following indexing tasks in Task Panel to migrate tasks from one index to the next:

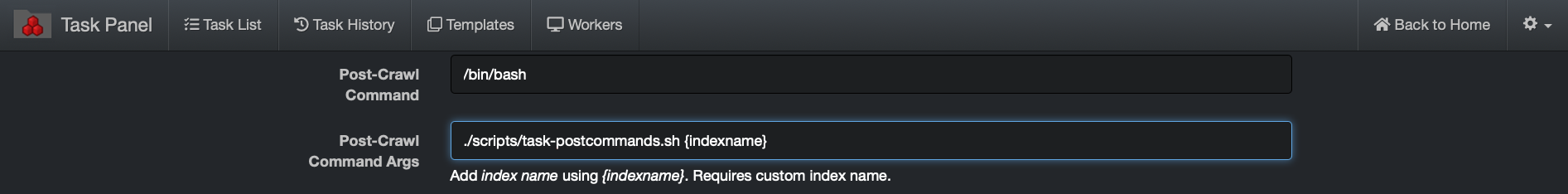

🔴 In the post-crawl command add:

/bin/bash

🔴 In the post-crawl command Args add:

./scripts/task-postcommands.sh {indexname}

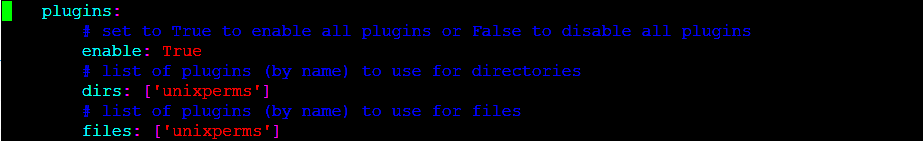

Unix Permissions Plugin

The Unix permissions plugin adds the Unix permissions of each file and directory to the Diskover index at time of indexing. Two tags are added, unixperms-plugin and ugo+rwx, if a file or directory is found with fully open permissions (777 or 666).

🔴 The unixperms runs as part of the indexing process. To enable:

vim /root/.config/diskover/config.yaml

🔴 enable: set to True

🔴 dirs: [‘unixperms’]

🔴 files: [‘unixperms’]

Windows Owner Plugin

The Windows Owner plugin adds the Windows file owner and primary group of each file and directory to the Diskover index at time of indexing. It replaces all docs showing username 0 with the Windows file/directory owner name.

Note: Using this plugin increases scanning time due to name/sid lookups.

🔴 Requirements:

- This plugin works in Windows only

- pywin32 python module, install with pip https://pypi.org/project/pywin32/

🔴 The windows-owner runs as part of the indexing process. To enable edit diskover config:

notepad %APPDATA%\diskover\config.yaml

🔴 enable: set plugins enable to True

🔴 dirs: [‘windows-owner’]

🔴 files: [‘windows-owner’]

plugins:

# set to True to enable all plugins or False to disable all plugins

enable: True

# list of plugins (by name) to use for directories

dirs: ['windows-owner']

# list of plugins (by name) to use for files

files: ['windows-owner']

🔴 There are a few settings at the top of the windows-owner plugin:

-

INC_DOMAIN: include domain in owner/group names, set to True or False -

GET_GROUP: get group info (primary group) as well as owner, set to True or False -

USE_SID: store sid if owner/group lookup returns None, set to True or False

Windows Attributes Plugin

The Windows Attributes plugin adds the Windows file owner, primary group and ACE's of each file and directory to the Diskover index after indexing is complete. It replaces all docs showing owner 0 and group 0 with the Windows file/directory owner name and primary group. It updates owner, group and windacls fields meta data of each file or directory to diskover index after indexing with the Windows owner, primary group and acl info.

Note: The plugin can take a long time to run due to name/sid lookups.

🔴 Requirements:

- This plugin works in Windows only

- pywin32 python module, install with pip https://pypi.org/project/pywin32/

- enable long path support in Windows if long paths being scanned https://docs.microsoft.com/en-us/windows/win32/fileio/maximum-file-path-limitation?tabs=cmd

🔴 Check that you have the config file in %APPDATA%\diskover_winattrib\config.yaml, if not, copy from default config folder in configs_sample\diskover_winattrib\config.yaml.

🔴 Edit win-attrib plugin config and modify as needed:

notepad %APPDATA%\diskover_winattrib\config.yaml

🔴 Run windows-attrib plugin and get help to see cli options:

cd "C:\Program Files\diskover\plugins_postindex"

python diskover-winattrib.py -h

🔴 Run windows-attrib plugin using index name:

cd "C:\Program Files\diskover\plugins_postindex"

python diskover-winattrib.py diskover-index1

🔴 Run windows-attrib plugin using latest index found for top path and using sqlite db cache:

cd "C:\Program Files\diskover\plugins_postindex"

python diskover-winattrib.py -l /somepath -u

Xytech Asset Creation Plugin

🍿 Watch Demo Video

Xytech Asset Creation Plugin Overview

Post facilities often have customer assets stored on LTO tape media. However, these assets are difficult to discover within the Xytech Media Operations Platform if there is no Asset ID in the customers vault of assets. The plugin is designed to use the Diskover indexer to discover newly restored customer assets from any media. The assets are restored into a folder with naming convention CustomerNumber_CustomerName.

The Xytech Asset Creation plugin then uses the Xytech API to create an asset for the customer in the vault library. The path location is added to the asset within Xytech and the asset # is assigned as a tag to the file/object within the Diskover index.

Xytech Asset Creation Plugin Installation

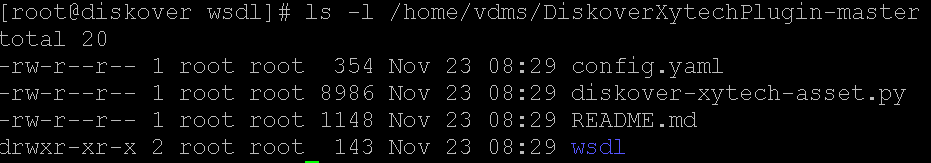

🔴 Extract DiskoverXytechPlugin-master.zip:

cd /tmp

unzip DiskoverXytechPlugin-master.zip

cd /tmp/DiskoverXytechPlugin-master.zip

🔴 Make destination directories:

mkdir /root/.config/diskover_xytech_asset

mkdir /opt/diskover/plugins_postindex/xytech_plugin

🔴 List plugin contents:

ls -l /tmp/DiskoverXytechPlugin-master

🔴 Copy files to proper locations:

cd /tmp/DiskoverXytechPlugin-master

mv config.yaml /root/.config/diskover_xytech_asset/

mv diskover-xytech-asset.py /opt/diskover/plugins_postindex/xytech_plugin/

mv wsdl/ /opt/diskover/plugins_postindex/xytech_plugin/

🔴 Install Python dependencies:

pip3 install suds-community

🔴 Configure plugin settings:

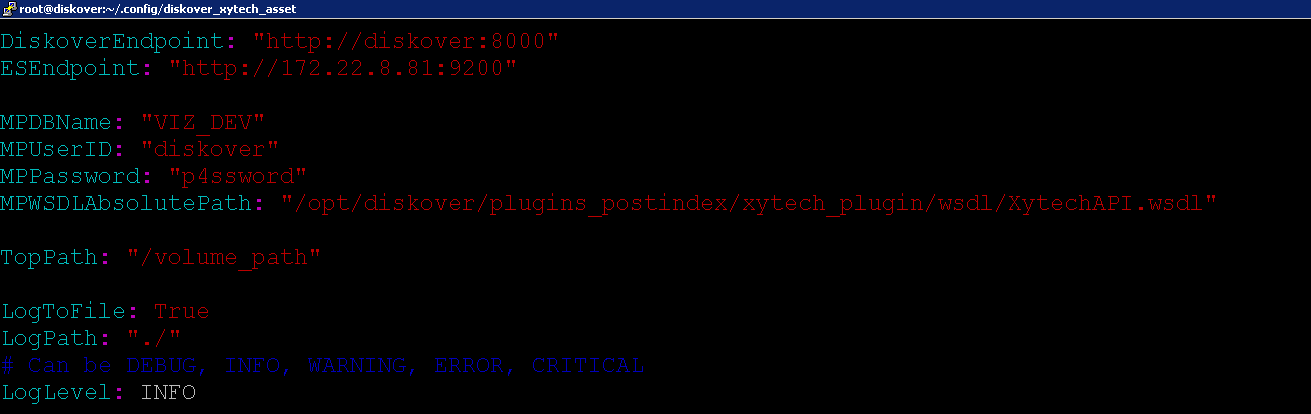

vim /root/.config/diskover_xytech_asset/config.yaml

Diskover Endpoint:

DiskoverEndpoint: http://localhost:8000

ElasticSearch Endpoint:

ESEndpoint: http://172.22.8.31:9200

Xytech Credentials:

MPDBName: VIZ_DEV

MPUserID: diskover

MPPassword: p4ssword

Xytech WSDL file ABSOLUTE path:

MPWSDLAbsolutePath: /opt/diskover/plugins_postindex/xytech_plugin/wsdl/XytechAPI.wsdl

Diskover top path where assets will be restored:

TopPath: /volume_path

Plugin logging:

LogToFile: True

LogPath: ./

LogLevel: INFO

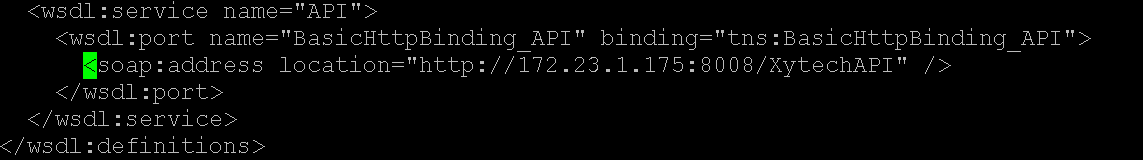

🔴 Configure Xytech API Endpoint

vim /opt/diskover/plugins_postindex/xytech_plugin/XytechAPI.wsdl

Set Xytech API Endpoint:

<soap:address location="http://172.23.1.154:8008/XytechAPI" />

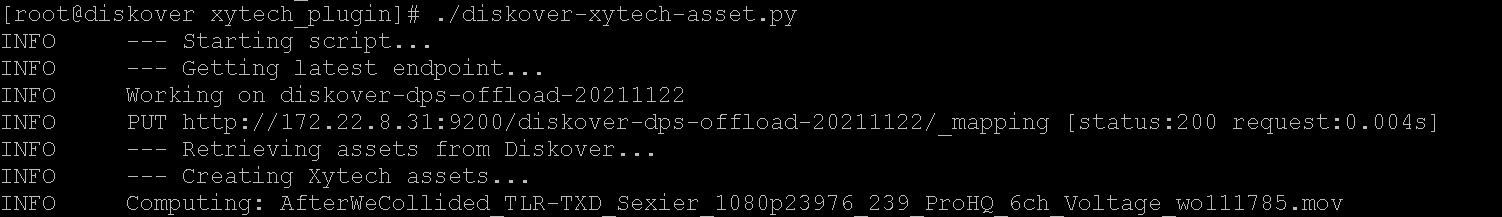

🔴 Run Xytech Asset Creation Plugin:

cd /opt/diskover/plugins_postindex/xytech_plugin/

🔴 Run Command:

./diskover-xytech-asset.py

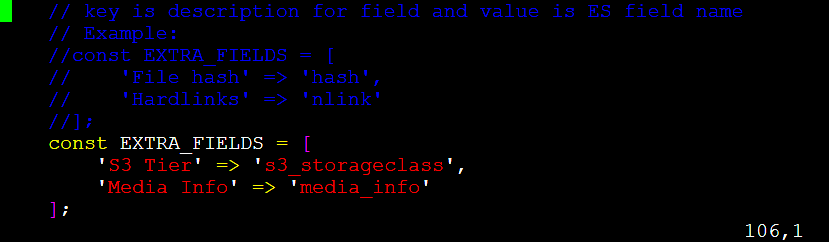

Display Extra Fields on File Search Page

To display additional columns within the file search page of the Diskover-Web user interface, using Media Info as an example:

vim /var/www/diskover-web/src/diskover/Constants.php

🔴 Change ownership to EXTRA_FIELDS:

'Media Info' => 'media_info'

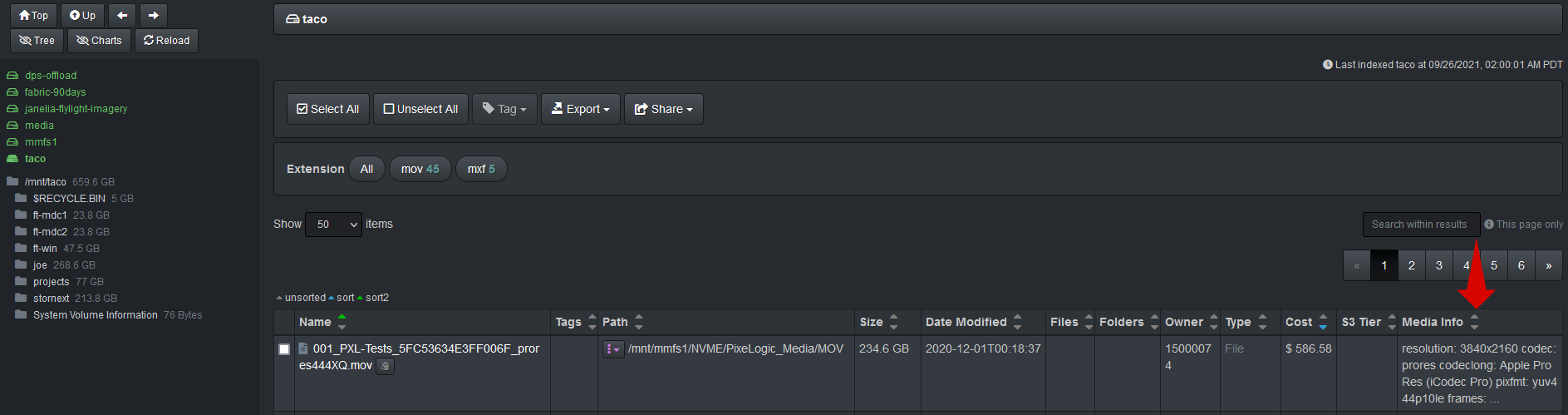

The Media Info column will now be displayed on the file search page of Diskover-web use interface:

List of Extra Fields

For an exhaustive list of extra fields, please refer to the Diskover-Web user interface > gear icon > Help > Default index fields.

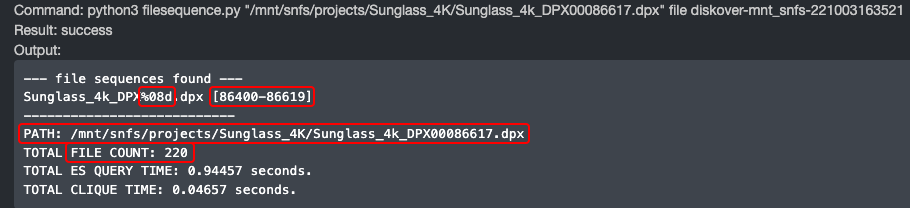

Diskover-Web Plugins | File Actions

Diskover-web is designed for extensibility. The open-source architecture is designed to promote extensibility via web plugins known as File Actions.

File Actions are located in the public/fileactions directory.

File Actions are written in PHP and have the extension .php. Diskover-web includes a few example file actions in the fileaction_samples directory. Any sample files that you wish to use need to be copied to public/fileactions.

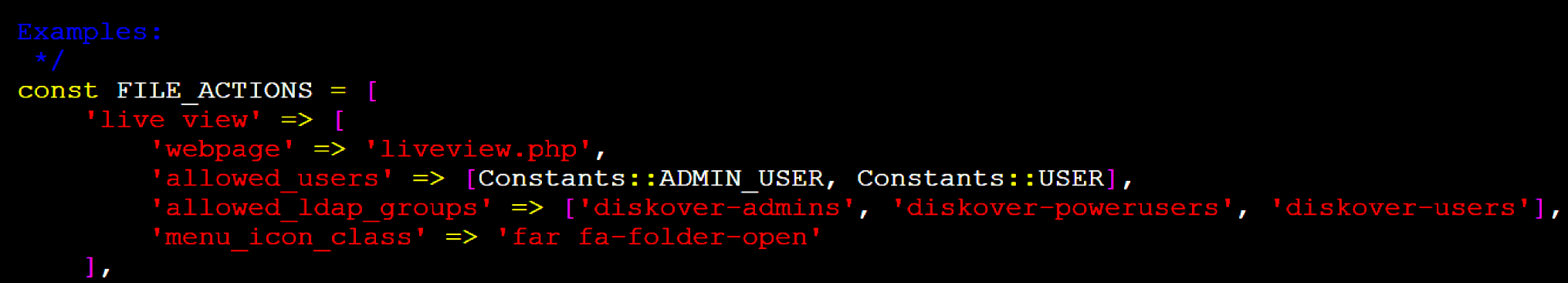

After you have created a new File Action with an extension .php, you will need to add the File Action to the web config file in src/diskover/Constants.php. Edit the config file and look for the section titled File Actions. You will need to add the file action to the const FILE_ACTIONS array. There are some examples in the Constants.php.sample default config file and below.

Here is an example of adding a File Action:

const FILE_ACTIONS = [

'find file sequences' => [

'webpage' => 'filesequence.php',

'allowed_users' => [Constants::ADMIN_USER, Constants::USER],

'allowed_ldap_groups' => ['diskover-admins', 'diskover-powerusers', 'diskover-users'],

'menu_icon_class' => 'far fa-images'

]

];

🔴 Each File Action is stored as an associative array with the key being the file action name:

-

webpage : the filename of the File Action

-

allowed_users : list of allowed local and/or AD/LDAP user names that can run the File Action

-

allowed_ldap_groups : list of allowed AD/LDAP group names that can run the File Action

-

menu_icon_class : Font Awesome css class name for icon https://fontawesome.com/

Other File Action examples:

const FILE_ACTIONS = [

'list dir' => [

'webpage' => 'listdir.php',

'allowed_users' => [Constants::ADMIN_USER, Constants::USER],

'allowed_ldap_groups' => ['diskover-admins', 'diskover-powerusers', 'diskover-users'],

'menu_icon_class' => 'far fa-folder-open'

],

'newer subdirs' => [

'webpage' => 'newersubdirs.php',

'allowed_users' => [Constants::ADMIN_USER, Constants::USER],

'allowed_ldap_groups' => ['diskover-admins', 'diskover-powerusers', 'diskover-users'],

'menu_icon_class' => 'fas fa-folder-minus'

],

'get image http' => [

'webpage' => 'getimagehttp.php',

'allowed_users' => [Constants::ADMIN_USER, Constants::USER],

'allowed_ldap_groups' => ['diskover-admins', 'diskover-powerusers', 'diskover-users'],

'menu_icon_class' => 'far fa-file-image'