Diskover Setup and Administration Guide

For annual subscriptions v2.4 +

This guide is intended for Service Professionals and System Administrators.

The best way to install Diskover is by using our internal Ansible playbooks. If you're a customer or setting up a POC, please create a support ticket for assistance with your installation or upgrade. If you prefer to blaze your own trail with a more manual approach, please follow the instructions below.

Introduction

Overview

Diskover Data is a web-based platform that provides single-pane viewing of distributed digital assets. It provides point-in-time snapshot indexes of data fragmented across cloud and on-premise storage spread across an entire organization. Users can quickly and easily search across company files. Diskover is a data management application for your digital filing cabinet, providing powerful granular search capabilities, analytics, file-based workflow automation, and ultimately enables companies to scale their business and be more efficient at reducing their operating costs.

For more information, please visit diskoverdata.com

Approved AWS Technology Partner

Diskover Data is an official AWS Technology Partner. Please note that AWS has renamed Amazon Elasticsearch Service to Amazon OpenSearch Service. Most operating and configuration details for OpenSearch Service should also be applicable to Elasticsearch..

Diskover Use Cases

Diskover addresses unstructured data stored across various storage repositories. Data curation encompasses the manual and automated processes needed for principled and controlled data creation, maintenance, cleanup, and management, together with the capacity to add value to data.

System Administrators

The use case for System Administrators is often centered around data cleanup, data disposition, ensuring data redundancy, and automating data. System Administrators are often tasked with controlling costs associated with unstructured data.

Line of Business Users

The use cases for Line of Business users are often centered around adding value to data, finding relevant data, correlating, analyzing, taking action on data sets, and adding business context to data.

Document Conventions

| TOOL | PURPOSE |

|---|---|

| Copy/Paste Icon for Code Snippets | Throughout this document, all code snippets can easily be copied to a clipboard using the copy icon on the far right of the code block: |

| 🔴 | Proposed action items |

| ✏️ and ⚠️ | Important notes and warnings |

| Features Categorization | IMPORTANT

|

| Core Features |     |

| Industry Add-Ons | These labels will only appear when a feature is exclusive to a specific industry.    |

Architecture Overview

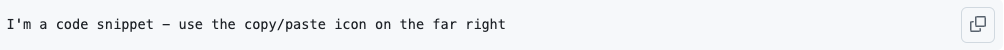

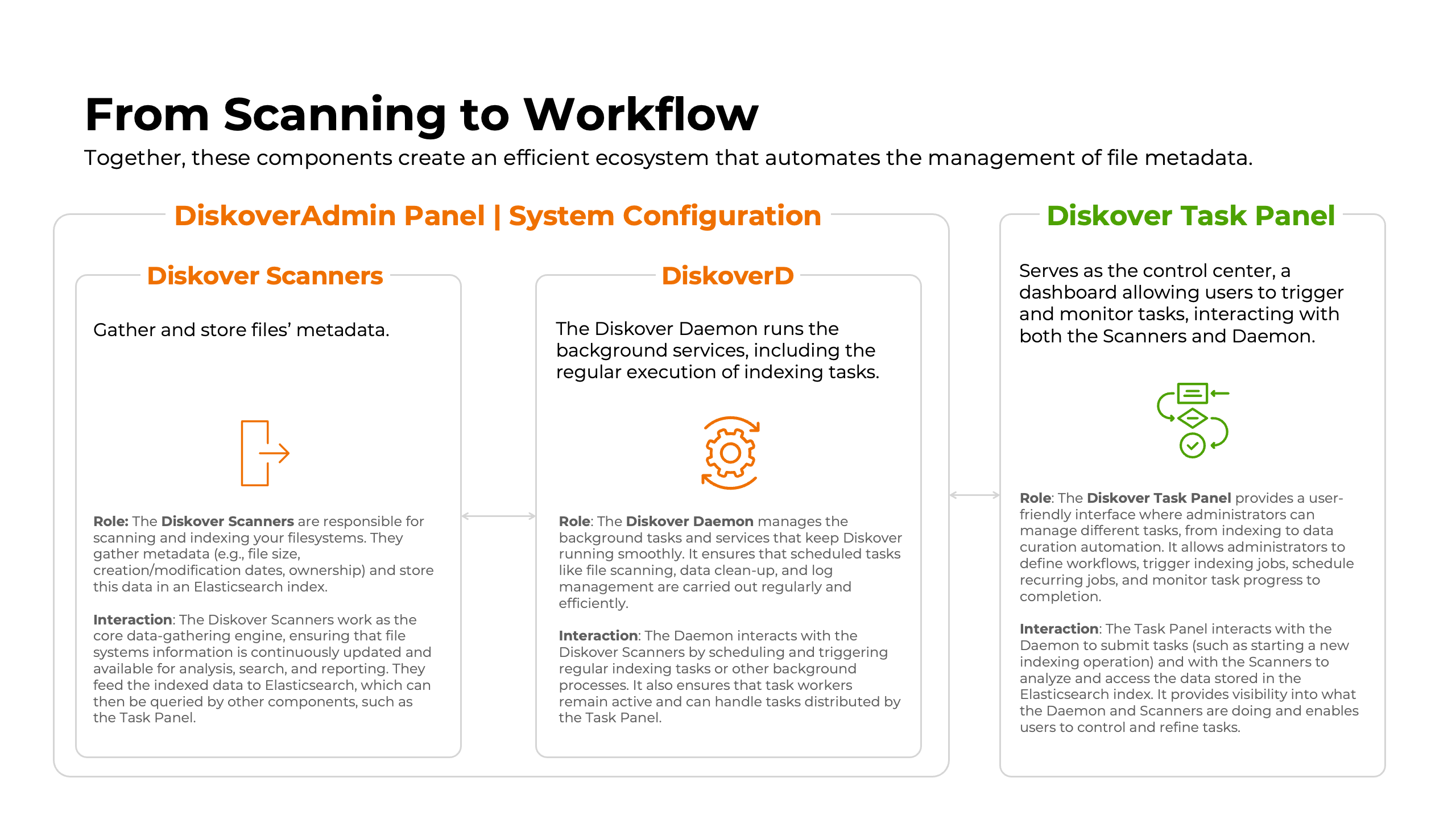

Diskover's Main Components

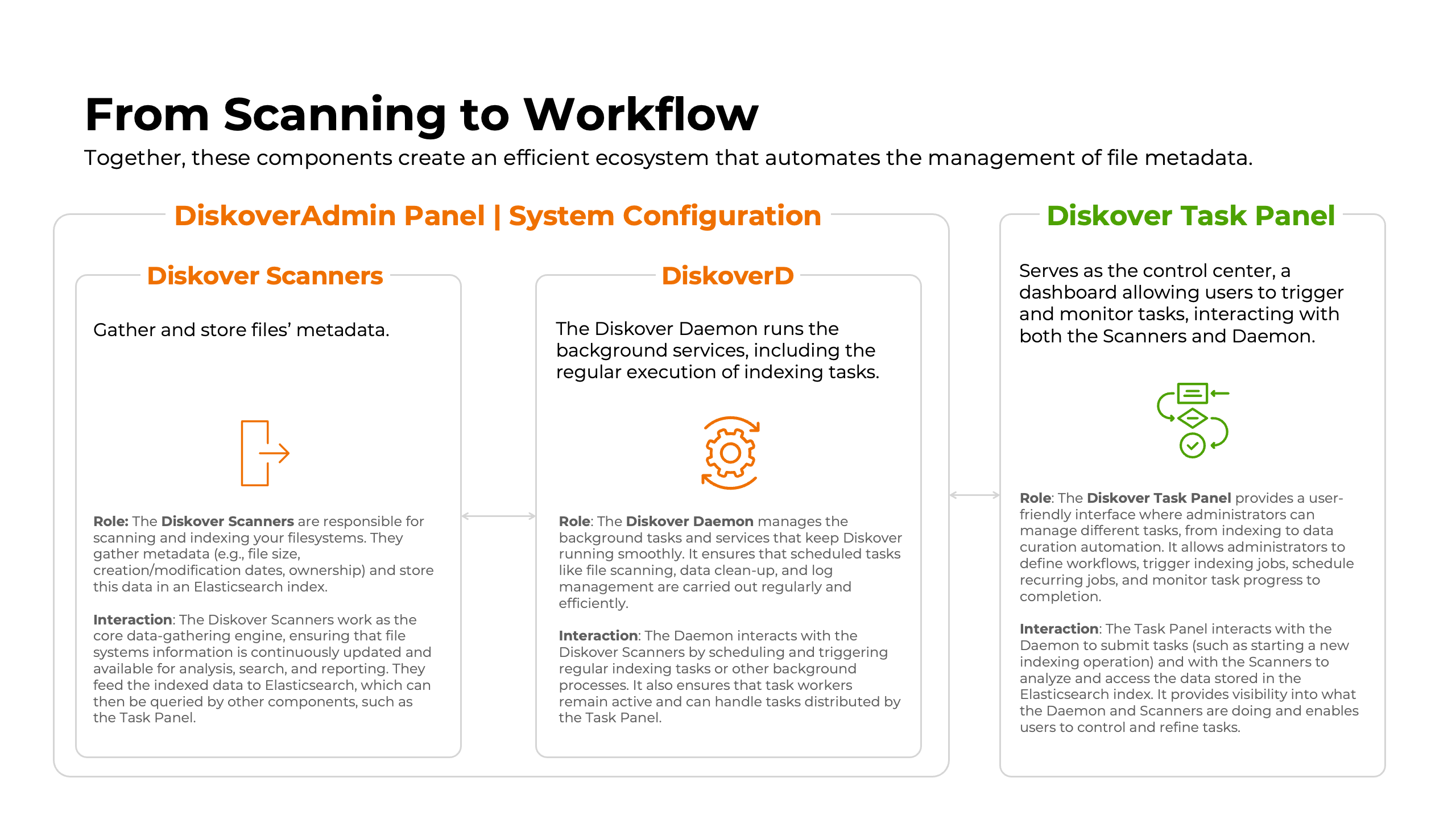

Deploying Diskover uses 3 major components:

| COMPONENT | ROLE |

|---|---|

| 1️⃣ Elasticsearch |

Elasticsearch is the backbone of Diskover. It indexes and organizes the metadata collected during the scanning process, allowing for fast and efficient querying of large datasets. Elasticsearch is a distributed, RESTful search engine capable of handling vast amounts of data, making it crucial for retrieving information from scanned file systems and directories. |

| 2️⃣ Diskover-Web |

Diskover-Web is the user interface that allows users to interact with the Diskover system. Through this web-based platform, users can search, filter, and visualize the data indexed by Elasticsearch. It provides a streamlined and intuitive experience for managing, analyzing, and curating data. Diskover-Web is where users can explore results, run tasks, and monitor processes. |

| 3️⃣ Diskover Scanners |

The scanners, sometimes called crawlers, are the components responsible for scanning file systems and collecting metadata. These scanners feed that metadata into Elasticsearch for storage and later retrieval. Diskover supports various types of scanners, which are optimized for different file systems, ensuring efficient and comprehensive data collection. Out of the box, Diskover efficiently scans generic filesystems. However, in today’s complex IT architectures, files are often stored across a variety of repositories. To address this, Diskover offers various alternate scanners as well as provides a robust foundation for building alternate scanners, enabling comprehensive scanning of any file storage location. |

| 🔀 Diskover Ingesters |

Diskover’s ingesters are the ultimate bridge between your unstructured data and high-performance, next-generation data platforms. By leveraging the open-standard Parquet format, Diskover converts and streams your data efficiently and consistently. Whether you’re firehosing into Dell data lakehouse, Snowflake, Databricks, or other modern data infrastructures, our ingesters ensure your data flows effortlessly—optimized for speed, scalability, and insight-ready delivery. |

Diskover Platform Overview

Click here for a full screen view of the Diskover Platform Overview.

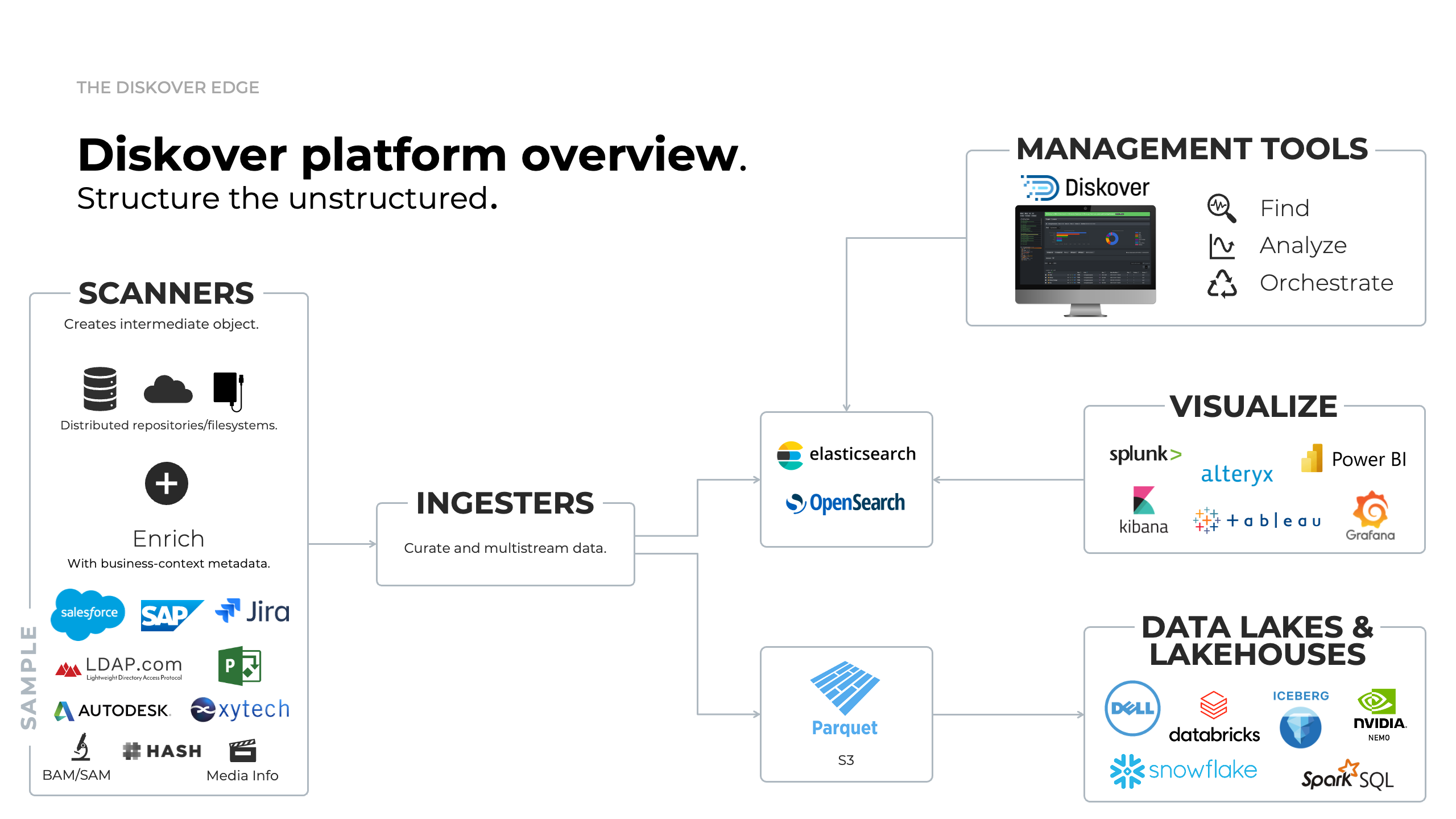

Diskover Scale-Out Architecture Overview Diagram

Click here for a full screen view of the Diskover Architecture Overview diagram.

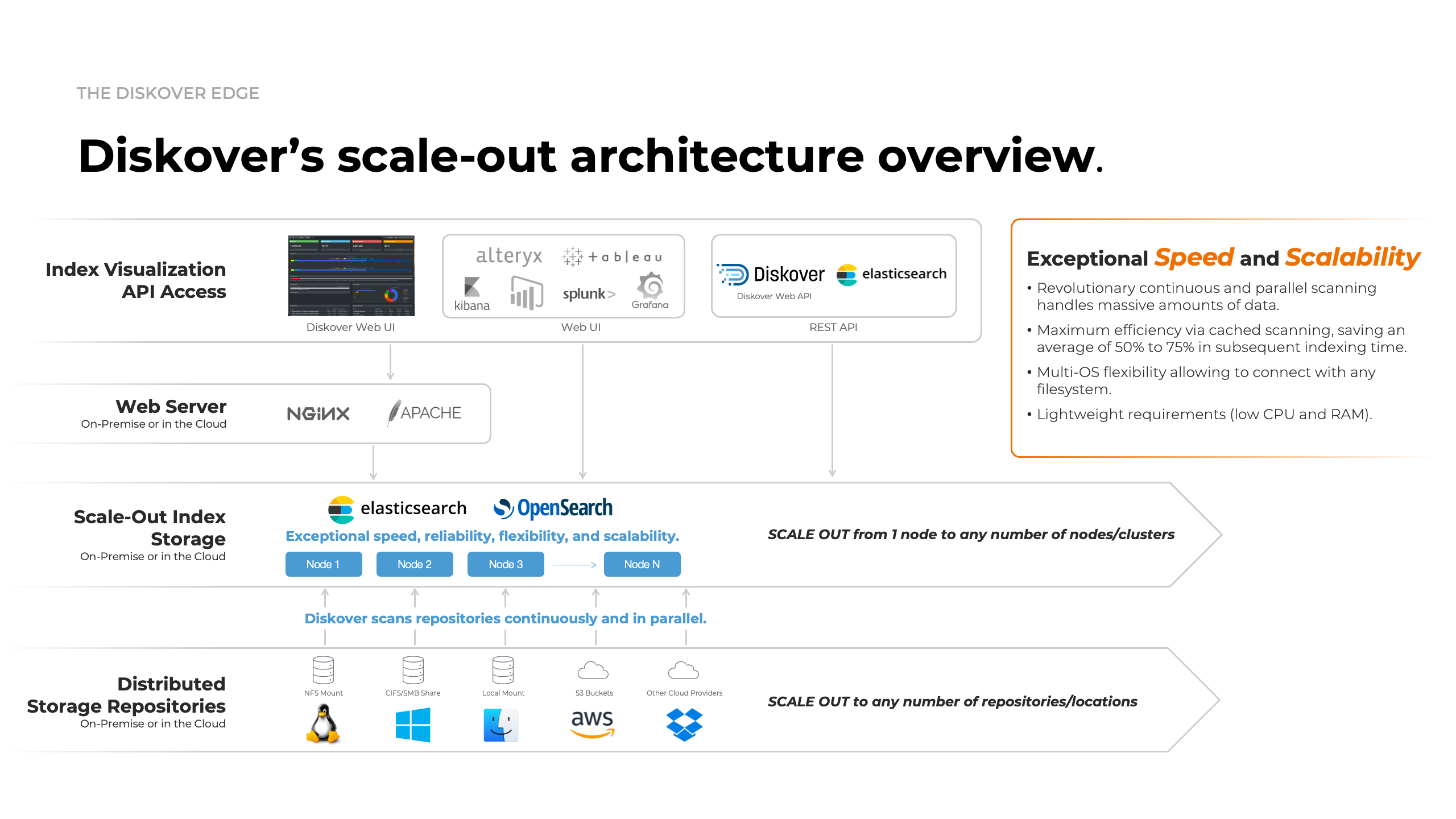

Diskover Config Architecture Overview

It is highly recommended to separate the Elasticsearch node/cluster, web server, and indexing host(s).

Click here for the full screen view of this diagram.

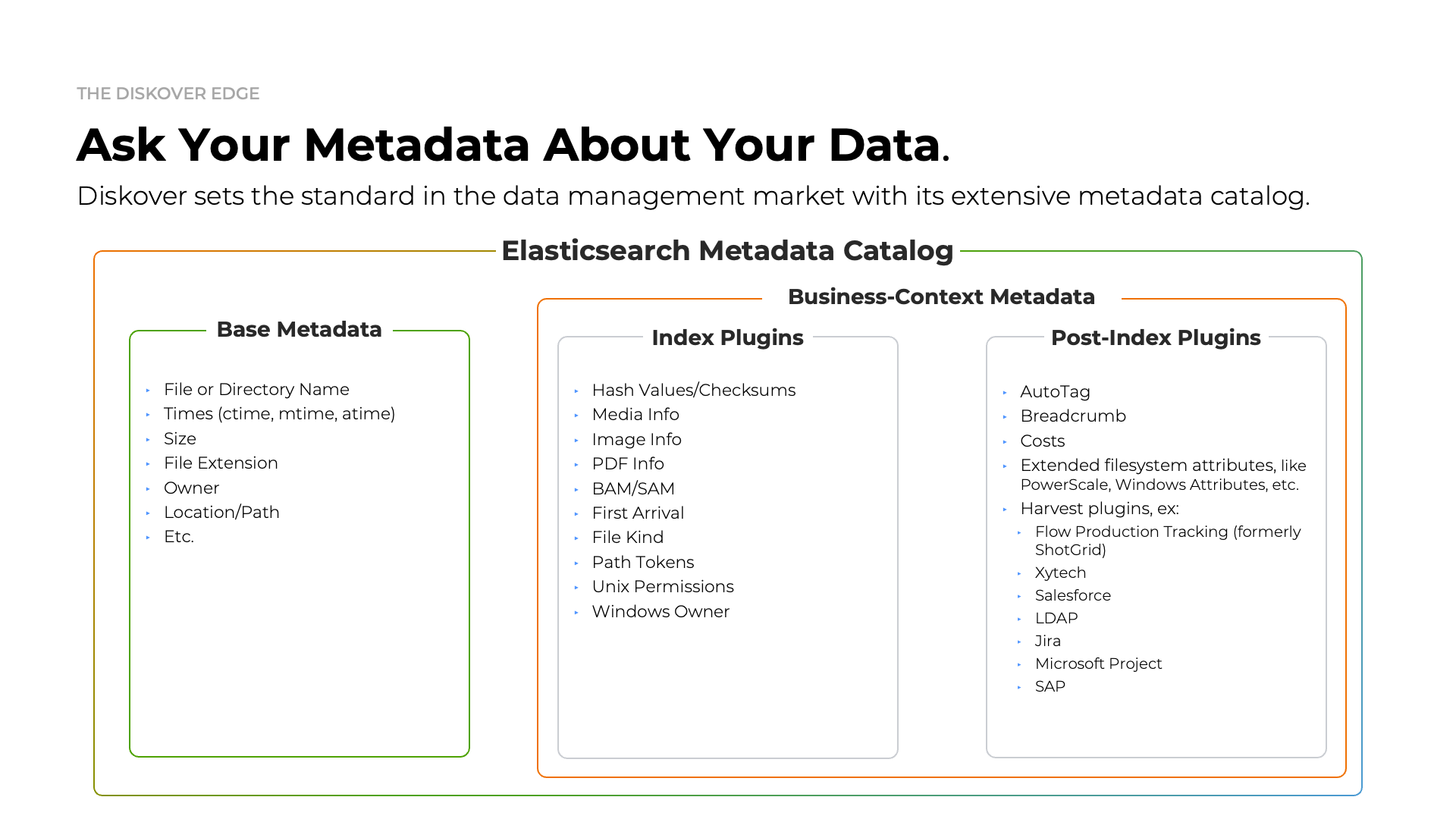

Metadata Catalog

Diskover is designed to scan generic filesystems out of the box efficiently, but it also supports flexible integration with various repositories through customizable alternate scanners. This adaptability allows Diskover to scan diverse storage locations and include enhanced metadata for precise data management and analysis.

With a wide range of metadata harvest plugins, Diskover enriches indexed data with valuable business context attributes, supporting workflows that enable targeted data organization, retrieval, analysis, and enhanced workflow. These plugins can run at indexing or post-indexing intervals, balancing comprehensive metadata capture with high-speed scanning.

Click here for a full screen view of the Metadata Catalog Summary.

Requirements

Overview

Visit the System Readiness section for further information on preparing your system for Diskover.

| Packages | Usage |

|---|---|

| Python 3.8+ | Required for Diskover scanners/workers and Diskover-Web → go to installation instructions |

| Elasticsearch 8.x | Is the heart of Diskover → go to installation instructions |

| PHP 8.x and PHP-FPM | Required for Diskover-Web → go to installation instructions |

| NGINX or Apache | Required for Diskover-Web → go to installation instructions Note that Apache can be used instead of NGINX but the setup is not supported or covered in this guide. |

Security

- Disabling SELinux and using a software firewall is optional and not required to run Diskover.

- Internet access is required during the installation to download packages with yum.

Recommended Operating Systems

As per the config diagram in the previous chapter, note that Windows and Mac are only supported for scanners.

| Linux* | Windows | Mac |

|---|---|---|

|

|

|

* Diskover can technically run on all flavors of Linux, although only the ones mentioned above are fully supported.

Elasticsearch Requirements

Elasticsearch Version

Diskover is currently tested and deployed with Elasticsearch v8.x. Note that ES7 Python packages are required to connect to an Elasticsearch v8 cluster.

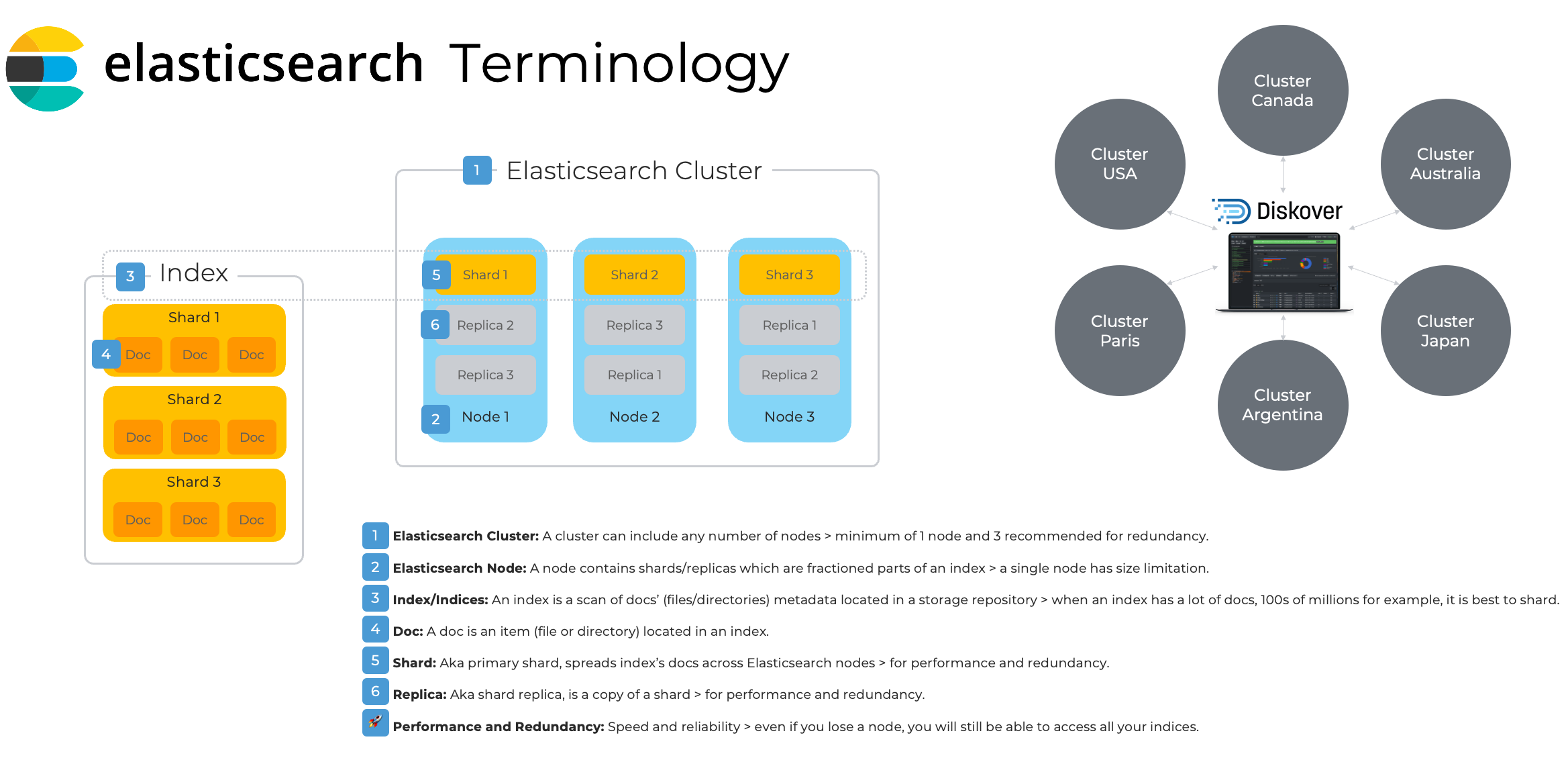

Elasticsearch Architecture Overview and Terminology

Please refer to this diagram to better understand the terminology used by Elasticsearch and throughout the Diskover documentation.

Click here for a full-screen view of the Elasticsearch Architecture diagram.

Click here for a full-screen view of the Elasticsearch Architecture diagram.

Elasticsearch Cluster

- The foundation of the Diskover platform consists of a series of Elasticsearch indexes, which are created and stored within the Elasticsearch endpoint.

- An important configuration for Elasticsearch is that you will want to set Java heap mem size - it should be half your Elasticsearch host ram up to 32 GB.

- For more detailed Elasticsearch guidelines, please refer to AWS sizing guidelines.

- For more information on resilience in small clusters.

Requirements for POC and Deployment

| Proof of Concept | Production Deployment | |

|---|---|---|

| Nodes | 1 node | 3 nodes for performance and redundancy are recommended |

| CPU | 8 to 32 cores | 8 to 32 cores |

| RAM | 8 to 16 GB (8 GB reserved to Elasticsearch memory heap) | 64 GB per node (16 GB reserved to Elasticsearch memory heap |

| DISK | 250 to 500 GB of SSD storage per node (root 150 GB, home 25 GB, var 800 GB) | 1 TB of SSD storage per node (root 150 GB, home 25 GB, var 800 GB) |

AWS Sizing Resource Requirements

Please consult the Diskover AWS Customer Deployment Guide for all details.

| AWS Elasticsearch Domain | AWS EC2 Web-Server | AWS Indexers | |

|---|---|---|---|

| Minimum | i3.large | t3.small | t3.large |

| Recommended | i3.xlarge | t3.medium | t3.xlarge |

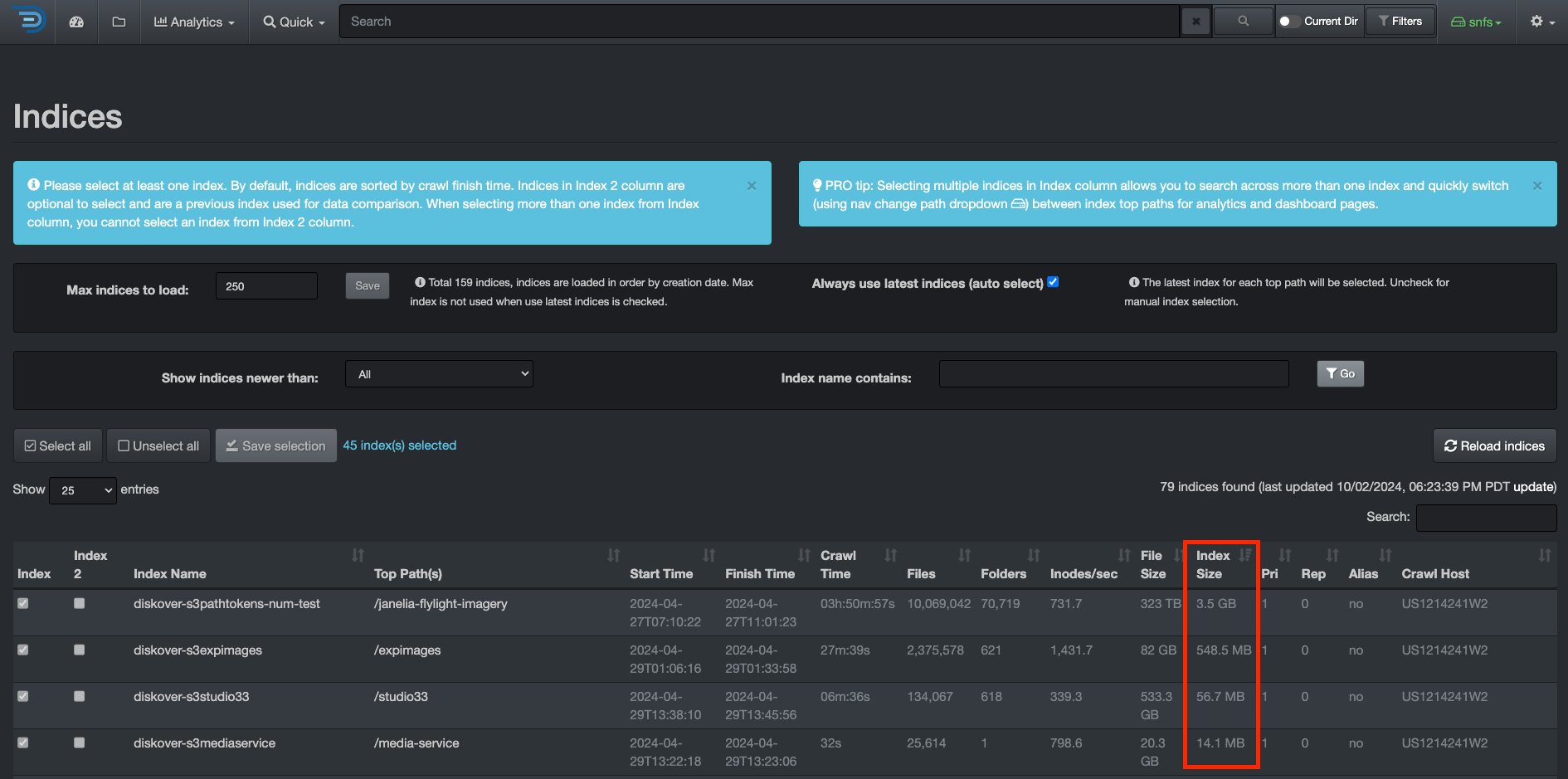

Indices

Rule of Thumb for Shard Size

- Try to keep shard size between 10 – 50 GB

- Ideal shard size approximately 20 – 40 GB

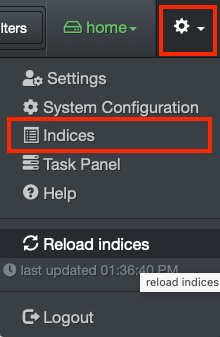

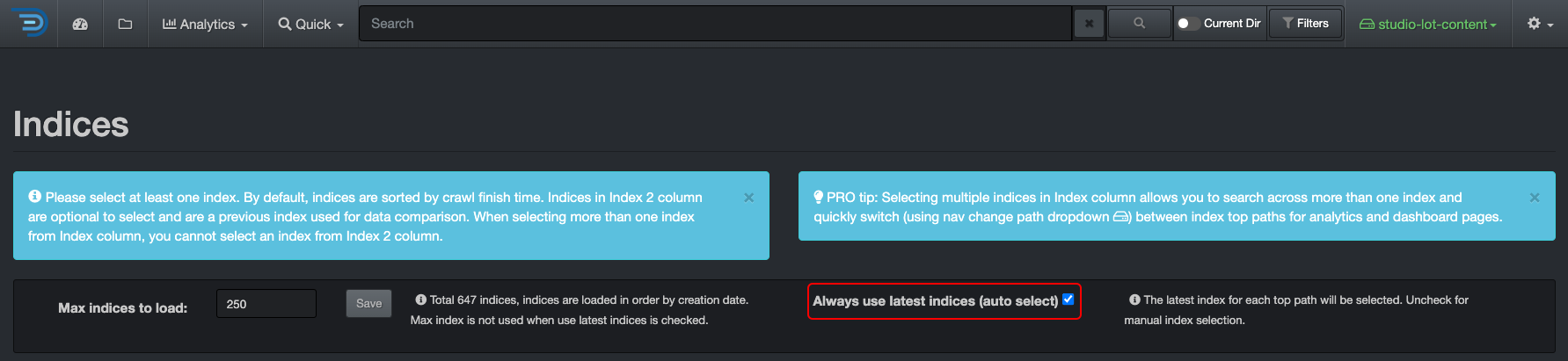

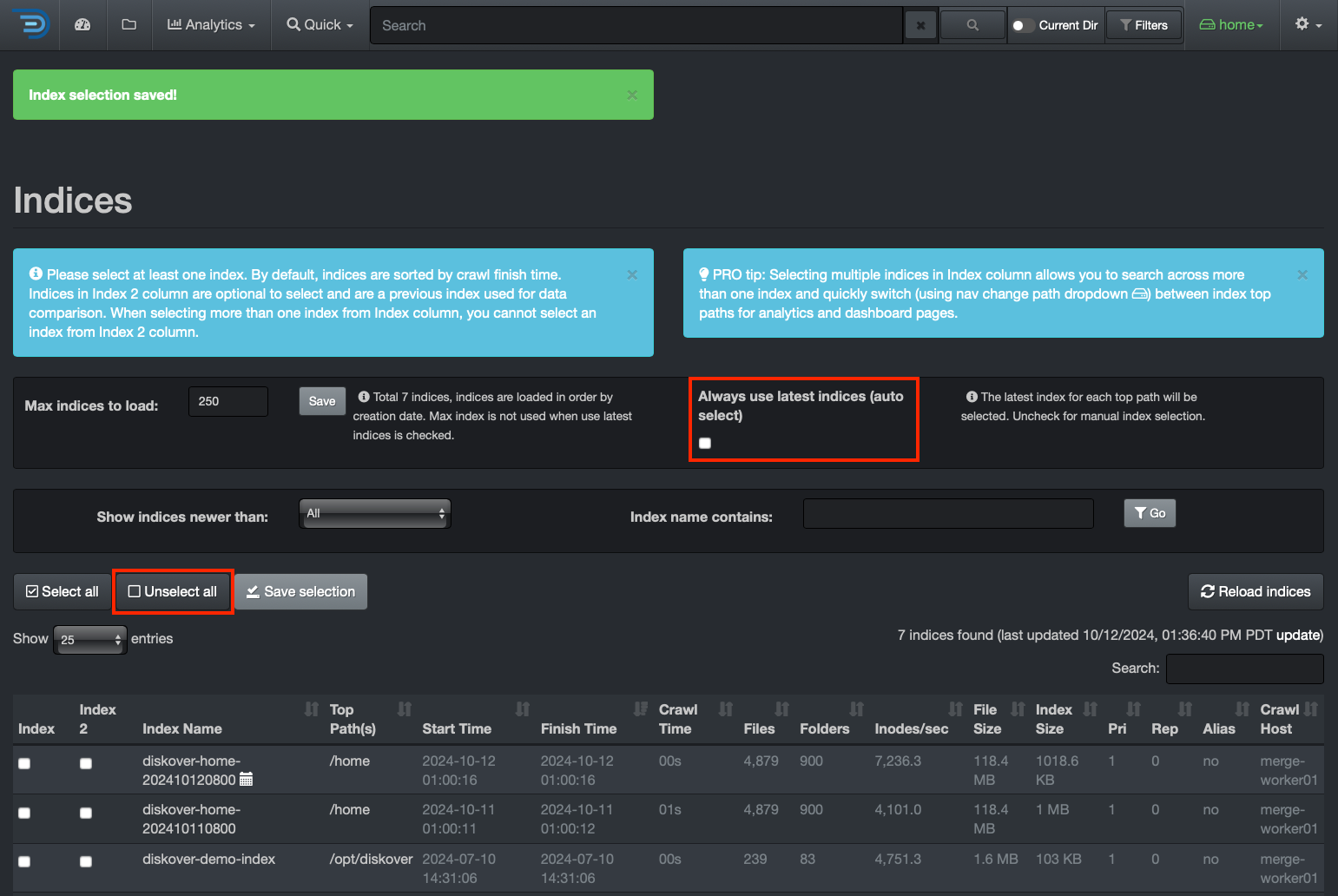

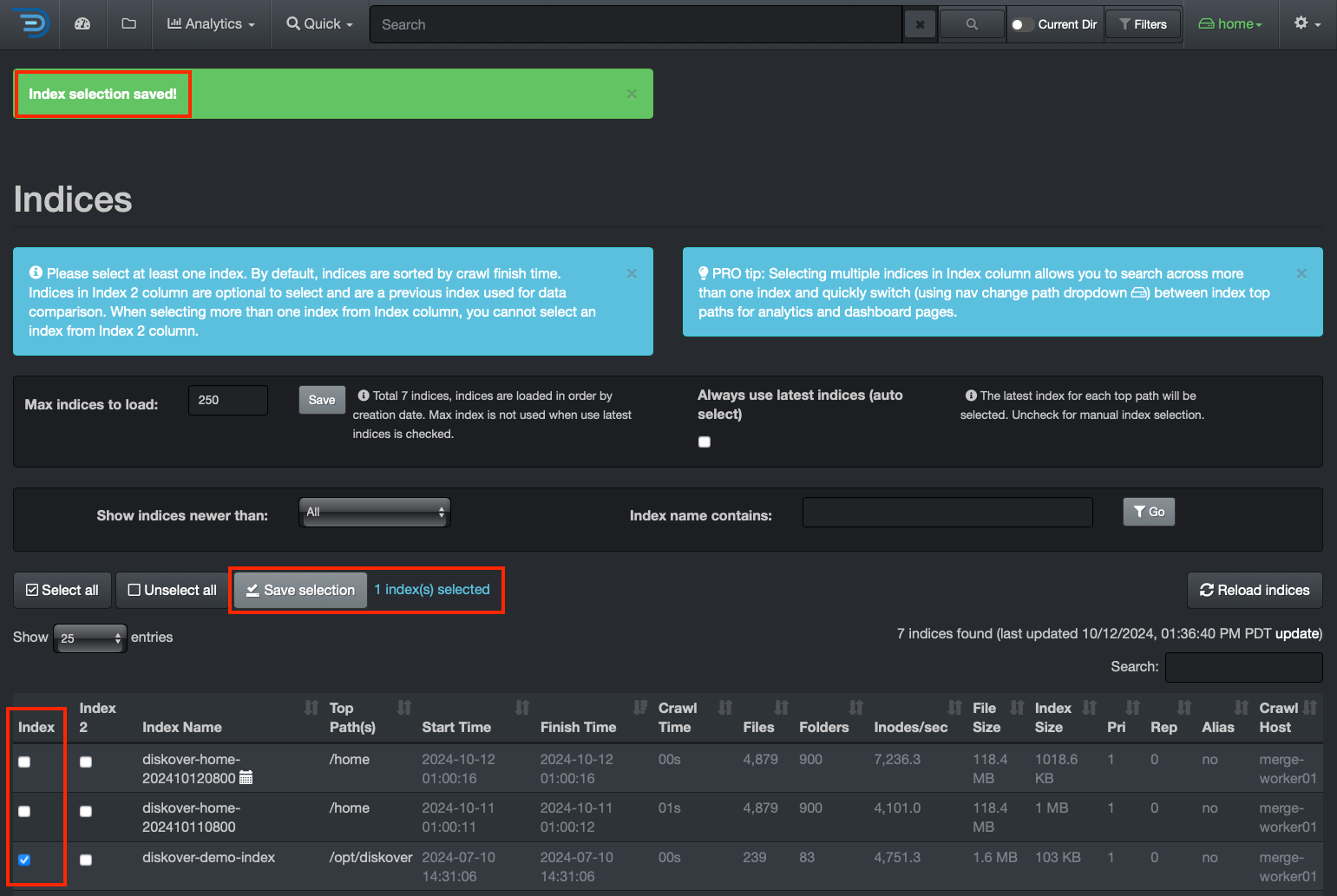

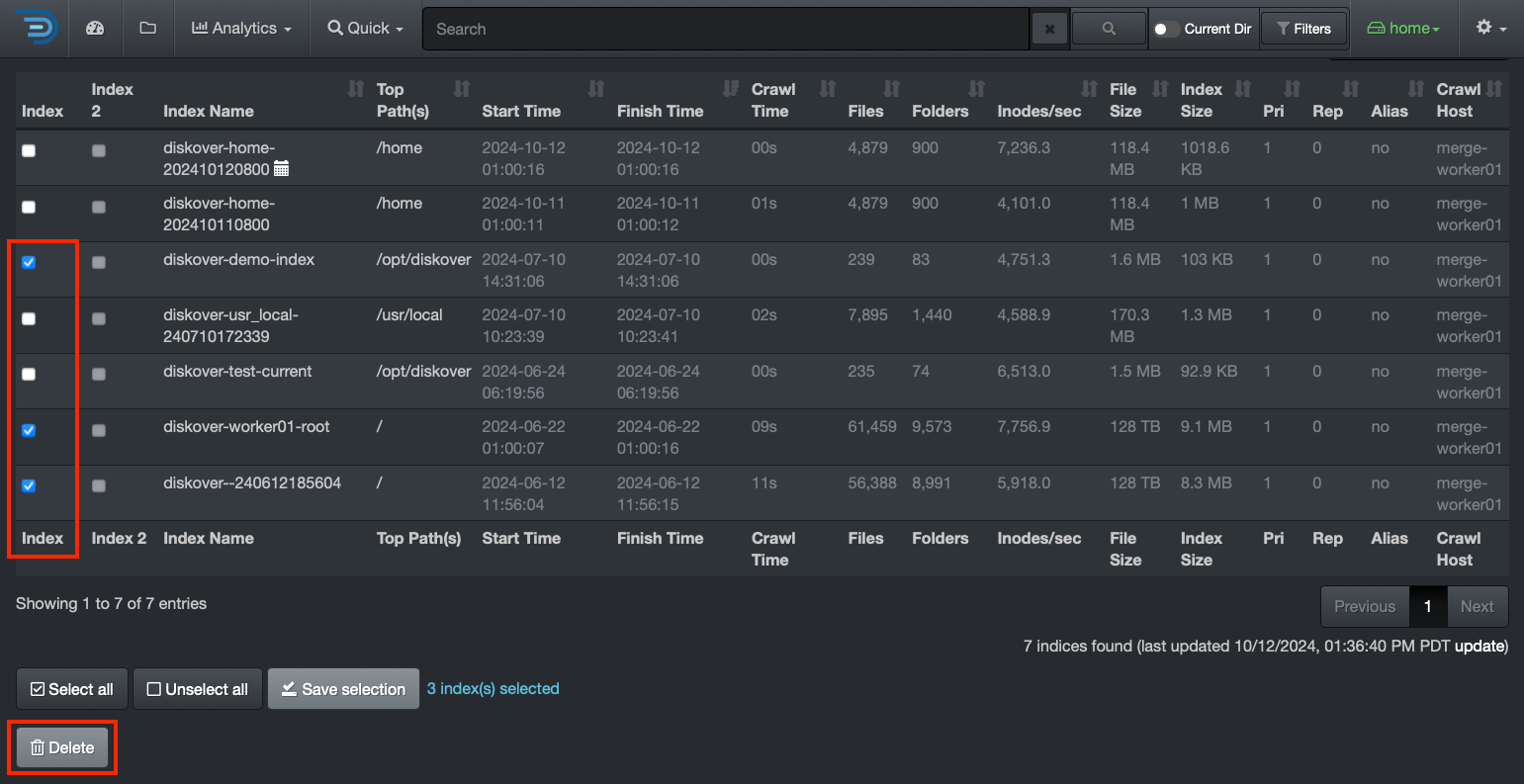

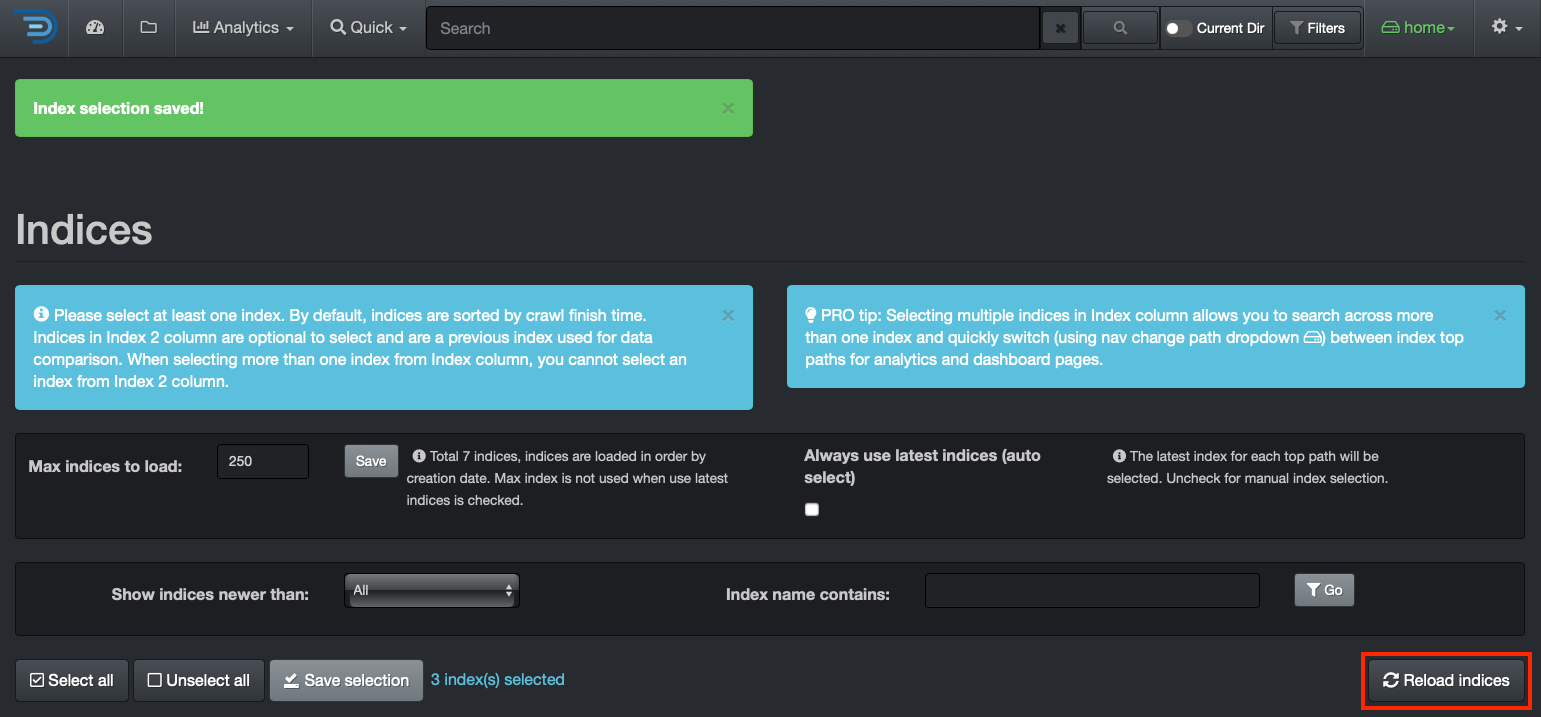

Once you have a reference for your index size, you can decide to shard if applicable. To check the size of your indices, from the user interface, go to → ⛭ → Indices:

Click here for a full-screen view of this image.

Click here for a full-screen view of this image.

Examples

- An index that is 60 GB in size: you will want to set shards to 3 and replicas* to 1 or 2 and spread across 3 ES nodes.

- An index that is 5 GB in size: you will want to set shards to 1 and replicas* to 1 or 2 and be on 1 ES node or spread across 3 ES nodes (recommended).

⚠️ Replicas help with search performance, redundancy and provide fault tolerance. When you change shard/replica numbers, you have to delete the index and re-scan.

Estimating Elasticsearch Storage Requirements

Individual Index Size

- 1 GB for every 5 million files/folders

- 20 GB for every 100 million files/folders

⚠️ The size of the files is not relevant.

Replicas/Shard Sizes

Replicas increase the size requirements by the number of replicas. For example, a 20 GB index with 2 replicas will require a total storage capacity of 60 GB since a copy of the index (all docs) is on other Elasticsearch nodes. Multiple shards do not increase the index size, as the index's docs are spread across the ES cluster nodes.

⚠️ The number of docs per share is limited to 2 billion, which is a hard Lucene limit.

Rolling Indices

- Each Diskover scan results in the creation of a new Elasticsearch index.

- Multiple indexes can be maintained to keep the history of storage indices.

- Elasticsearch overall storage requirements will depend on history index requirements.

- For rolling indices, you can multiply the amount of data generated for a storage index by the number of indices desired for retention period. For example, if you generate 2 GB for a day for a given storage index, and you want to keep 30 days of indices, 60 GB of storage is required to maintain a total of 30 indices.

Diskover-Web Server Requirements

The Diskover-Web HTML5 user interface requires a Web server platform. It provides visibility, analysis, workflows, and file actions from the indexes that reside on the Elasticsearch endpoint.

Requirements for POC and Deployment

| Proof of Concept | Production Deployment | |

|---|---|---|

| CPU | 8 to 32 cores | 8 to 32 cores |

| RAM | 8 to 16 GB | 8 to 16 GB |

| DISK | 100 GB of SSD storage (root 75 GB, home 25 GB) | 100 GB of SSD storage (root 75 GB, home 25 GB) |

Diskover Scanners Requirements

You can install Diskover scanners on a server or virtual machine. Multiple scanners can be run on a single machine or multiple machines for parallel crawling.

The scanning host uses a separate thread for each directory at level 1 of a top crawl directory. If you have many directories at level 1, you will want to increase the number of CPU cores and adjust max threads in the diskover config. This parameter, as well as many others, can be configured from the user interface, which contains help text to guide you.

Requirements for POC and Deployment

| Proof of Concept | Production Deployment | |

|---|---|---|

| CPU | 8 to 32 cores | 8 to 32 cores |

| RAM | 8 to 16 GB | 8 to 16 GB |

| DISK | 250 to 500 GB SSD | 500 GB (root 450 GB, home 25 GB) |

Skills and Knowledge Requirements

This document is intended for Service Professionals and System Administrators who install the Diskover software components. The installer should have strong familiarity with:

- Operating System on which on-premise Diskover scanner(s) are installed.

- Basic knowledge of:

- EC2 Operating System on which Diskover-Web HTML5 user interface is installed.

- Configuring a Web Server (Apache or NGINX).

⚠️ Attempting to install and configure Diskover without proper experience or training can affect system performance and security configuration.

⏱️ The initial install, configuration, and deployment of the Diskover are expected to take 1 to 3 hours, depending on the size of your environment and the time consumed with network connectivity.

Software Download

Community Edition

There are 2 ways to download the free Community Edition, the easiest being the first option.

Download from GitHub

🔴 From your GitHub account: https://github.com/diskoverdata/diskover-community/releases

🔴 Download the tar.gz/zip

Download from a Terminal

🔴 Install git on Centos:

yum install -y git

🔴 Install git on Ubuntu:

apt install git

🔴 Clone the Diskover Community Edition from the GitHub repository:

mkdir /tmp/diskover

git clone https://github.com/diskoverdata/diskover-community.git /tmp/diskover

cd /tmp/diskover

Annual Subscription Editions

We are currently moving to a new platform for software download. Meanwhile, please open a support ticket and we will send you a link, whether you need the OVA or the full version of Diskover.

Click these links for information on how to create an account and how to create a support ticket.

System Readiness

Overview

This section describes the preliminary steps to installing Diskover. Other software installations will be covered in the subsequent sections.

Linux System Readiness

This section breaks down the recommended hardening of a Linux system prior to the deployment of the Diskover software.

Disable Firewalld & Security Enhanced Linux (SELinux)

Be default, SELinux should be disabled. If you have a corporate firewall in place or VPC security groups that restrict access to Diskover machines, you can safely disable the local Linux firewall.

🔴 Quick command to change the SELinux config:

'sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config'

🔴 Disable firewalld, doing the --now will also stop the service:

systemctl disable firewalld --now

🔴 Disabling SELinux requires a reboot of the system in order to take affect, lets do that now:

reboot now

🔴 Validate SELinux is disabled, that command should return Disabled:

getenforce

DNF Package Upgrade

Before installing custom packages or any of the Diskover software, upgrade all base-level system packages installed with your Linux system. There might be cases where specific package management repositories have to be enabled on your Linux machine prior to running this installation block.

🔴 DNF upgrade:

dnf upgrade -y \

&& \

dnf install epel-release -y \

&& \

dnf install -y \

vim vim-enhanced tar htop nmap yum-utils tmux /usr/bin/sqlite3 mlocate postfix jq gcc \

net-tools bind-utils traceroute pigz screen dstat \

iotop strace tree pv atop lsof git zip unzip wget \

hdparm telnet glances sudo nss-util iftop tldr make

Enable NTP for S3 Scanning

Enabling NTP is optional but recommended if your system is not already synchronized. Without NTP enabled, attempting to scan S3 buckets may result in crawler failures due to a significant mismatch between the request time and the current system time.

🔴 Verify if NTP is set up or not:

timedatectl

In the return, you should see System Clock Synchronized.

- If set to yes, then NTP is synchronized.

- If set to no, then continue with the next step.

🔴 Enable NTP:

timedatectl set-ntp true

🔴 timedatectl leverages chronyd when you run the command above. To verify that the chronyd service came online:

systemctl status chronyd

Windows System Readiness

🚧 Instructions to follow. Meanwhile, please note that Diskover currently only supports Windows for scanners/workers.

Mac System Readiness

🚧 Instructions to follow. Meanwhile, please note that Diskover currently only supports Mac for scanners/workers.

✅ Checklist

The chapters in this guide are in the logical order to follow for installing and configuring Diskover. This checklist is a summary for quick reference.

| STEP | TASK |

|---|---|

| 1 | Elasticsearch Installation |

| 2 | Scanners/Workers Installation |

| 3 | DiskoverD Task Worker Daemon Installation |

| 4 | Celery Installation |

| 5 | RabbitMQ or Amazon MQ Installation |

| 6 | Diskover-Web Installation |

| 7 | DiskoverAdmin Installation |

| 8 | Initial Configuration |

| 9 | Create Alternate Configurations |

| 10 | Create Tasks/Launch your first scan! |

| 11 | Configure Authentication - optional |

| 12 | Configure Access Control - optional |

| 13 | Configure Backup Environment - optional but strongly suggested |

| 14 | Configure Alternate Scanners - optional |

| 15 | Configure Index Plugins - optional |

| 16 | Configure Post-Index Plugins - optional |

| 17 | Configure File Action Plugins - optional |

| 18 | Configure Tags - optional |

| 19 | Configure Analytics - optional |

| 20 | Indices Management |

Click here for a detailed list of configurable features and how to access them.

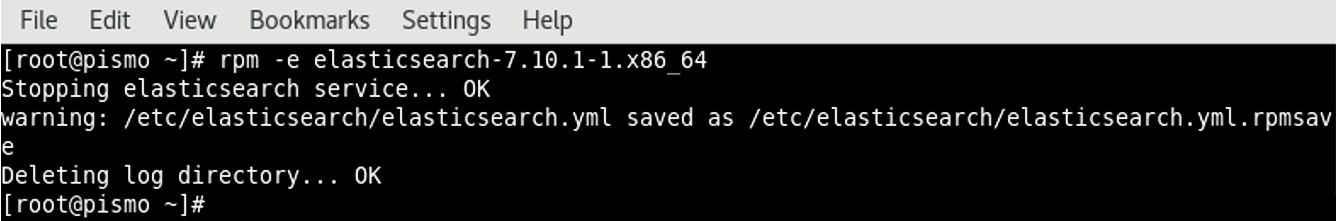

Elasticsearch Installation

Overview

This section covers the basic installation of Elasticsearch v8, commonly referred to as ES, throughout Diskover's documentation and user interface. This section covers:

- Setting up your first Elasticsearch node and we will leave ES authentication disabled by default for now.

- If you have multiple nodes in your environment, you will need to repeat this process for each node, as each node requires its dedicated system.

Once all the components are installed, you will be able to refine your Elasticsearch environment configuration. We strongly recommend following the deployment order outlined in this guide.

Here are some quick links you might need:

- Set up a cluster

- Set up multiple clusters

- Download the current release of Elasticsearch

- Download past releases of Elasticsearch

Single Node Setup without SSL

Java Open JDK Package Installation

Let's start this process by setting up your first node:

🔴 Install Java v21:

dnf install java-21-openjdk

🔴 Install Elasticsearch v8:

dnf install https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-8.15.2-x86_64.rpm

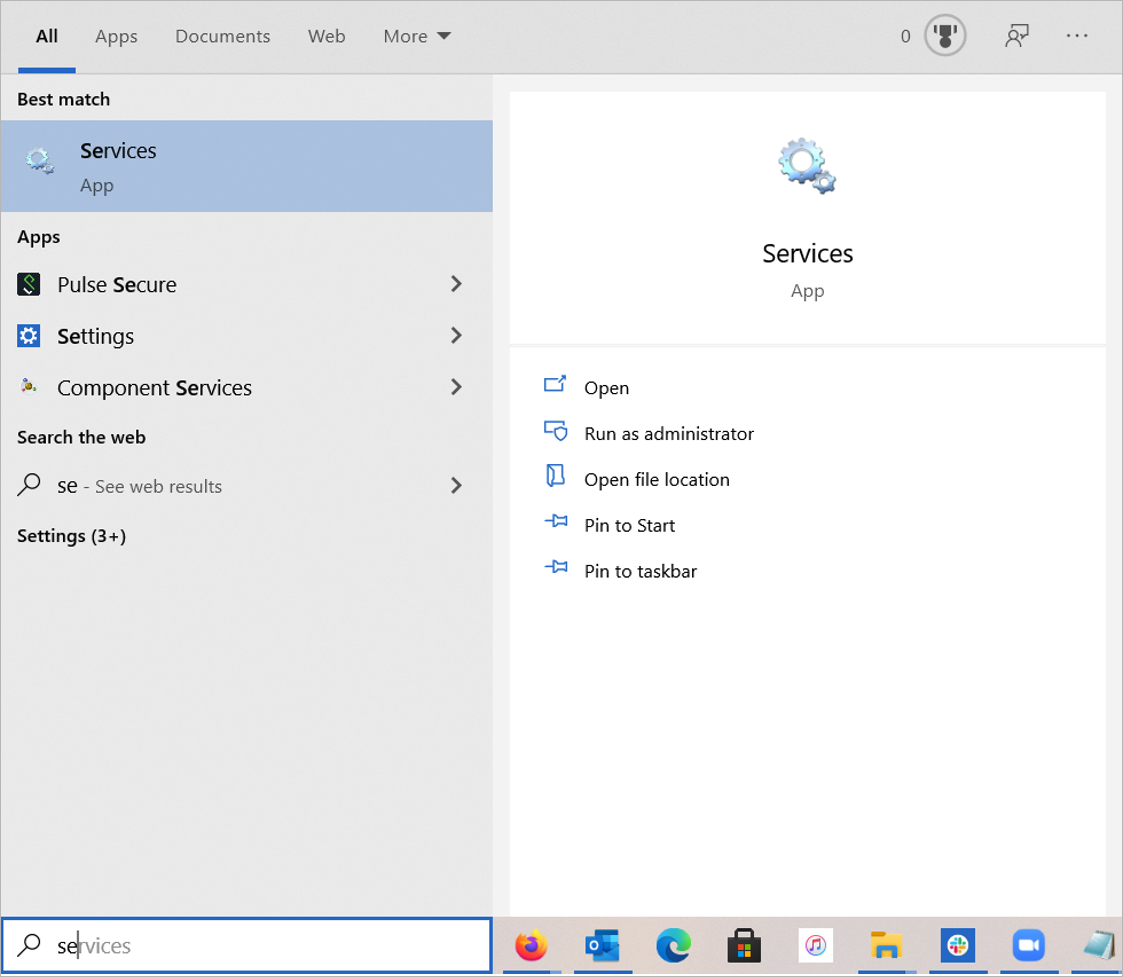

Elasticsearch Installation

🔴 Configure yum repository for ES v8:

vi /etc/yum.repos.d/elasticsearch.repo

🔴 Add the following to the file and save:

[elasticsearch]

name=Elasticsearch repository for 8.x packages

baseurl=https://artifacts.elastic.co/packages/8.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=0

autorefresh=1

type=rpm-md

🔴 Install the latest ES v8 package:

yum -y install --enablerepo=elasticsearch elasticsearch

⚠️ Elasticsearch v8 should be installed at this point.

Elasticsearch Initial Configuration

Let's perform some basic configurations to ensure our single-node ES cluster is up and running, and ready for integration with Diskover.

🔴 ES setting modifications:

vi /etc/elasticsearch/elasticsearch.yml

⚠️ Ensure the following properties are set and uncommented:

cluster.name: <name of your cluster> (Should be a distinctive name)

node.name: node-1 (Can be named anything, but should be distinctive)

path.data: /var/lib/elasticsearch (or some other custom ES data directory)

path.logs: /var/log/elasticsearch (or some other custom ES logging directory)

bootstrap.memory_lock: true (lock RAM on startup)

network.host: 0.0.0.0 (binds ES to all available IP addresses)

discovery.seed_hosts: ["ES-IP"] (If you have other ES IPs part of the cluster, they need to be comma separated like so: ["ES IP 1", "ES IP 2", "ES IP 3"])

cluster.initial_master_nodes: ["node-1"] (Names need to be what you have named the nodes above)

xpack.security.enabled: false (disable security)

xpack.security.enrollment.enabled: false (disable security enrollment on first boot)

xpack.ml.enabled: false (disable machine learning functionality - not needed)

🔴 Configure Java JVM and memory lock for ES:

vi /etc/elasticsearch/jvm.options.d/jvm.options

🔴 Ensure the JVM args are uncommented and set to half of your available RAM:

-Xms8g

-Xmx8g

🔴 ES systemd service memory settings:

mkdir /etc/systemd/system/elasticsearch.service.d

vi /etc/systemd/system/elasticsearch.service.d/elasticsearch.conf

🔴 Add the following to the file and save:

[Service]

LimitMEMLOCK=infinity

LimitNPROC=4096

LimitNOFILE=65536

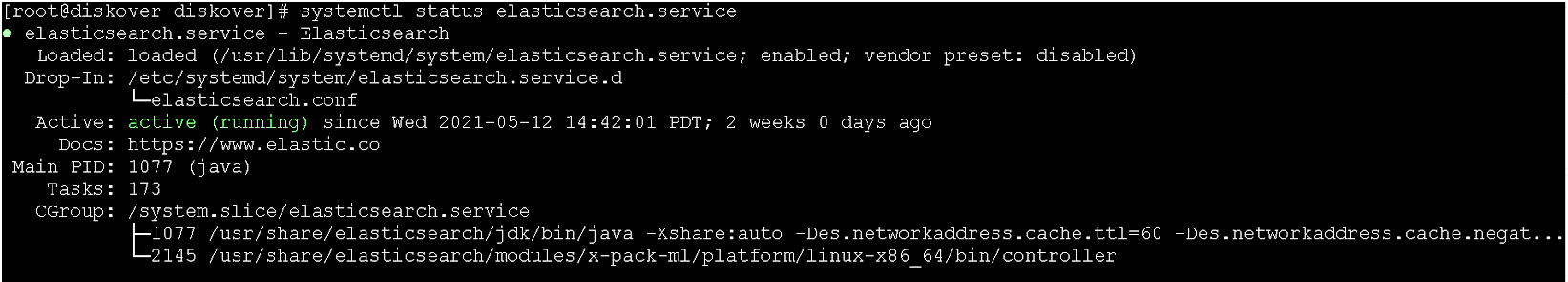

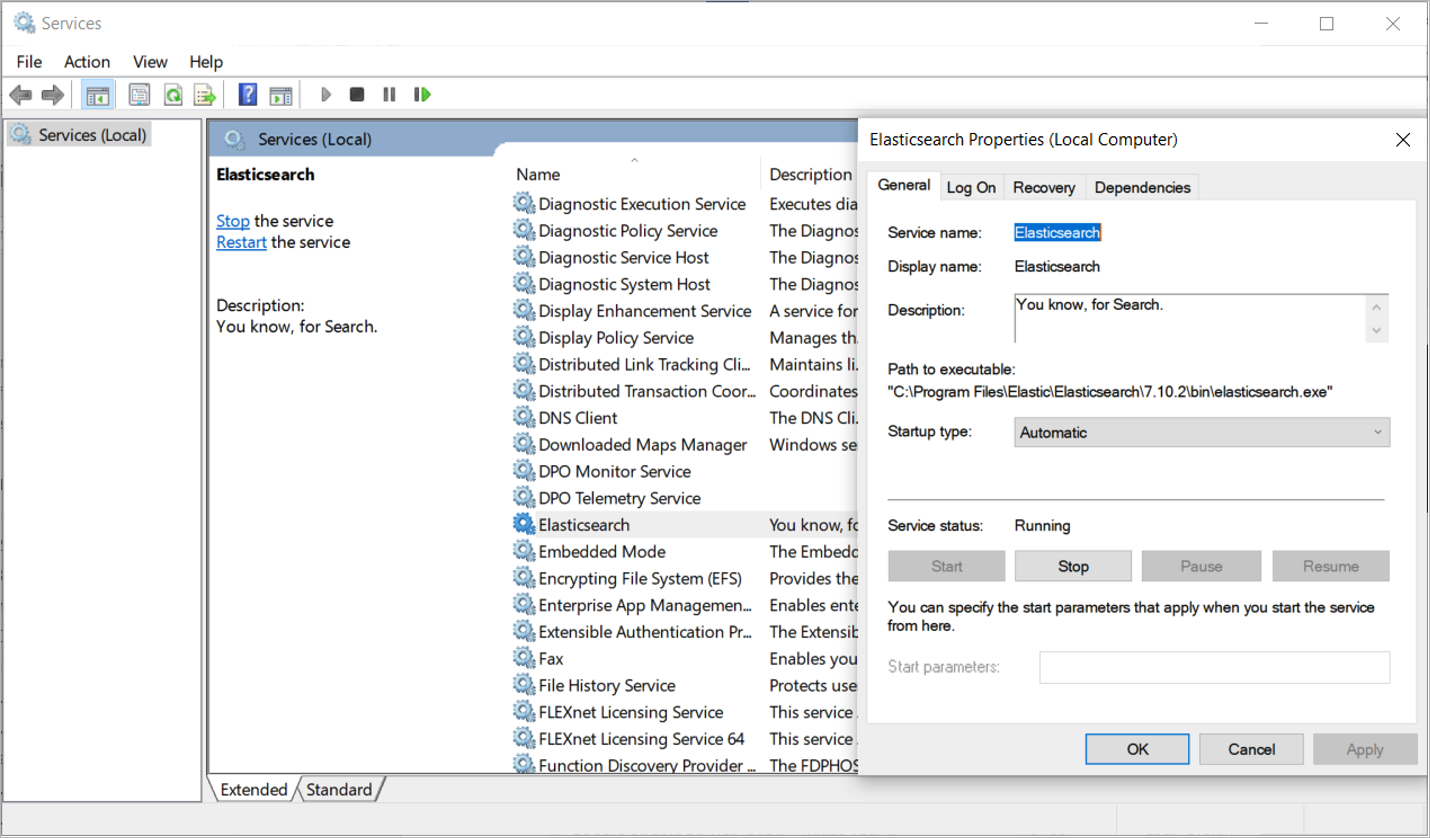

🔴 Start and enable the ES service:

systemctl enable elasticsearch

systemctl start elasticsearch

systemctl status elasticsearch

⚠️ If ES fails to lock the memory upon startup, then add the following to /etc/security/limits.conf:

elasticsearch soft memlock unlimited

elasticsearch hard memlock unlimited

Multiple Nodes Setup without SSL

If you have more than 1 node in your environment, redo all the Single Node Setup without SSL steps for each node/system.

Single Node Setup with SSL

This section will guide you through setting up an Elasticsearch cluster with a single node ensuring that SSL is enabled for secure communication.

🔴 Install Java v21:

dnf install java-21-openjdk

🔴 Install Elasticsearch v8:

dnf install https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-8.15.2-x86_64.rpm

🔴 When ES v8 finishes installing, you will need to grab the output password for the elastic user. The output will look like the following:

--------------------------- Security autoconfiguration information ------------------------------

Authentication and authorization are enabled.

TLS for the transport and HTTP layers is enabled and configured.

The generated password for the elastic built-in superuser is : y1DGG*eQFdnYPXJiPu6w

....

⚠️ If you need to reset the password, more info can be found here on that subject:

bin/elasticsearch-reset-password -u elastic

⚠️ Ensure the following is set inside the /etc/elasticsearch/elasticsearch.yml. By default, ES v8 should configure these settings automatically, but in case it doesn’t, you may need to set them manually:

🔴 Enable security features:

xpack.security.enabled: true

xpack.ml.enabled: false

xpack.security.enrollment.enabled: true

🔴 Enable encryption for HTTP API client connections, such as Kibana, Logstash, and Agents:

xpack.security.http.ssl:

enabled: true

keystore.path: certs/http.p12

🔴 Enable encryption and mutual authentication between cluster nodes:

xpack.security.transport.ssl:

enabled: true

verification_mode: certificate

keystore.path: certs/transport.p12

truststore.path: certs/transport.p12

🔴 Create a new cluster with only the current node. Additional nodes can still join the cluster later:

cluster.initial_master_nodes: ["diskover-1"]

🔴 Allow HTTP API connections from anywhere. Connections are encrypted and require user authentication:

http.host: 0.0.0.0

🔴 Allow other nodes to join the cluster from anywhere. Connections are encrypted and mutually authenticated:

transport.host: 0.0.0.0

⚠️ Be sure to comment cluster.initial_master_nodes after you have bootstrapped ES for the first time.

🔴 Verify your certs live in /etc/elasticsearch/certs/, you should have the following:

-rw-r----- 1 elasticsearch elasticsearch 1915 Oct 10 18:10 http_ca.crt

-rw-r----- 1 elasticsearch elasticsearch 10061 Oct 10 18:10 http.p12

-rw-r----- 1 elasticsearch elasticsearch 5822 Oct 10 18:10 transport.p12

🔴 Chown the /etc/elasticsearch/ directory recursively if not already done:

chown -R elasticsearch.elasticsearch /etc/elasticsearch/

🔴 Start Elasticsearch

🔴 Curl the cluster:

curl -u elastic:password https://IP or hostname:9200/_cluster/health?pretty --cacert /etc/elasticsearch/certs/http_ca.crt

Multiple Nodes Setup with SSL

This section will guide you through setting up an Elasticsearch cluster with multiple nodes ensuring that SSL is enabled for secure communication.

Prerequisites

🔴 A minimum of 3 systems, one for each ES node.

🔴 All nodes must be able to communicate with each other. The best way to test this is to install ES on the nodes, start the services, and try to telnet to each of the host:

telnet <es-ip> 9200

🔴 If this is successful, you should see the following:

[root@es1 ~]# telnet 192.168.64.19 9200

Trying 192.168.64.19...

Connected to 192.168.64.19.

Escape character is '^]'.

⚠️ If you see Connection Refused, you should check to see if SELinux and Firewalld are respectively disabled and off.

⚠️ The instructions below are for new clusters, go to Onboarding New Nodes Containing Existing Data if you are onboarding new nodes to an existing cluster.

Set up Node 1

🔴 Install Java v21:

sudo dnf install -y java-21-openjdk

🔴 Install Elasticsearch v8:

sudo dnf install -y https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-8.15.2-x86_64.rpm

🔴 Configure the JVM for Elastic vi /etc/elasticsearch/jvm.options.d/jvm.options:

-Xms8g

-Xmx8g

⚠️ You should never set the memory to more than half of what is configured for your system!

🔴 Make the directory for the custom ES systemd settings:

mkdir /etc/systemd/system/elasticsearch.service.d

🔴 Create the service config file vi /etc/systemd/system/elasticsearch.service.d/elasticsearch.conf:

[Service]

LimitMEMLOCK=infinity

LimitNPROC=4096

LimitNOFILE=65536

🔴 Change the Elastic configs to set the node and cluster name, network configs, etc.:

vi /etc/elasticsearch/elasticsearch.yml:

| Field | Description |

|---|---|

| cluster.name | It should include diskover in the name to make it easily distinguishable for the customer, for example: diskover-es |

| node.name | It can be named anything, but should include a number to identify the node, for example: node-1 |

| path.data | Set this to the desired storage location for your data. If a large amount of data is expected, it's recommended to use an external storage location. The default location is /var/lib/elasticsearch |

| path.logs | This defines the path where Elasticsearch logs will be stored. The default location is /var/log/elasticsearch |

| bootstrap.memory_lock | This should always be set to true. It will prevent Elasticsearch from trying to use the swap memory. |

| network.host | Set this to 0.0.0.0 |

| cluster.initial_master_nodes | IMPORTANT! This property will bootstrap your cluster. Without it, the service will not start up. You need to input the name of the node that you have for node.name, for example: cluster.initial_master_nodes: ["node-1"] |

| xpack.ml.enabled | This should be set to false to disable Machine Learning within ES. If you do not have this set to false, then Elasticsearch will fail upon startup |

🔴 Start the Elasticsearch service:

systemctl start elasticsearch

🔴 Create an enrollment token for the nodes you want to onboard to your cluster:

/usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s node.

⚠️ This last step will output a very long token, keep this token in a safe space as we’re going to need it soon. Note that you will need the = that is included in the value.

Set up Node 2 and 3

🔴 Run through the same pre-steps to set up Node 1, but don’t worry about the password that is generated.

🔴 Change the Elastic configs to set the node and cluster name, network configs, etc.:

vi /etc/elasticsearch/elasticsearch.yml:

| Field | Description |

|---|---|

| cluster.name | This name must match the Node 1 cluster name, otherwise, these nodes will not join the correct cluster, for example: diskover-es |

| node.name | Should be incremented from the last node name, for example: Node 1: node-1, Node 2: node-2, Node 3: node-3 |

| path.data | Set this to the desired storage location for your data. If a large amount of data is expected, it's recommended to use an external storage location. The default location is /var/lib/elasticsearch. IMPORTANT! This should match the other nodes' location for parity. |

| path.logs | This defines the path where Elasticsearch logs will be stored. The default location is /var/log/elasticsearch |

| bootstrap.memory_lock | This should always be set to true. It will prevent Elasticsearch from trying to use the swap memory. |

| network.host | Set this to 0.0.0.0 |

| cluster.initial_master_nodes | Don’t worry about this property for now as we’re going to be joining a bootstrapped cluster |

| xpack.ml.enabled | This should be set to false to disable Machine Learning within ES. If you do not have this set to false, then Elasticsearch will fail upon startup |

⚠️ Do not start Elasticsearch yet!

🔴 Let's join Nodes 2 and 3 to the Node 1 cluster:

/usr/share/elasticsearch/bin/elasticsearch-reconfigure-node --enrollment-token "your token here"

🔴 Press Y to continue with the reconfiguration. This will remove the self-signed certs that ES generated when you installed it, remove all the previous settings from the keystore, etc. and place in the certs and password from Node 1, ensuring all nodes are using the same password as Node 1.

🔴 Start the Elasticsearch service:

systemctl start elasticsearch

Single Cluster Setup

Overview

While it’s not mandatory to set up a cluster, if you have 3 or more Elasticsearch nodes, setting up a cluster is highly recommended for ensuring high availability, reliability, load balancing, and fault tolerance. It’s the preferred setup for production environments.

This section will walk you through the steps to configure a cluster, enabling your nodes to work together efficiently and securely distribute data across the system.

Requirements

- Each ES node needs to be installed on its own system.

- All nodes must be able to communicate with each other. To test this, install Elasticsearch on the nodes, start the services, and use telnet to connect to each host.

telnet <es-ip> 9200

- If this is successful, you should see the following:

[root@es1 ~]# telnet 192.168.64.19 9200

Trying 192.168.64.19...

Connected to 192.168.64.19.

Escape character is '^]'.

- If you see Connection Refused, you should validate if SELinux and Firewalld are disabled and off, respectively.

Setup

🔴 Run DNF updates:

sudo dnf update -y

🔴 Install Java 8:

sudo dnf install -y java-21-openjdk

🔴 Install Elasticsearch 7:

sudo dnf install -y https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-8.15.2-x86_64.rpm

🔴 Configure the JVM for Elastic:

vi /etc/elasticsearch/jvm.options.d/jvm.options:

🔴 Set the memory heap size - memory allocation should never exceed half of your system's total configured memory:

-Xms8g

-Xmx8g

🔴 Set up the Elastic config:

vi /etc/elasticsearch/elasticsearch.yml:

| Field | Description |

|---|---|

| cluster.name | It should include diskover in the name to make it easily distinguishable for the customer, for example: diskover-es |

| node.name | It can be named anything, but should include a number to identify the node, for example: diskover-node-1 |

| path.data | Set this to the desired storage location for your data. If a large amount of data is expected, it's recommended to use an external storage location. The default location is /var/lib/elasticsearch |

| path.logs | This defines the path where Elasticsearch logs will be stored. The default location is /var/log/elasticsearch |

| bootstrap.memory_lock | This should always be set to true. It will prevent Elasticsearch from trying to use the swap memory. |

| network.host | This should be set to the IP address of the host where you're configuring Elasticsearch. |

| discovery.seed_hosts | IMPORTANT! You need to enter the IP addresses of each Elasticsearch node that will be part of the cluster, for example:discovery.seed_hosts: ["192.168.64.18", "192.168.64.19", "192.168.64.20"] |

| cluster.initial_master_nodes | IMPORTANT! You need to enter the name of each node for the node.name setting, for example:cluster.initial_master_nodes: ["diskover-node-1", "diskover-node-2", "diskover-node-3"] |

| xpack.ml.enabled | This should be set to false to disable the Machine Learning within ES. If you do not have this set to false, then Elasticsearch will fail upon startup. |

🔴 Make the directory for the custom ES systemd settings:

mkdir /etc/systemd/system/elasticsearch.service.d

🔴 Create the service config file:

vi /etc/systemd/system/elasticsearch.service.d/elasticsearch.conf:

[Service]

LimitMEMLOCK=infinity

LimitNPROC=4096

LimitNOFILE=65536

Start Elasticsearch Cluster

🔴 Reload the daemon on all ES nodes:

sudo systemctl daemon-reload

🔴 Start up Node 1 first:

sudo systemctl start elasticsearch

⚠️ You can watch the startup logs at /var/log/elasticsearch/

🔴 Once Node 1 is online, start Node 2, then once Node 2 is online, start Node 3.

Multiple Clusters Setup

In a multiple-cluster setup for Elasticsearch, you can run and manage multiple independent clusters, each with its own set of nodes and indices. This setup is typically used when you need to isolate data or workloads across different environments (such as production, testing, and development) or geographically distributed locations. Each cluster operates independently, and you can configure cross-cluster search or replication to share data or search across clusters as needed.

Please open a support ticket for assistance.

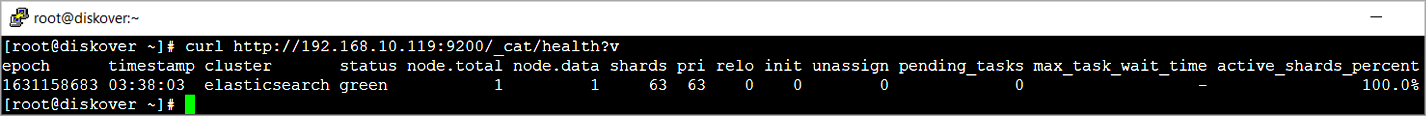

Elasticsearch Health Check without SSL

With the ES cluster installed and running, you can now run a simple curl command to check the health of your cluster.

🔴 Check the health of your Elasticsearch cluster.

⚠️ Replace the ${ESHOST} below with your ES node(s) IP address or hostname

Curl command if SSL is enabled on the cluster - the result will differ, of course, based on your own environment:

curl -XGET -u elastic:password https://${ESHOST}:9200/_cluster/health?pretty --cacert /etc/elasticsearch/certs/http_ca.crt

{

"cluster_name" : "elasticsearch",

"status" : "yellow",

"timed_out" : false,

"number_of_nodes" : 1,

"number_of_data_nodes" : 1,

"active_primary_shards" : 78,

"active_shards" : 78,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 1,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 98.73417721518987

}

Curl command if SSL is not enabled on the cluster - the result will differ, of course, based on your own environment:

curl http://${ESHOST}:9200/_cluster/health?pretty

{

"cluster_name" : "elasticsearch",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 1,

"number_of_data_nodes" : 1,

"active_primary_shards" : 0,

"active_shards" : 0,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}

Elasticsearch Health Check with SSL

🔴 From now 1, curl node 2 or 3:

[root@ip-10-0-3-121 bin]# curl -XGET -u "elastic:redacted" https://10.0.4.84:9200/_cluster/health?pretty --cacert /etc/elasticsearch/certs/http_ca.crt

{

"cluster_name" : "diskover-soldev",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 3,

"number_of_data_nodes" : 3,

"active_primary_shards" : 32,

"active_shards" : 34,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"unassigned_primary_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}

🔴 From now 2, curl node 2 or 3:

[root@ip-10-0-4-84 bin]# curl -XGET -u "elastic:redacted" https://10.0.3.121:9200/_cluster/health?pretty --cacert /etc/elasticsearch/certs/http_ca.crt

{

"cluster_name" : "diskover-soldev",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 3,

"number_of_data_nodes" : 3,

"active_primary_shards" : 32,

"active_shards" : 34,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}

Downsizing from 3 Nodes to 1 Node

🔴 Stop all 3 nodes.

🔴 On the node you want to preserve:

vi /etc/elasticsearch/elasticsearch.yml

🔴 Then add this:

discovery.type: single-node

🔴 Delete the nodes file and _state directory that contain local metadata from the previous distributed cluster setup:

rm -rf /path/to/dataDir/{nodes,_state}

🔴 Reset the Elasticsearch password, then press y to continue:

/usr/share/elasticsearch/bin/elasticsearch-reset-password -u elastic

🔴 Trt curling the health:

[root@ip-10-0-3-121 bin]# curl -XGET -u "elastic:redacted" https://10.0.3.121:9200/_cluster/health?pretty --cacert /etc/elasticsearch/certs/http_ca.crt

{

"cluster_name" : "diskover-soldev",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 1,

"number_of_data_nodes" : 1,

"active_primary_shards" : 13,

"active_shards" : 13,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}

Onboarding New Nodes Containing Existing Data

🔴 Node 1:

vi elasticsearch.yml

discovery.seed_hosts: ["Node 1 IP","Node 2 IP","Node 3 IP"]

cluster.initial_master_nodes: ["node-1","node-2","node-3"]

🔴 Restart node 1.

🔴 Add the following to node 2 and 3:

discovery.seed_hosts: ["Node 1 IP","Node 2 IP","Node 3 IP"]

🔴 Restart ES on these nodes one at a time.

🔴 Test curling the cluster health:

[root@ip-10-0-3-121 bin]# curl -XGET -u "elastic:redacted" https://10.0.4.84:9200/_cluster/health?pretty --cacert /etc/elasticsearch/certs/http_ca.crt

{

"cluster_name" : "diskover-soldev",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 3,

"number_of_data_nodes" : 3,

"active_primary_shards" : 32,

"active_shards" : 34,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"unassigned_primary_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}

🔴 From node 2, curl node 1 or 3:

[root@ip-10-0-4-84 bin]# curl -XGET -u "elastic:redacted" https://10.0.3.121:9200/_cluster/health?pretty --cacert /etc/elasticsearch/certs/http_ca.crt

{

"cluster_name" : "diskover-soldev",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 3,

"number_of_data_nodes" : 3,

"active_primary_shards" : 32,

"active_shards" : 34,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}

Diskover Scanners/Workers Installation

Overview

Diskover has a distributed task system where scanners/workers can be distributed among many resources. For each resource providing a task worker, services need to have a DiskoverD installed. The Task Panel will be covered after the installation and initial configuration of the main components.

Click here for the full screen view of this diagram.

-

This section will walk you through installing node workers for your Diskover environment.

-

During this process, you will need the latest Diskover 2.4x software.

-

Once all components are installed, you will be able to configure your scanning environment. We strongly recommend following the deployment order outlined in this guide.

Multiple Scanners/Workers Environment

If your environment includes multiple scanners, repeat the process in this chapter for each one of your workers. Once you have the zip file, you can SCP it to all machines that are designated to be a Diskover Worker.

🔴 On-prem | Will scp the file to the root user's home directory:

scp <path to diskover.zip> root@ipAddress:~/

🔴 AWS | Will scp the file to the user's home directory. Example using Rocky:

scp -i <path to PEM file> <path to diskover.zip> rocky@bastion-IP:~/

⚠️ Note that the user will differ depending on your OS. It is best to consult your AWS EC2 Console to get the exact user to connect to the bastion. Generally, these are the users for the following OS:

| OS | User |

|---|---|

| Rocky Linux | rocky |

| Centos 7 or 8 | centos |

| RHEL or Amazon Linux | ec2-user |

Linux Scanners/Workers

Python Installation

This section covers installing Python v3.12 and configuring it as the main Python 3 executable. Alternatively, use a PyEnv Python Environments. Additionally, some steps here, such as symlinking to the main Python 3 executable, might not be advisable if this system is used for other Python-based programs.

🔴 Install Python:

yum -y install python3.12 python3.12-devel gcc

unlink /usr/bin/python3

ln -s /usr/bin/python3.12 /usr/bin/python3

which python3

-- /usr/bin/python3

python3 -V

-- Python 3.11.11

🔴 Install PIP:

python3 -m ensurepip

python3 -m pip install --upgrade pip

Diskover Scanner Installation

🔴 Extract your zip archive:

unzip diskover-2.4.0.zip

🔴 Copy the Diskover folder:

cd diskover-2.4.0/

cp -a diskover /opt/

🔴 Install Python packages:

cd /opt/diskover

python3 -m pip install -r requirements.txt; python3 -m pip install -r requirements-aws.txt

🔴 Create diskoverd (Diskover Daemons) log directory:

mkdir -p /var/log/diskover

🔴 Create a diskoverd configuration file, allowing us to connect the worker to the Diskover-Web API Server:

mkdir -p /root/.config/diskoverd

cp /opt/diskover/configs_sample/diskoverd/config.yaml /root/.config/diskoverd/

🔴 Set the API URL for Diskover-Web:

vi /root/.config/diskoverd/config.yaml

🔴 Edit the apiurl property. You will need to replace the ${WEBHOST} below with your web nodes IP address or hostname:

apiurl: http://${WEBHOST}:8000/api.php

DiskoverD Task Worker Daemon

Now that your first worker node is installed and configured, let’s daemonize this service with systemd.

🔴 Create systemd service file:

vi /etc/systemd/system/diskoverd.service

🔴 Add the following to the file and don't forget to save:

[Unit]

Description=diskoverd task worker daemon

After=network.target

[Service]

Type=simple

User=root

WorkingDirectory=/opt/diskover/

ExecStart=/usr/bin/python3 /opt/diskover/diskoverd.py -n worker-%H

Restart=always

[Install]

WantedBy=multi-user.target

🔴 Set permissions and enable the service:

chmod 644 /etc/systemd/system/diskoverd.service

systemctl daemon-reload

systemctl enable diskoverd

⚠️ Please proceed to the next sections, as you will be unable to start the diskoverd worker service until your API server and license are installed.

Enable SSL for Task Workers

🔴 Copy the http_ca.crt to the Worker(s) server(s) and place into /etc/pki/ca-trust/source/anchors/http_ca.crt

🔴 Run the following command:

sudo update-ca-trust ; mkdir /opt/diskover/elasticsearch-certs/ ; cp http_ca.crt /opt/diskover/elasticsearch-certs/

🔴 Navigate to DiskoverAdmin → Web → Elasticsearch:

- Input your Elasticsearch IPs, and Elastic user + password.

- For the SSL certificate path, you need to put the full path of where the certificate is held on the Web, including the name of the cert: `/opt/diskover/elasticsearch-certs/http_ca.cr`

- Hitting **Test** on this page will result in a failure as the call for this test is coming from the [Web server](#install_diskover_web), so long as you can start your Worker up, you’re good to go!

Mounting NFS Filesystems

In the example below, we will be mounting a volume called vol1 from the server nas01 into the directory called /nfs/vol1

🔴 Ensure the NFS client tools are installed:

dnf install -y rpcbind nfs-utils nfs4-acls-tools

🔴 Start the required NFS client services:

systemctl start rpcbind nfs-idmap

systemctl enable rpcbind nfs-idmap

🔴 Create the directory where we will mount the filesystem:

mkdir -p /nfs/vol1

🔴 Add an entry in the /etc/fstab configuration file to ensure the volume gets mounted on reboot:

nas01:/vol1 /nfs/vol1 nfs defaults 0 0

🔴 Mount the filesystem and display its capacity:

mount /nfs/vol1

df -h /nfs/vol1

⚠️ For detailed information about configuring NFS clients, consult the RedHat NFS client documentation.

Mounting CIFS Filesystems

In the example below, we will be mounting a volume called vol1 from the server nas01 into the directory called /cifs/vol1

🔴 Ensure the CIFS packages are installed:

dnf install -y samba-client samba-common cifs-utils

🔴 Create the directory where we will mount the filesystem:

mkdir -p /cifs/vol1

🔴 Add an entry in the /etc/fstab configuration file to ensure the volume gets mounted on reboot. In the example below, change the username, password, and domain to match your environment.

systemctl start rpcbind nfs-idmap

systemctl enable rpcbind nfs-idmap

mount /nfs/vol1

df -h /nfs/vol1

\\nas01\vol1 /cifs/vol1 cifs username=winuser,password=winpassword, ˓→domain=windomain,vers=2.0 0 0

🔴 Mount the filesystem and display its capacity:

mount /cifs/vol1

df -h /cifs/vol1

⚠️ For additional information about configuring CIFS clients, visit [CentOS tips for mounting Windows shares](https://wiki.centos.org/TipsAndTricks(2f)WindowsShares.html#:~:text=Mounting%20Windows%20(or%20other%20samba,are%20used%20in%20our%20examples.&text=Word%20of%20warning:%20the%20default%20behaviour%20for%20mount.).

Windows Scanners/Workers

Installation Requirements

Installer Build Directories

This installer requires an internet connection to install Python and the NSSM service. The Windows machine running the installer must have internet access. Currently, there’s no offline install option with this method. To install without internet access, you'd need to reverse-engineer the installer script and manually download and install Python and NSSM packages on the server.

Python on Windows

By default, Windows links the python.exe and python3.exe executables to the Microsoft Store. This means that if you try to run a command like python script.pyit might prompt you to install Python from the Store, even if you've already installed it manually. The installer installs Python manually, so you'll need to disable this setting. To do so, search for Manage App Execution Aliases in the Windows search bar, then find python3 and python, and set both to No or Off.

Build Directories

Installer Build Directories

The installer creates a temporary build directory under the user account that is running the installer, or under the user account authenticated as an admin to execute the installer, depending on the situation. This temporary directory will contain the Diskover build contents.

diskover-2.4.0.zip

requirements.txt

Once the installer finishes and/or when the installer has completed but the Finish button has not been chosen, you can access the contents of this directory if ever necessary. Sample build directory temp path C:\Users\Brandon Langley\AppData\Local\Temp\is-4MFN0.tmp

Diskover Build Directories

During the initial part of the wizard, a few directories are created to host the diskover build and necessary configuration files:

C:\Program Files\Diskover\

%APPDATA%\diskoverd

Sample Path : C:\Users\Brandon Langley\AppData\Roaming\

✏️ Note that this %APPDATA% string can be put in the search bar of the Windows file browser to go to the current users' application data directory. However, as mentioned above, the user running the installers and/or the user authenticating as admin to execute the installer will be the installer using the %APPDATA% path.

The issue here is if one user runs the installer, but then in the NSSM section, we tell another user to run the service. This means that this \diskoverd configuration directory and config file within will be in the wrong user path.

Python Installation

Python Installation

The DiskoverTaskWorker-2.4.0.exe installer will be installing Python3.12.0 on the Windows machine. The installation will do the following things :

- Download the

Python3.12.0.exeinstaller within theC:\Program Files\Diskover\folder - Execute the installer with the following flags:

/quiet→ to not display the Python installer UI while the DiskoverTaskWorker installer is running.InstallAllUsers=0→ to only install thisPython3.12.0version - for the user that is running the installer.PrependPath=1→ to add thePython3.12.0executable to the Windows class path - for the user that is running the installer.

PIP Packages

A standard process using PIP during installer execution now that Python is installed. The requirements.txt file used here is the latest from the master at the time of 2.3.x being released and has been tested against Python3.12.0:

- Ensure PIP

- Upgrade PIP

- Install

requirements.txt

Diskover Configuration

Given that a lot of the Diskover configurations are now in the database, the only actual configuration file is the %APPDATA%\diskoverd\config.yaml mentioned above. This contains the only input field into the DiskoverTaskWorker wizard : Diskover Web URL. This gives the worker the ability to register with the DiskoverAdmin service and fetch all of its other configurations.

NSSM

Install Process

The installer downloads NSSM Version 2.24 from the internet and places it within C:\Program Files\Diskover\. The zip file is extracted in that same directory, and then PowerShell commands are issued to add this directory to the classpath - for the user that is running the installer. This is the path: C:\Program Files\Diskover\nssm-2.24\nssm-2.24\win64\

Building the Diskover Service

Now that NSSM is installed and added to the classpath, the installer runs a few NSSM commands and creates a few files to bind Diskover to NSSM.

🔴 A batch file is created at C:\Program Files\Diskover\diskoverd-win-service.bat

🔴 The contents of this file are the standard startup for DiskoverD:

python "C:\Program Files\diskover\diskoverd.py" -n %COMPUTERNAME%

🔴 The installer executes this command to install the Diskover service in NSSM, nssm.exe installs diskover:

C:\Program Files\Diskover\diskoverd-win-service.bat

🔴 A few commands are executed to create service startup logging:

nssm.exe set diskover AppStdout "C:\Program Files\Diskover\logs\service-start-log"

nssm.exe set diskover AppStderr "C:\Program Files\Diskover\logs\service-start-error-log"

Manual Processes After Installation

NSSM

Once the installer is complete, you'll need to bind the NSSM Diskover service to a user account.

🔴 Get current user account:

whoami

🔴 Edit the diskover service:

nssm edit diskover

Once you run this command, you will go to the Log On tab and select This Account, then simply put the output of the whoami command and insert the login credentials below. Finally click Edit Service to save the configurations. This means that the user data input will be the one that executes the Diskover Task Worker service on this Windows Machine.

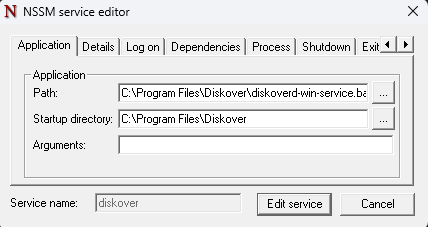

This is a working example of what your service details should look like in NSSM:

Service Startup | Logging

Now that we have that figured out, let’s start the service and tail the log.

🔴 Start diskover:

nssm start diskover

🔴 Service Startup Logs:

Get-Content -Path "C:\Program Files\Diskover\logs\service-start-error.log" -Tail 25 -Wait

🔴 Diskover Logs:

Get-Content -Path "C:\Program Files\Diskover\logs\diskoverd_subproc_$HOSTNAME.log" -Tail 25 -Wait

Troubleshooting | Nice to Know

Manual Scan

Once you have everything going, you can tail the log and run a manual scan:

python "C:\Program Files\Diskover\diskover.py" -i diskover-win-test "C:\Program Files\Diskover"

User Authentication Issues

When configuring NSSM to work with a user, we have seen issues where the Windows machine is bound to an AD domain, and the user attempts to go through the Log On portion of the NSSM setup and use that domain. It seems that the NSSM service (as configured) is not able to properly look up the SID values for the domain-bound users. Thus, there might be errors starting the Diskover Task Worker service.

When this occurs, you can choose to use the Local System Account. Given this occurs, it is possible that the diskoverd\config.yaml was installed in the %APPDATA% folder for the user that is running the installer. This will need to be move to the proper %APPDATA%folder for the Local System Account.

Diskover Admin - DiskoverD Configuration

If you are connecting this back to a single stack OVA that likely has the DiskoverD configuration for the ElasticSearch connection set to localhost you will need to change that to the OVA’s IPV4 address so that the Windows Task Worker can fetch that configuration for ES and be able to connect properly.

Mac Scanners/Workers

🚧 We're hard at work preparing these instructions. Meanwhile, click here to open a support ticket, and we'll gladly assist you with this step of your deployment.

Celery Installation

Overview

This Celery component will need to be installed on each of your scanner/worker nodes.

⚠️ Additional Celery documentation

Celery for Linux

🔴 Install Celery:

python3 -m pip install celery

which celery

-- /usr/local/bin/celery

🔴 Copy in the default Celery config file:

cp /opt/diskover/diskover_celery/etc/celery.conf /etc/

🔴 Create systemd service file:

cp /opt/diskover/diskover_celery/etc/celery.service /etc/systemd/system/

🔴 Create Celery log/run directories:

mkdir /var/log/celery; chmod 777 /var/log/celery

mkdir /var/run/celery; chmod 777 /var/run

🔴 Set permissions and enable the service:

chmod 644 /etc/systemd/system/celery.service

systemctl daemon-reload

systemctl enable celery

🔴 Run the Celery service manually to see if any errors pop up:

cd /opt/diskover/

celery -A diskover_celery.worker worker

⚠️ When you see something like this, you know your Celery service has come online:

2024-10-04 15:22:55,192 - celery.worker.consumer.connection - INFO - - Connected to amqp://diskover:**@rabbitmq-IP:5672//

2024-10-04 15:22:56,450 - celery.apps.worker - INFO - - celery@worker-node-hostname ready.

🔴 Start and enable the celery service:

systemctl start celery

systemctl enable celery

systemctl start celery

🔴 If for some reason the celery service doesn't start, check the celery logs:

cd /var/log/celery/

⚠️ The API server must be installed before starting the Celery service.

Celery for Windows

🚧 We're hard at work preparing these instructions. Meanwhile, click here to open a support ticket, and we'll gladly assist you with this step of your deployment.

Celery for Mac

🚧 We're hard at work preparing these instructions. Meanwhile, click here to open a support ticket, and we'll gladly assist you with this step of your deployment.

RabbitMQ and Amazon MQ Server Installation

Overview

RabbitMQ or Amazon MQ serves as the messaging bus/queue system that communicates with all Celery systems on your Diskover Worker nodes. We recommend installing this service on a dedicated standalone host.

Once all components are installed, you will be able to configure your messaging environment. We strongly recommend following the deployment order outlined in this guide.

⚠️ Additional guidelines for RabbitMQ management:

RabbitMQ for Linux

🔴 Configure yum repositories:

curl -s https://packagecloud.io/install/repositories/rabbitmq/rabbitmq-server/script.rpm.sh | bash

curl -s https://packagecloud.io/install/repositories/rabbitmq/erlang/script.rpm.sh | bash

🔴 Install rabbitmq-server and erlang. Note that installing these packages may require different steps depending on the Linux distribution:

yum -y install rabbitmq-server erlang

🔴 Ensure the service starts and enable it:

systemctl start rabbitmq-server.service

🔴 If the above step failed, make sure the hosts hostame is pingable:

systemctl status rabbitmq-server.service

systemctl enable rabbitmq-server.service

🔴 Configure RabbitMQ for use with Diskover:

rabbitmq-plugins enable rabbitmq_management

rabbitmqctl change_password guest darkdata (This will password not be used - it is only to secure the guest account)

rabbitmqctl add_user diskover darkdata (Feel free to choose your own username/password)

rabbitmqctl set_user_tags diskover administrator (If you changed users, set it properly here and replace 'diskover')

rabbitmqctl set_permissions -p / <user> ".*" ".*" ".*" (If you changed users, set it properly here and replace 'diskover')

🔴 Restart the service:

systemctl restart rabbitmq-server

systemctl status rabbitmq-server

⚠️ This completes the RabbitMQ configuration for Diskover. You should now be able to access the RabbitMQ Management Portal:

http://$rabbitMQHost:15672/#/

RabbitMQ for Windows

🚧 We're hard at work preparing these instructions. Meanwhile, click here to open a support ticket, and we'll gladly assist you with this step of your deployment.

RabbitMQ for Mac

🚧 We're hard at work preparing these instructions. Meanwhile, click here to open a support ticket, and we'll gladly assist you with this step of your deployment.

Amazon MQ

🚧 We're hard at work preparing these instructions. Meanwhile, click here to open a support ticket, and we'll gladly assist you with this step of your deployment.

Diskover-Web Installation

Overview

This section covers all the necessary steps to set up your Diskover-Web user interface, including the new DiskoverAdmin panel available with Diskover v2.4x.

Once all components are installed, you will be able to configure your Diskover-Web environment. We strongly recommend following the deployment order outlined in this guide.

NGINX and PHP Installation

Let's install NGINX and all the necessary PHP packages.

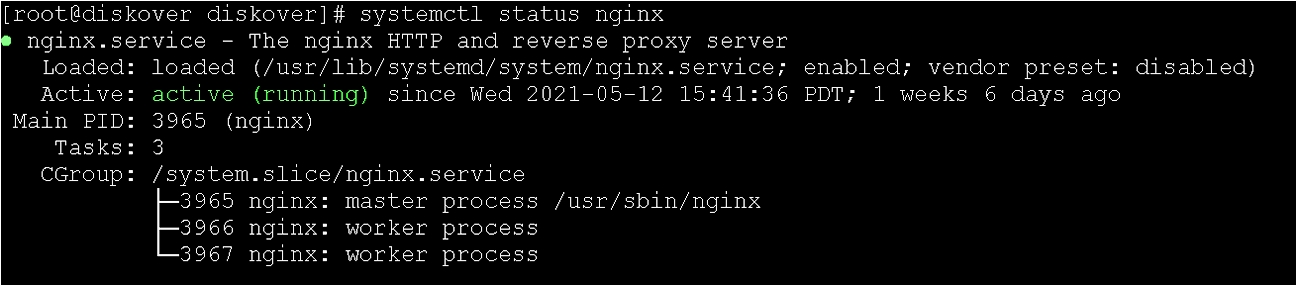

🔴 Install NGINX:

yum -y install nginx

🔴 Enable and start the NGINX service:

systemctl enable nginx

systemctl start nginx

systemctl status nginx

🔴 Enable epel and remi repositories. Change the 8s to 9s if using you're using RHEL/Rocky Linux 9:

yum -y install https://dl.fedoraproject.org/pub/epel/epel-release-latest-8.noarch.rpm

yum -y install https://rpms.remirepo.net/enterprise/remi-release-8.rpm

🔴 Install PHP 8 packages:

yum -y install php84 php84-php-common php84-php-fpm php84-php-opcache \

php84-php-cli php84-php-gd php84-php-mysqlnd php84-php-ldap php84-php-pecl-zip \

php84-php-xml php84-php-mbstring php84-php-json php84-php-sqlite3

🔴 Copy in php.ini:

find / -mount -name php.ini-production

-- /opt/remi/php84/root/usr/share/dovi /etc/php84-php-common/php.ini-productio

find / -mount -name php.ini

-- /etc/opt/remi/php84/php.ini

cp /opt/remi/php84/root/usr/share/doc/php84-php-common/php.ini-production /etc/opt/remi/php84/php.ini

⚠️ This command may differ depending on your PHP8 install directory. To find your PHP8 install directory:

php -i | grep 'Configuration File'

🔴 Edit php-fpm configuration:

vi /etc/opt/remi/php84/php-fpm.d/www.conf

⚠️ This command may differ depending on your PHP8 install directory. Please ensure the following properties are set and uncommented:

user = nginx

group = nginx

listen = /var/opt/remi/php84/run/php-fpm/www.sock (take note of this .sock location, you will need it later)

listen.owner = nginx

listen.group = nginx

;listen.acl_users = apache (ensure this is commented out with the ;)

🔴 PHP directories ownership:

chown -R root:nginx /var/opt/remi/php84/lib/php (this command may differ depending on your PHP8 install directory)

mkdir /var/run/php-fpm

chown -R nginx:nginx /var/run/php-fpm

🔴 Create systemd service file and save:

vi /etc/systemd/system/php-fpm.service

🔴 Add the following to the file and note that this ExecStart command may differ depending on your PHP8 install directory:

[Unit]

Description=PHP FastCGI process manager

After=local-fs.target network.target nginx.service

[Service]

PIDFile=/opt/php/php-fpm.pid

ExecStart=/opt/remi/php84/root/usr/sbin/php-fpm --fpm-config /etc/opt/remi/php84/php-fpm.conf --nodaemonize

Type=simple

[Install]

WantedBy=multi-user.target

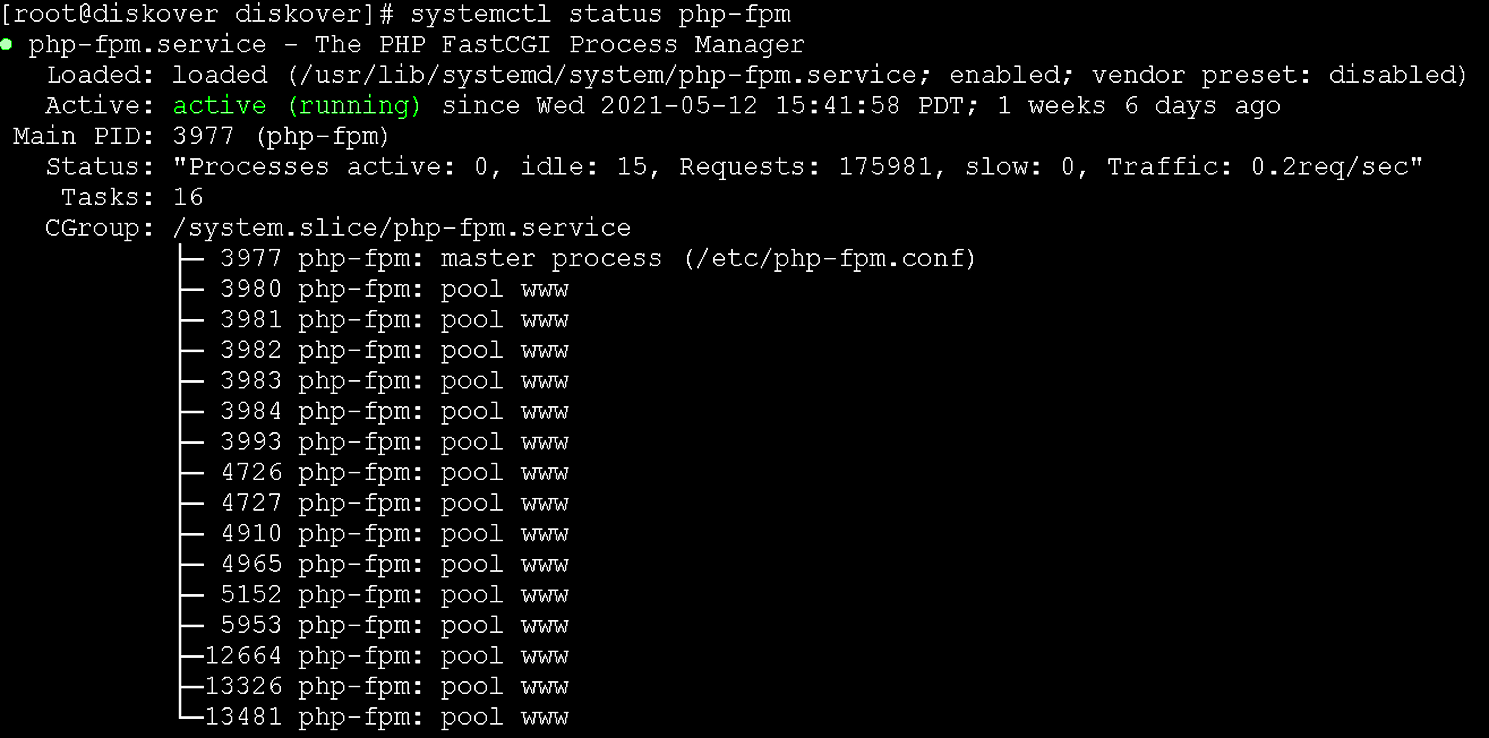

🔴 Set permissions, enable, and start the service:

chmod 644 /etc/systemd/system/php-fpm.service

systemctl daemon-reload

systemctl enable php-fpm

systemctl start php-fpm

systemctl status php-fpm

🔴 Build the NGINX configuration file:

vi /etc/nginx/conf.d/diskover-web.conf

🔴 Add the following to the file - replacing the value in fastcgi_pass with the location of your www.sock from the php configuration file a few steps up, and then save:

server {

listen 8000;

server_name diskover-web;

root /var/www/diskover-web/public;

index index.php index.html index.htm;

error_log /var/log/nginx/error.log;

access_log /var/log/nginx/access.log;

location / {

try_files $uri $uri/ /index.php?$args =404;

}

location ~ \.php(/|$) {

fastcgi_split_path_info ^(.+\.php)(/.+)$;

set $path_info $fastcgi_path_info;

fastcgi_param PATH_INFO $path_info;

try_files $fastcgi_script_name =404;

fastcgi_pass unix:/var/opt/remi/php84/run/php-fpm/www.sock;

#fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

include fastcgi_params;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi_params;

fastcgi_read_timeout 900;

fastcgi_buffers 16 16k;

fastcgi_buffer_size 32k;

}

}

Diskover-Web Installation

Let's install Diskover-Web now that we have our NGINX and PHP packages installed and configured. You need to ensure that you have the latest Diskover 2.4 zip archive. Once you have the zip file, you can SCP it to the machine that is designated for Diskover-Web.

🔴 On-prem | Will scp the file to the root user's home directory:

scp <path to diskover.zip> root@ipAddress:~/

🔴 AWS | Will scp the file to the user's home directory. Example using Rocky:

scp -i <path to PEM file> <path to diskover.zip> rocky@bastion-IP:~/

⚠️ Note that the user will differ depending on your OS. It is best to consult your AWS EC2 Console to get the exact user to connect to the bastion. Generally, these are the users for the following OS:

| OS | User |

|---|---|

| Rocky Linux | rocky |

| Centos 7 or 8 | centos |

| RHEL or Amazon Linux | ec2-user |

🔴 Extract your zip archive:

unzip diskover-2.4.0.zip

🔴 Copy the diskover-web folder:

cd diskover-2.4.0/

cp -a diskover-web /var/www/

🔴 Copy the default sample reports:

cd /var/www/diskover-web/public

for f in *.txt.sample; do cp $f "${f%.*}"; done

chmod 660 *.txt

🔴 Copy the task panel defaults:

cd /var/www/diskover-web/public/tasks/

for f in *.json.sample; do cp $f "${f%.*}"; done

chmod 660 *.json

🔴 Set permissions for diskover-web:

chown -R nginx:nginx /var/www/diskover-web

🔴 Restart and check services health:

systemctl restart nginx php-fpm; systemctl status nginx php-fpm

⚠️ Occasionally you will see this error Another FPM instance seems to already listen on /var/opt/remi/php84/run/php-fpm/www.sock, if you do:

rm /var/opt/remi/php84/run/php-fpm/www.sock

systemctl restart php-fpm; systemctl status php-fpm

Enable SSL for Diskover-Web

🔴 Copy the http_ca.crt to the Web server and place into:

/etc/pki/ca-trust/source/anchors/http_ca.crt

🔴 Run the following command:

sudo update-ca-trust

🔴 Edit the php.ini file so that we can have PHP use this cert location to communicate with ES vi /etc/opt/remi/php84/php.ini:

openssl.cafile=/etc/pki/tls/certs/ca-bundle.crt

openssl.capath=/etc/pki/tls/certs

🔴 Run the following commands:

mkdir /var/www/diskover-web/src/diskover/elasticsearch-certs/ ; cp /etc/pki/ca-trust/source/anchors/http_ca.crt /var/www/diskover-web/src/diskover/elasticsearch-certs/ ; chown -R nginx.nginx /var/www/diskover-web/src/diskover/elasticsearch-certs/

🔴 Navigate to DiskoverAdmin → Web → Elasticsearch:

- Input your Elasticsearch IPs, and Elastic user + password.

- For the SSL certificate path, you need to put the full path of where the certificate is held on the Web, including the name of the cert: `/var/www/diskover-web/src/diskover/elasticsearch-certs/http_ca.crt`

- Hit **Test** at the bottom to ensure Diskover can communicate with your cluster.

DiskoverAdmin Installation

Overview

Diskover-Web is nothing without its new Administrator! The DiskoverAdmin configuration management user interface will allow you to further configure your Diskover system once it’s up and running.

⚠️ Note that DiskoverAdmin must be installed on the same host as Diskover-Web.

Start Here

During this process, you will need the latest Diskover 2.4x zip archive. Note that this is subject to change to RPMs in the near future. Once you have the zip file, you can SCP it to the machine that is designated for DiskoverAdmin.

🔴 On-prem | Will scp the file to the root user's home directory:

scp <path to diskover.zip> root@ipAddress:~/

🔴 AWS | Will scp the file to the user's home directory. Example using Rocky:

scp -i <path to PEM file> <path to diskover.zip> rocky@bastion-IP:~/

⚠️ Note that the user will differ depending on your OS. It is best to consult your AWS EC2 Console to get the exact user to connect to the bastion. Generally, these are the users for the following OS:

| OS | User |

|---|---|

| Rocky Linux | rocky |

| Centos 7 or 8 | centos |

| RHEL or Amazon Linux | ec2-user |

Python Installation

Python should already be installed as instructed in the Diskover Scanners/Workers Installation section. Alternatively, you could use a PyEnv (Python Environments).

⚠️ Note that some steps here, such as symlinking to the main Python3 executable, might not be advisable if this system is used for other Python-based programs.

DiskoverAdmin Installation

🔴 Extract your zip archive:

unzip diskover-2.4.0.zip

🔴 Copy the diskover-admin folder:

cd diskover-2.4.0/

cp -a diskover-admin /var/www/

🔴 Adjust the file ownership:

chown -R nginx.nginx /var/www/diskover-admin/

🔴 PIP installs:

cd /var/www/diskover-admin/etc/

python3 -m pip install -r requirements.txt

🔴 NGINX configuration - Copy the location block output of this cat command:

cat /var/www/diskover-admin/etc/diskover-web.conf

🔴 Paste the contents here. This needs to be in its own location block:

vi /etc/nginx/conf.d/diskover-web.conf

🔴 Set NGINX proxy params:

cp /var/www/diskover-admin/etc/proxy_params /etc/nginx/

🔴 Restart and check services health:

systemctl restart nginx php-fpm; systemctl status nginx php-fpm

Daemons

Now that DsikoverAdmin is installed and configured, let’s daemonize this service with systemd.

🔴 Copy default service file:

cp /var/www/diskover-admin/etc/diskover-admin.service /etc/systemd/system/

🔴 Start the Diskover-Admin service:

systemctl daemon-reload

systemctl enable diskover-admin

systemctl start diskover-admin

systemctl status diskover-admin

⚠️ A happy status looks like this:

Starting Uvicorn instance to serve /diskover-admin...

INFO: Uvicorn running on unix socket /var/www/diskover-admin/run/diskover-admin.sock (Press CTRL+C to quit)

INFO: Started parent process [10559]

Started Uvicorn instance to serve /diskover-admin.

Initial Configuration

Overview

This section describes the foundational setup to get things running without much complexity. Keep in mind that you can always go back and adjust any of your settings at any time.

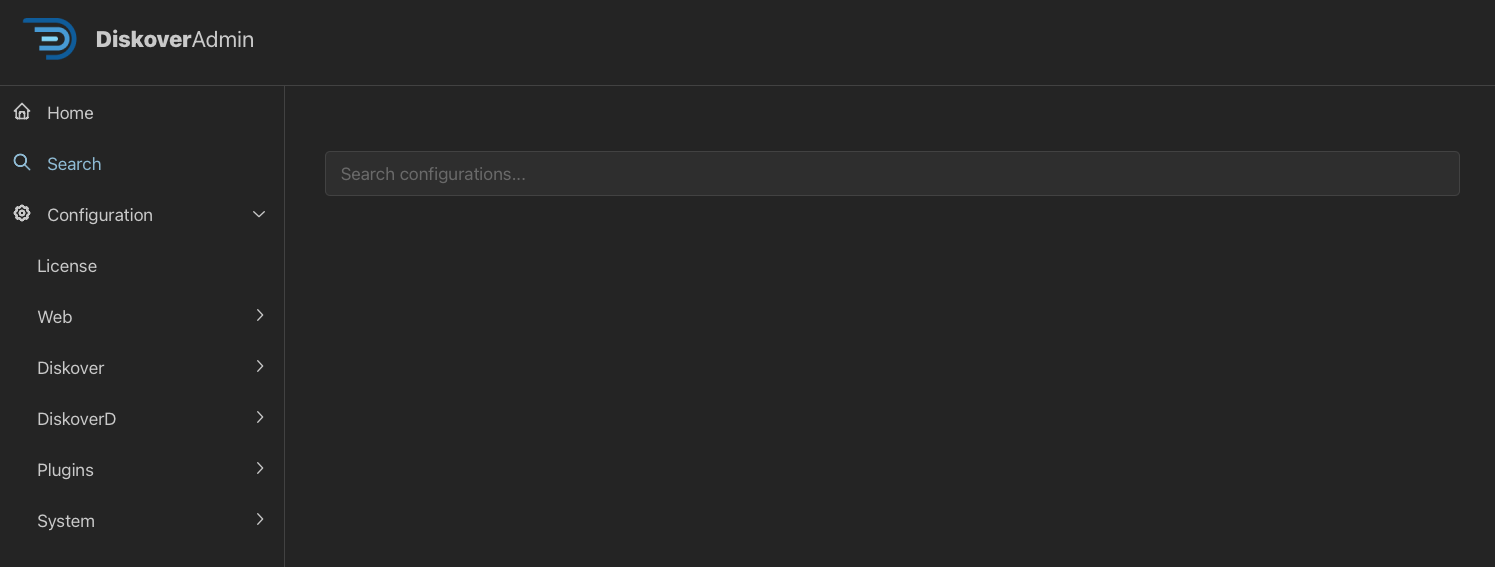

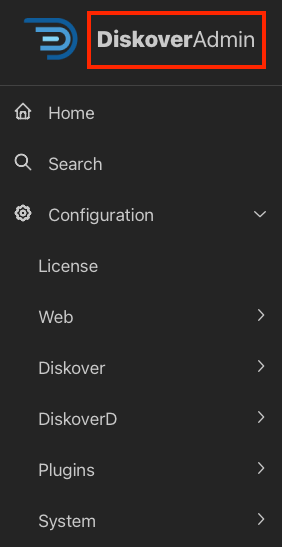

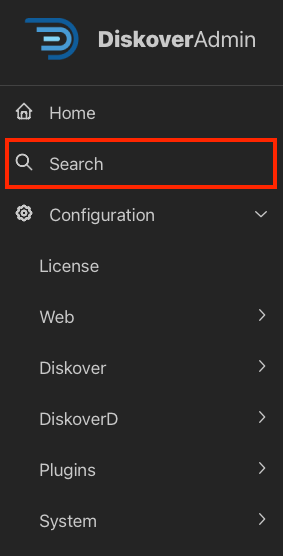

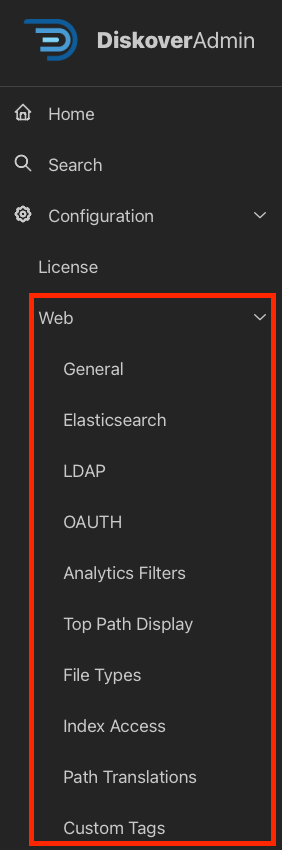

Access DiskoverAdmin

You have reached THE big moment. Paste this link in a browser to access DiskoverAdmin and complete the configuration process for all Diskover's components and plugins.

http://diskover-web:8000/diskover_admin/config/

DiskoverAdmin Wizard

The DiskoverAdmin service allows for the fine-tuning of Diskover's core components and plugins. The setup wizard will guide you through the first part of the initial configuration of the DiskoverAdmin services, which can be further customized later.

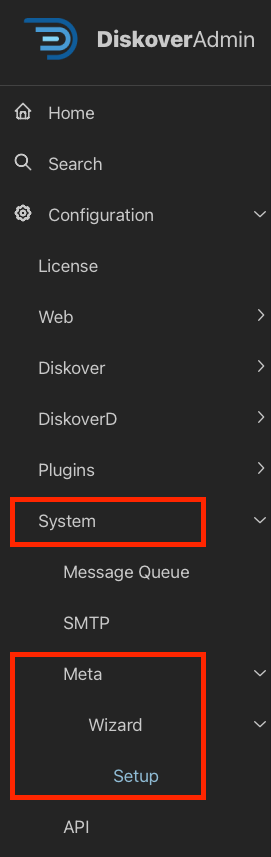

🔴 Access the Wizard by selecting System → Meta → Wizard → Setup:

Elasticsearch Connection Configuration for Diskover-Web

⚠️ Note that Diskover-Web and the scanners can point to two different Elasticsearch hosts, hence the next steps.

🔴 Input the IP/AWS endpoint/Elastic Cloud endpoint where your Elasticsearch is running in the host field. If you have a clustered ES setup, click + Add Item to list your other IPs/endpoints.

🔴 Keep the port at 9200 unless your cluster runs on another port.

🔴 Enable HTTPS if your Elasticsearch uses an encrypted protocol. Otherwise, keep it unchecked for HTTP.

🔴 If you select HTTPS, enter your Elasticsearch username & password.

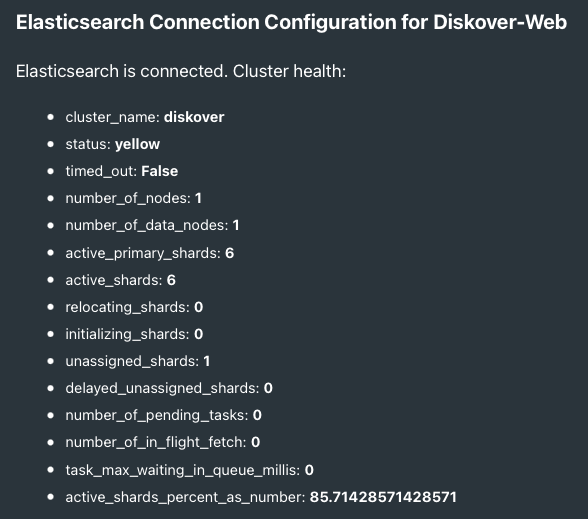

🔴 Click Test to see if Diskover can connect to your Elasticsearch system. The page will refresh and output the health of your cluster at the top of the page (number of shards, nodes, etc.):

🔴 If the test is successful, click Save & Continue, otherwise review the information you entered.

Elasticsearch Connection Configuration for Scanners

🔴 Copy connection settings from Diskover-Web?:

- If your Diskover-Web and scanners point to the same ES host, click Yes.

- If your Diskover-Web and scanners point to different ES hosts, click No, go through each field, and click:

- Test to test your connection.

- Save & Continue once done.

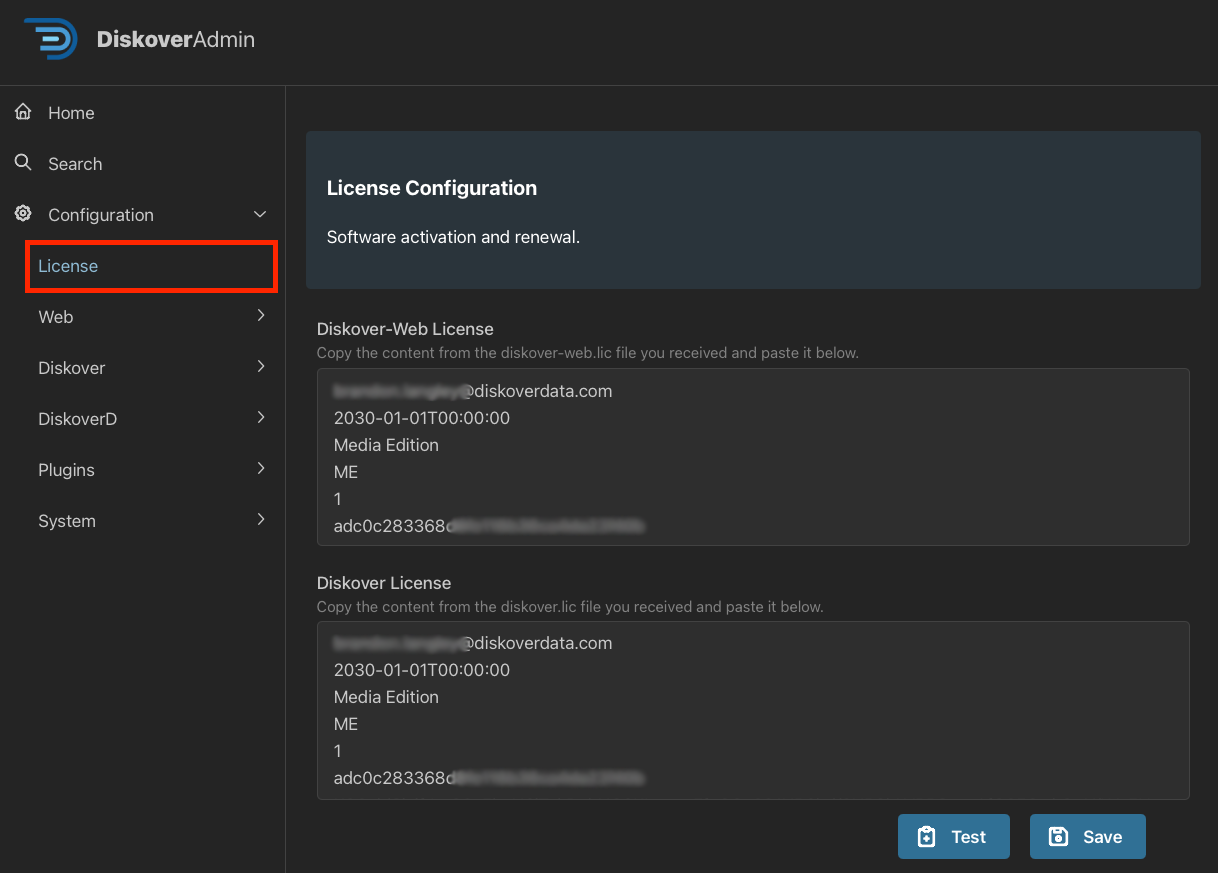

License

This is the point where you need to send your license request and the wizard partially automates this task for you. Note that if you skip this part for now, you can send a license request at any time.

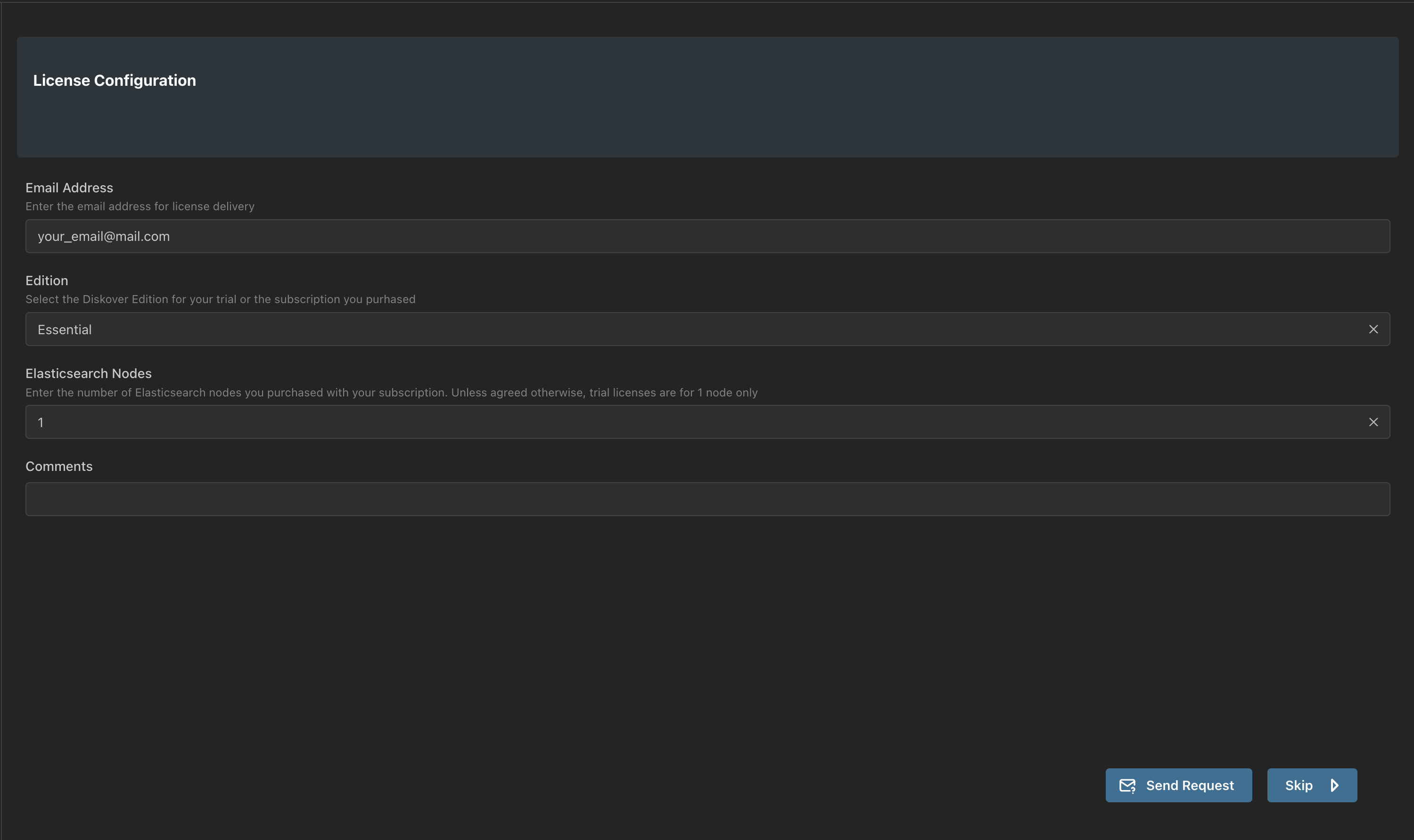

🔴 Click on the Request License button and fill out the required fields:

- Email Address: please use your corporate email.

- Edition: the solution you subscribed to or want to try for your POC.

- Elasticsearch Nodes: the number of nodes included in your subscription plan - POCs are for 1 node only.

- Comments: anything that can help us, like your company name.

✏️ Your license is be attached to your hardware ID, which will be automatically generated and sent to us during this process.

🔴 Click Send Request.

🔴 You should receive your license within 24 hours, usually much less than 24 hours or a little more if you send your request during a weekend. You have 2 choices at this point:

- Pause and wait to receive the license to continue.

- Click Skip and come back once you receive the license.

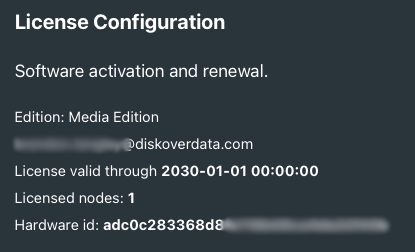

🔴 Once you receive the license, copy/paste the keys as instructed on the License Configuration page.

🔴 Click Test if you want to validate your license, example below, then click Save & Continue.

Time Zone

🔴 Using the dropdown list, select your Time Zone. More customization can be done later regarding time zones, click Save & Continue.

🔴 Click the box to enable your time zone selection, click Save & Continue.

Message Queue Configuration | RabbitMQ or Amazon MQ

This section is only needed if you are planning to use File Action plugins.

🔴 Follow the instructions on this page, click Test to check the connection, and then Save & Continue, or click Skip. You can configure or edit at any time.

⚠️ The basic setup using the wizard is not completed. CONGRATS!

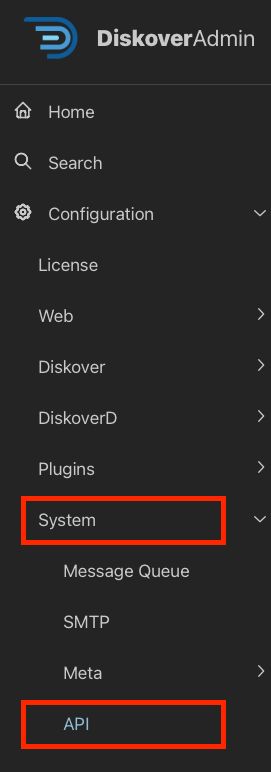

API

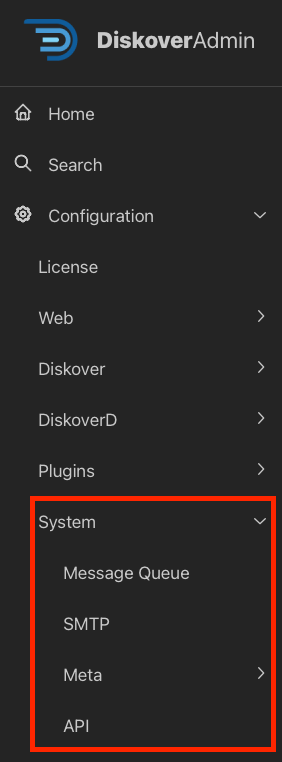

🔴 Now navigate to System → API.

🔴 The API Host needs to be the IP address where Diskover-Web is running.

🔴 Specify an API Port if different than the default of 8000.

Diskover Scanners/Workers

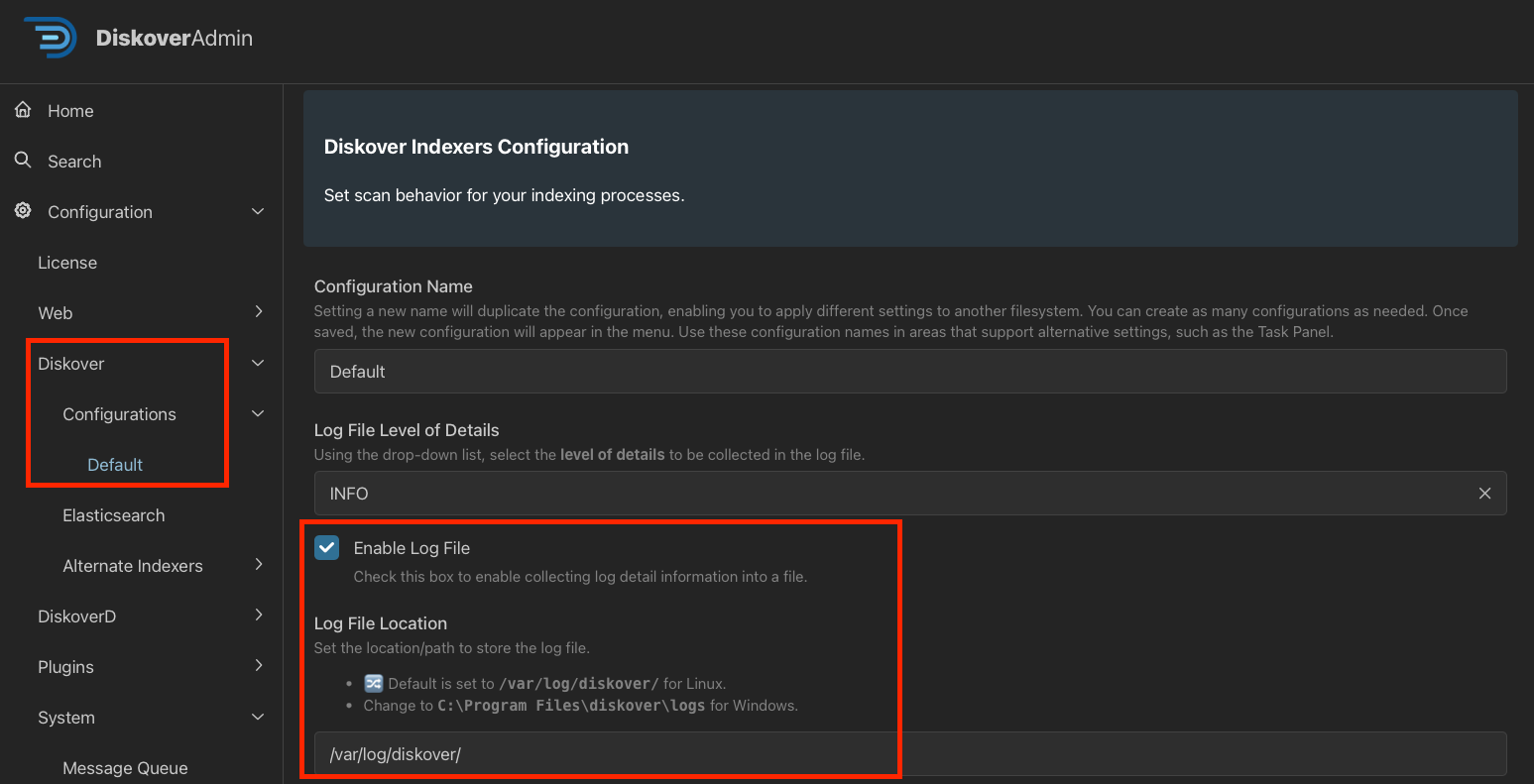

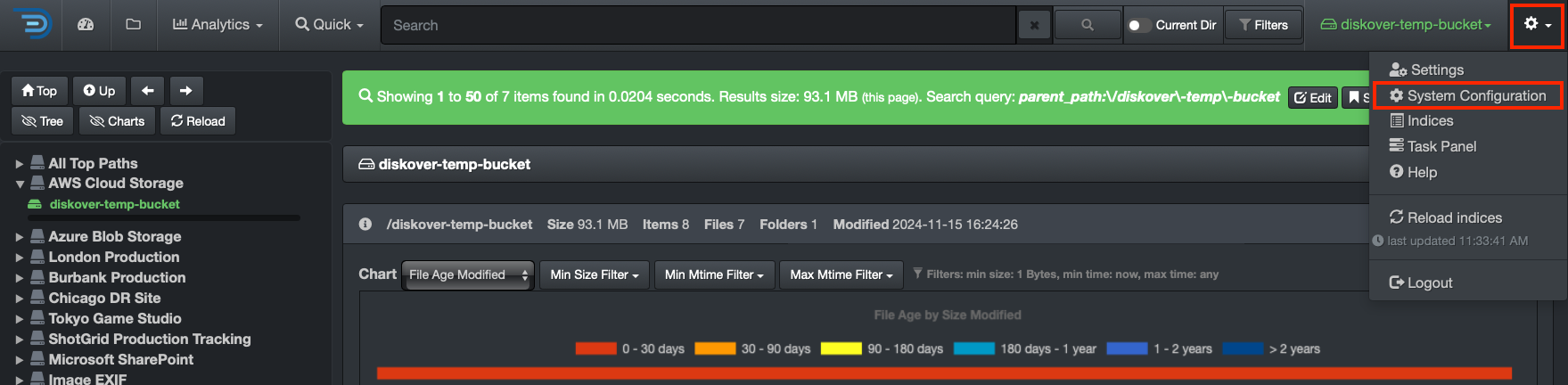

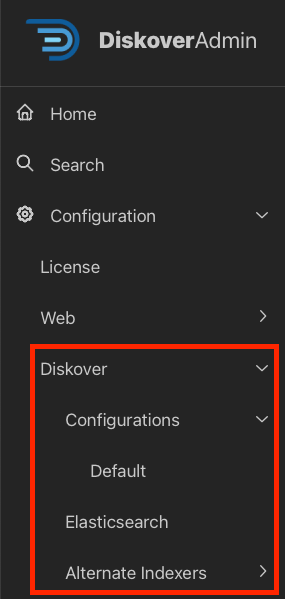

🔴 Navigate to Diskover → Configurations → Default.

🔴 Check Enable Log File and modify the Log File Location as needed:

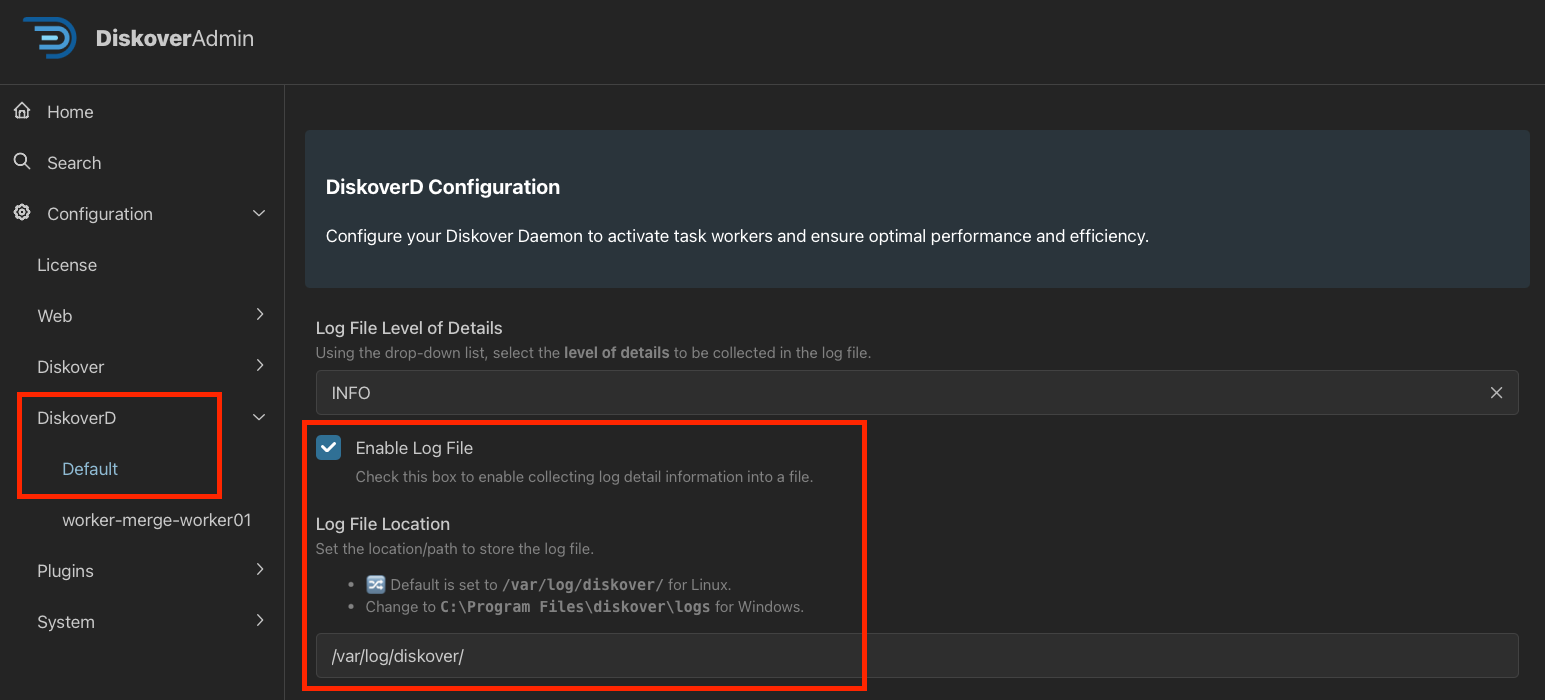

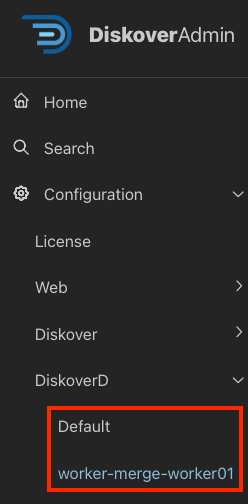

DiskoverD

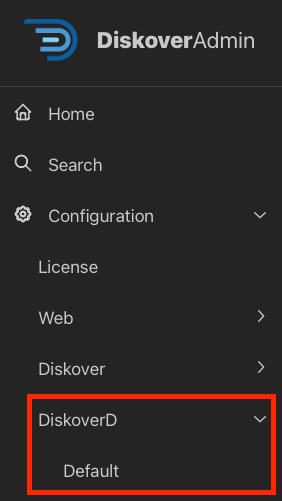

🔴 Navigate to DiskoverD → Default.

🔴 Check Enable Log File and modify the Log File Location as needed:

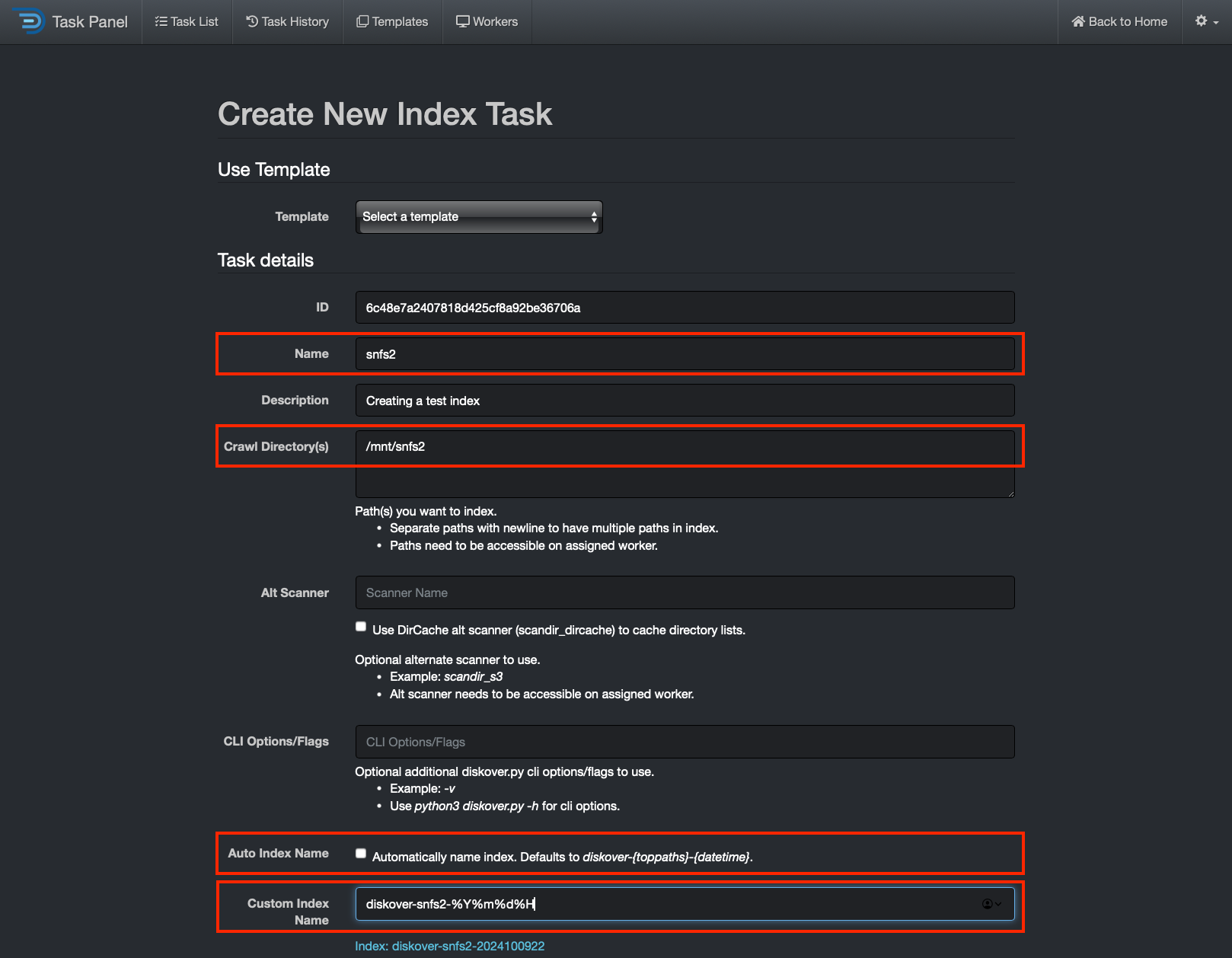

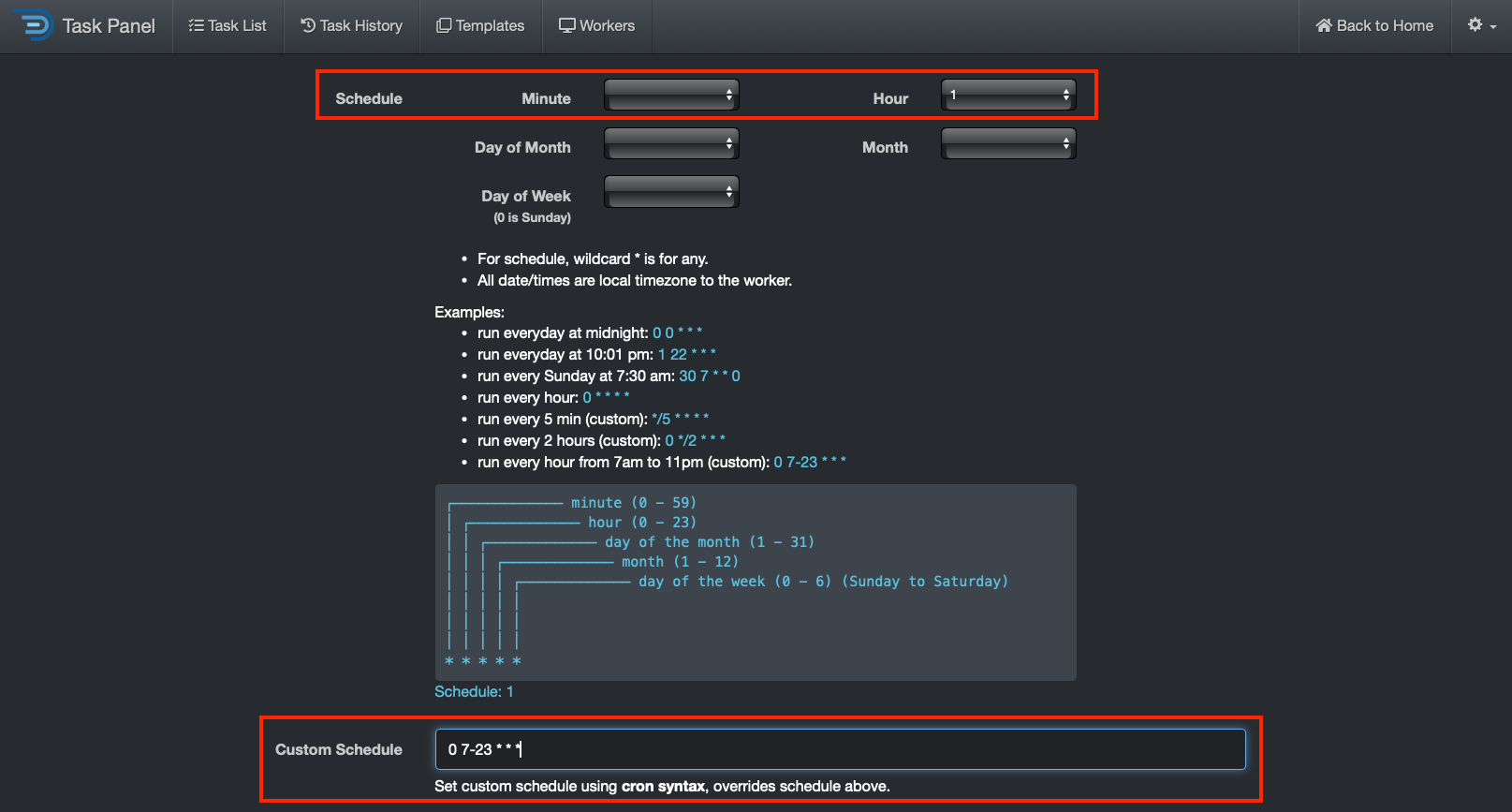

Schedule Your First Scan

You are now ready to schedule and then run your first scan! Go to the next section, Tasks Management via Task Panel, for the details.

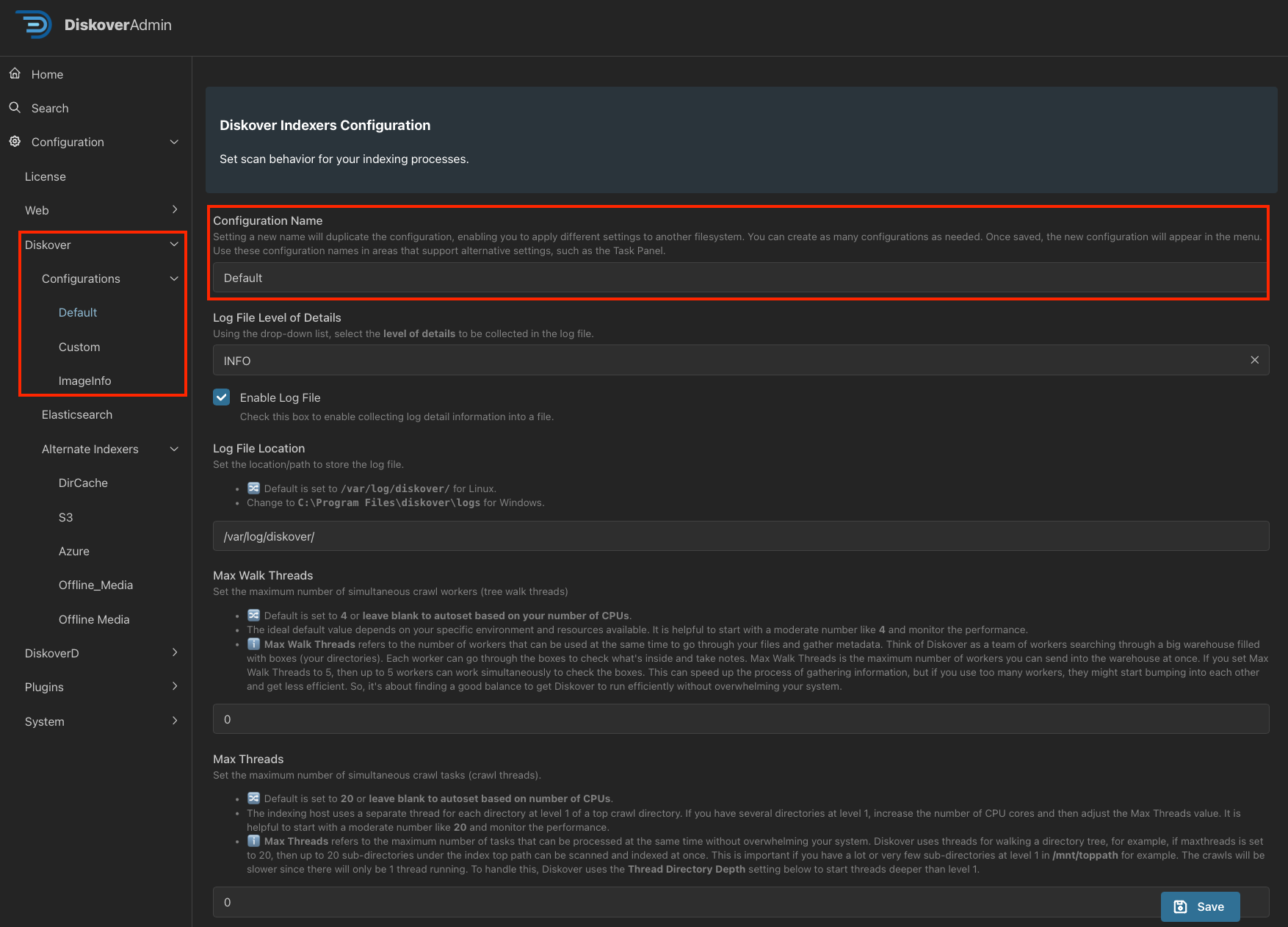

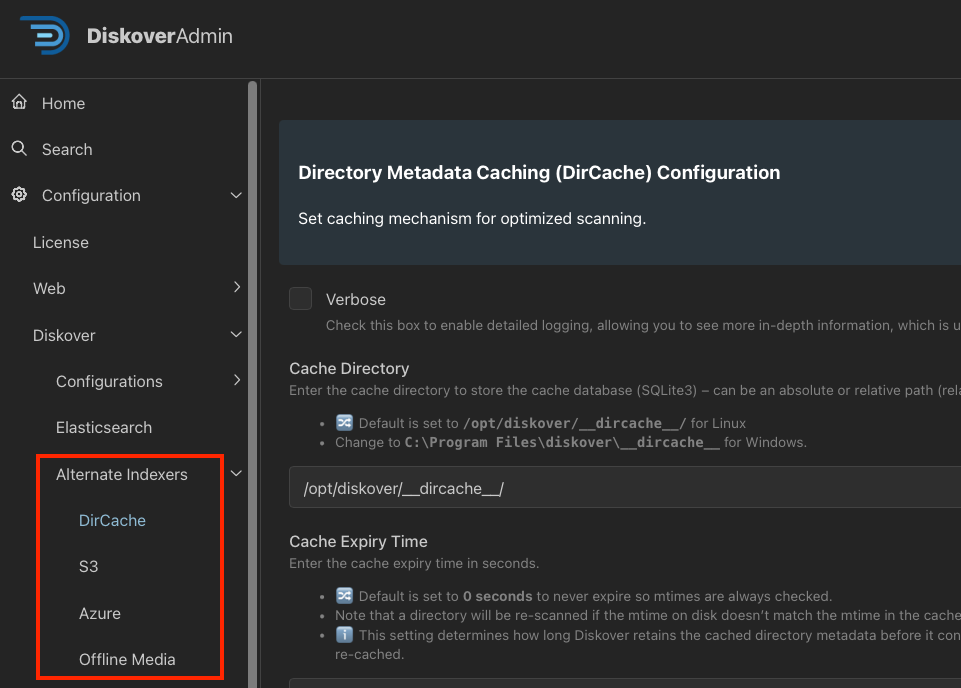

Create an Alternate Scanning Configuration

Overview

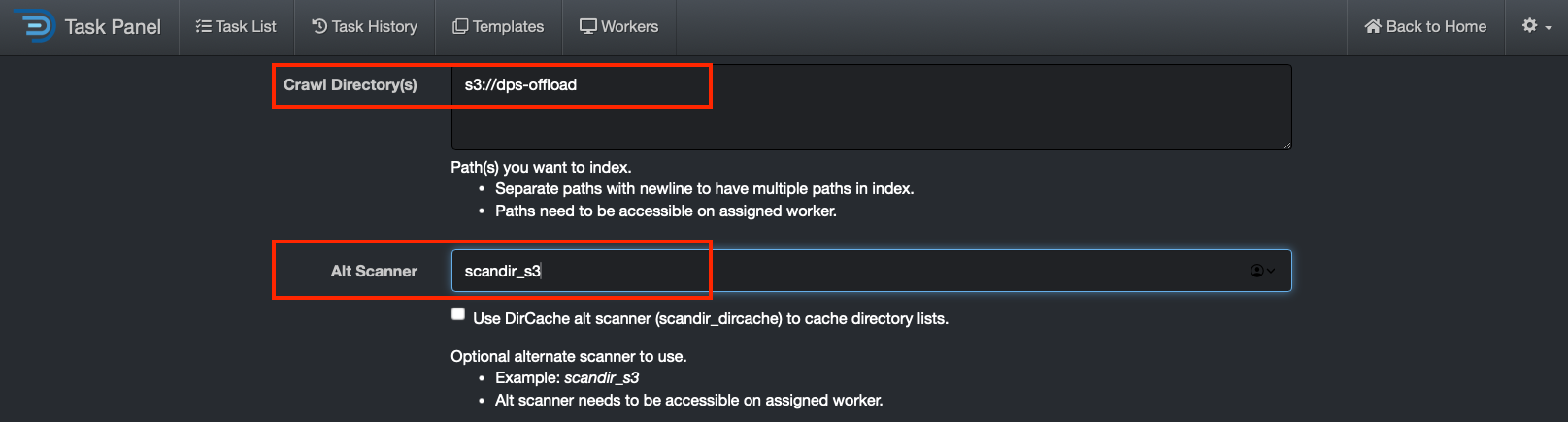

This section will walk you through how to set up an alternate scanning configuration that you can use in a task.

Alternate Config Setup

🔴 If you are in the main Diskover user interface, navigate to the DiskoverAdmin panel.

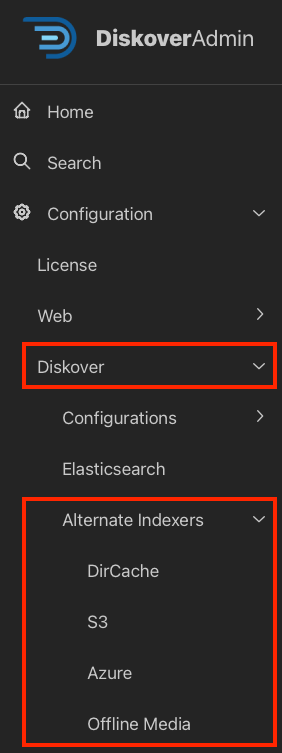

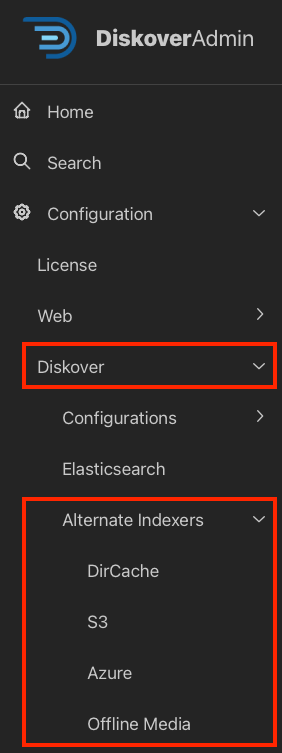

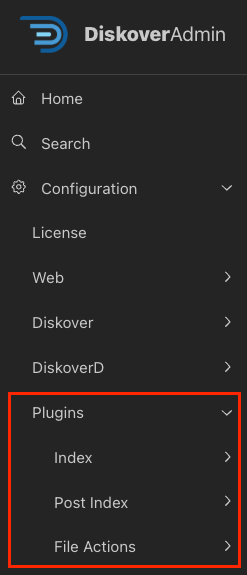

🔴 Go to Configuration → Diskover → Configurations → Default.

- You can change the name from Default to a name of your choosing.

- Hit Save and refresh the page.

- You should now see the new alternate configuration that you’ve created. You can see alternate configs in the example below that were created using Custom and ImageInfo.

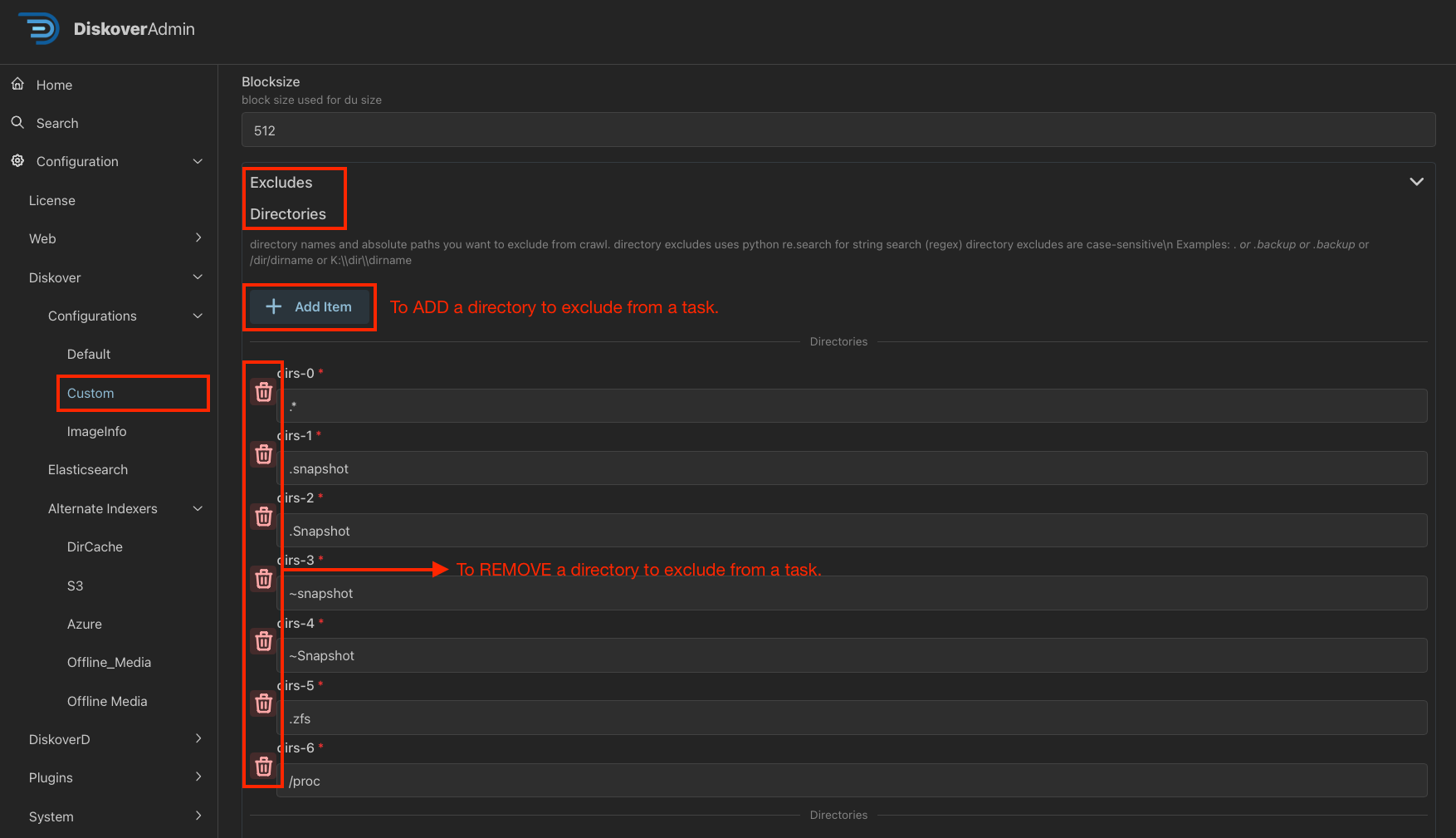

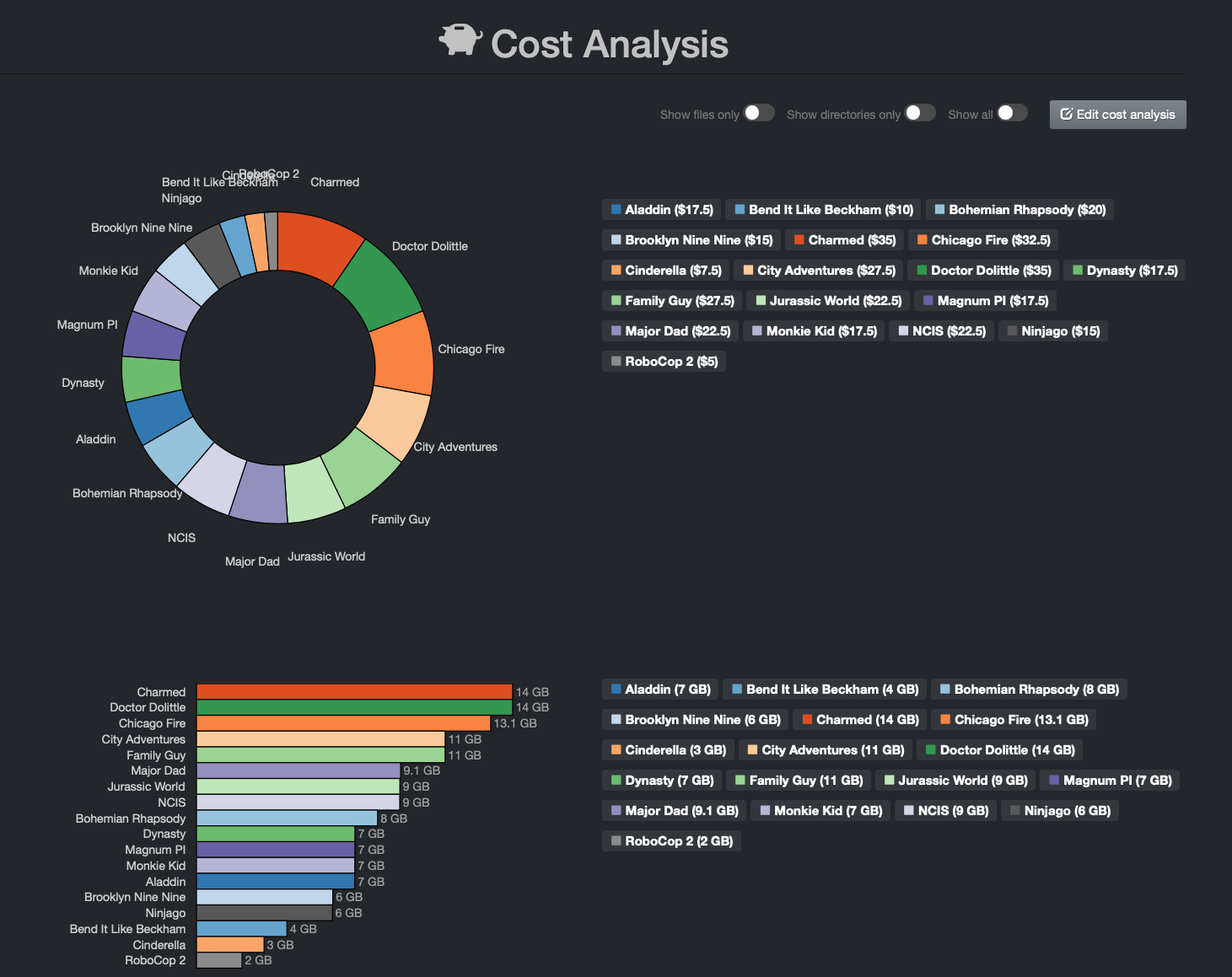

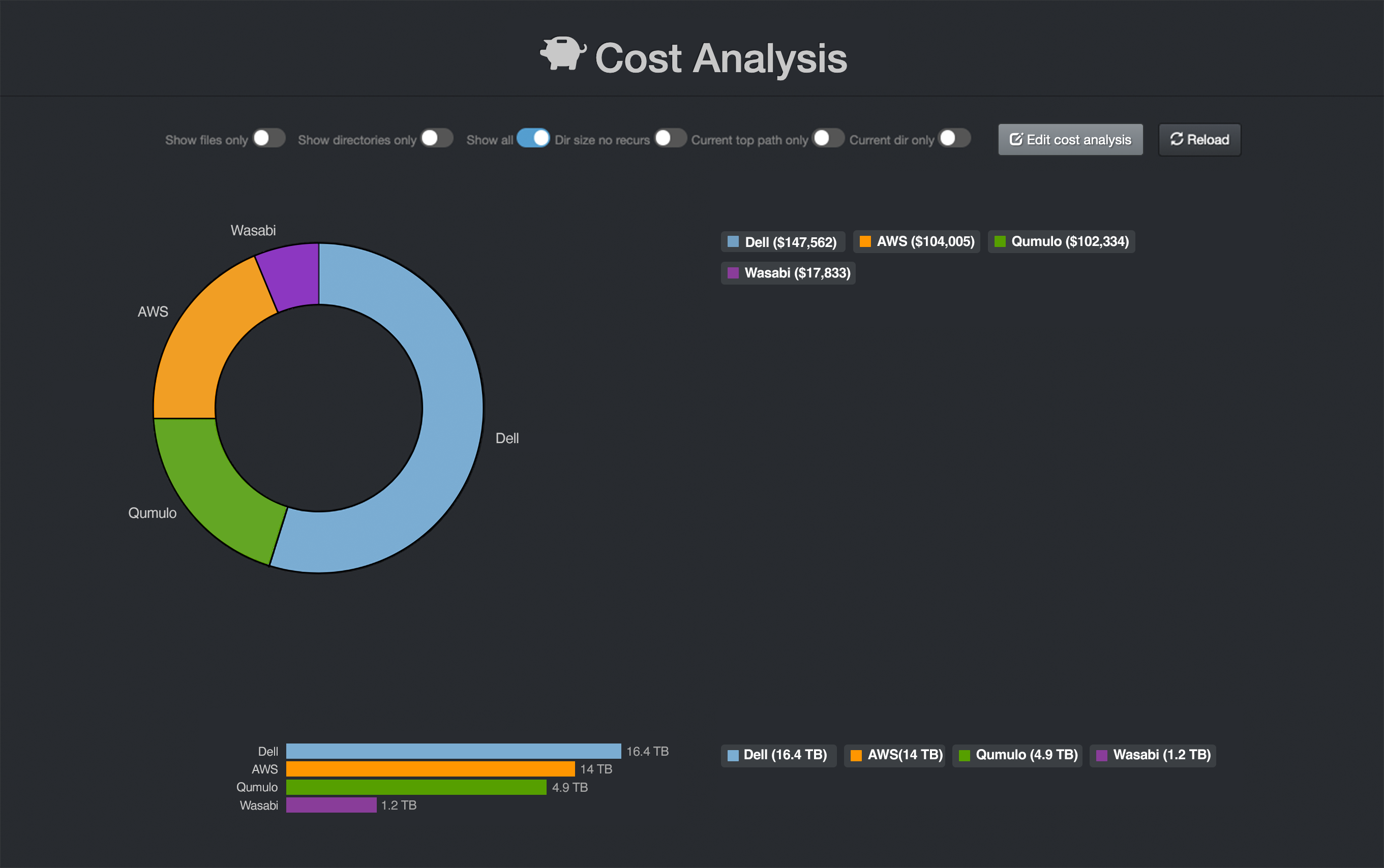

🔴 Click into the alternate configuration - we used the Custom alternate config for this example. Follow the help text on the config page to customize several parameters, such as:

- Include/exclude particular directories upon scanning - see example below

- Rules based on times

- Set up AutoTags

- Set up storage costs

- And more

- Don't forget to Save once done!

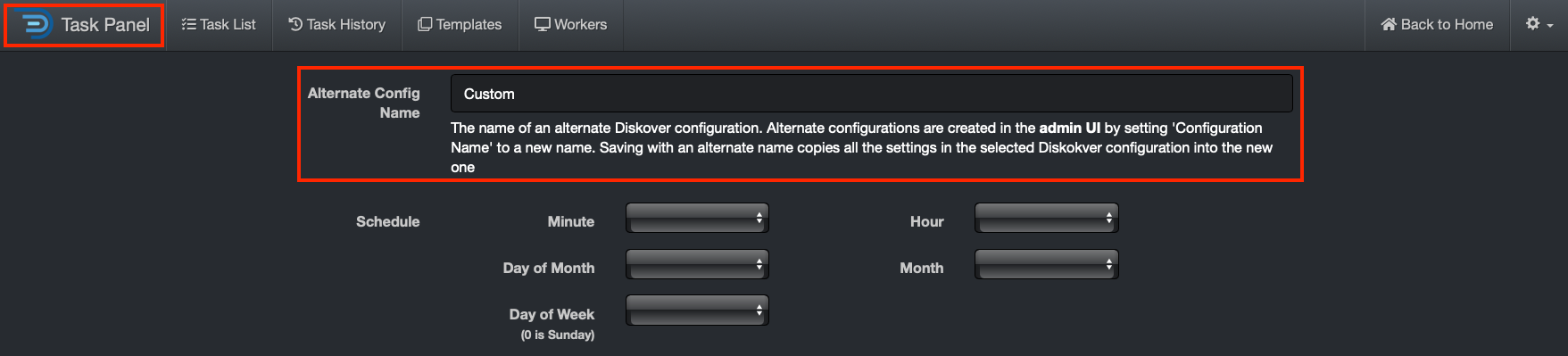

🔴 Now navigate to the Task Panel. When creating or modifying an existing task, scroll down to Alternate Config Name and use the name exactly as you created it on the DiskoverAdmin page - we used the Custom alternate config again for this example.

Alternate Config Testing

🔴 You should now be able to run that task, and we’re using the alternate configuration name Custom in the /var/log/diskover/diskoverd_subproc logs:

2024-11-11 13:55:24,804 - diskoverd_subproc - INFO - [do_work_thread_3] run command: python3 /opt/diskover/diskover.py --task "root" -f -i diskover-root-202411112055 --configurationname Diskover.Configurations.Custom --altscanner scandir_dircache /

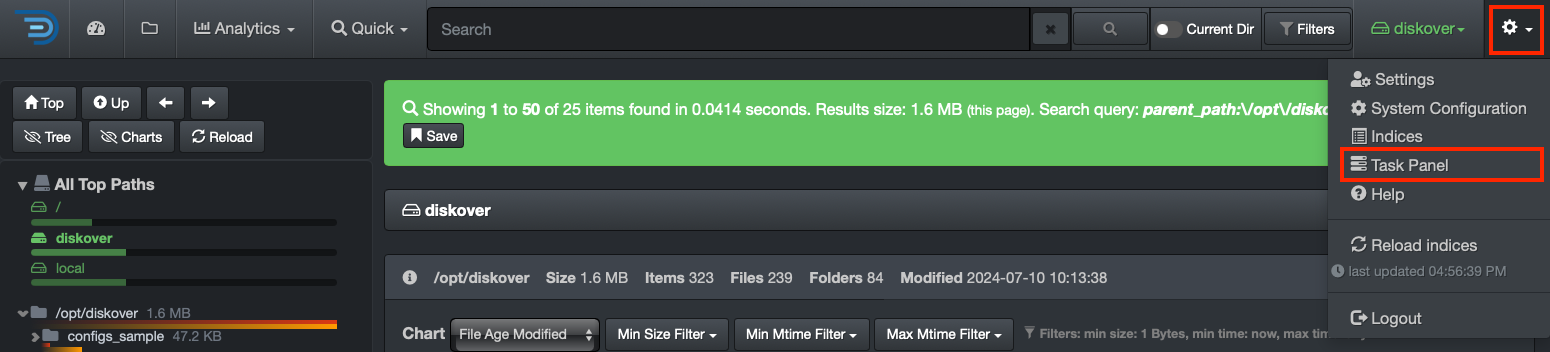

Tasks Management via Task Panel

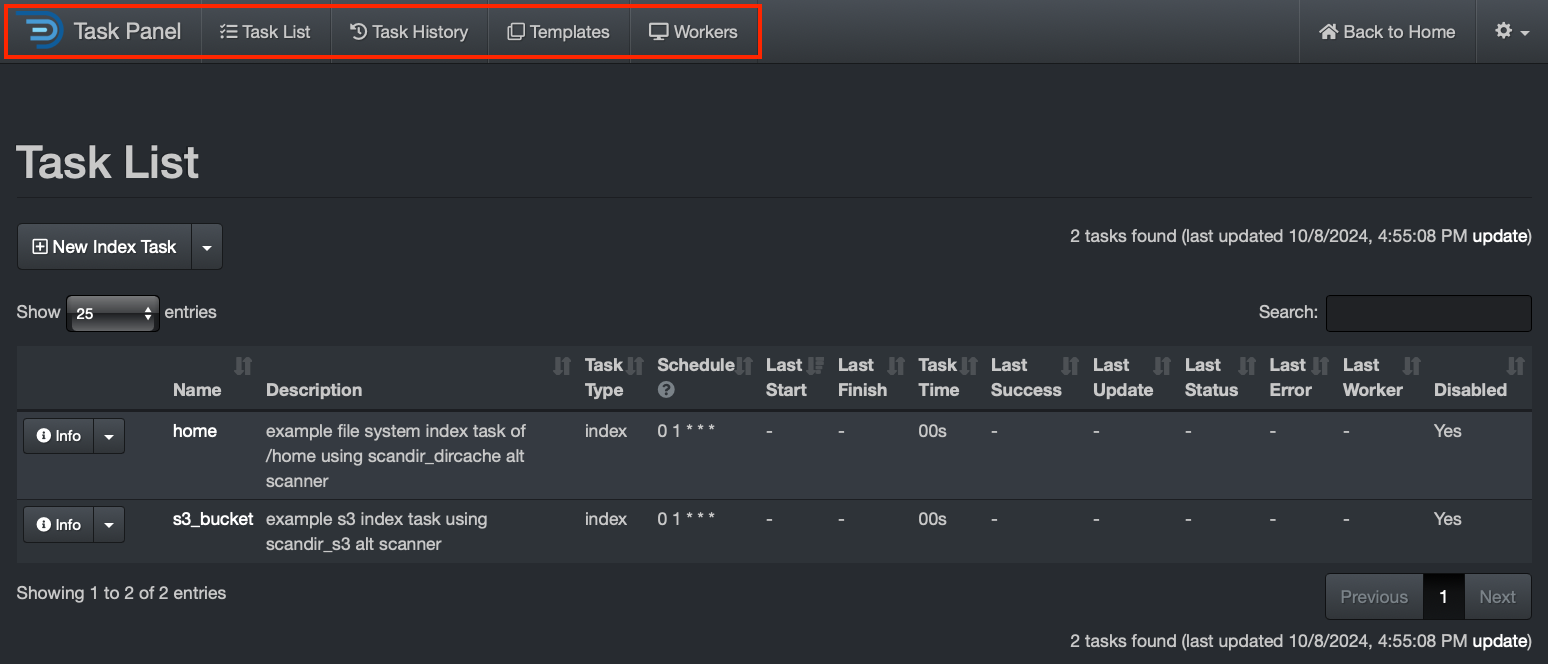

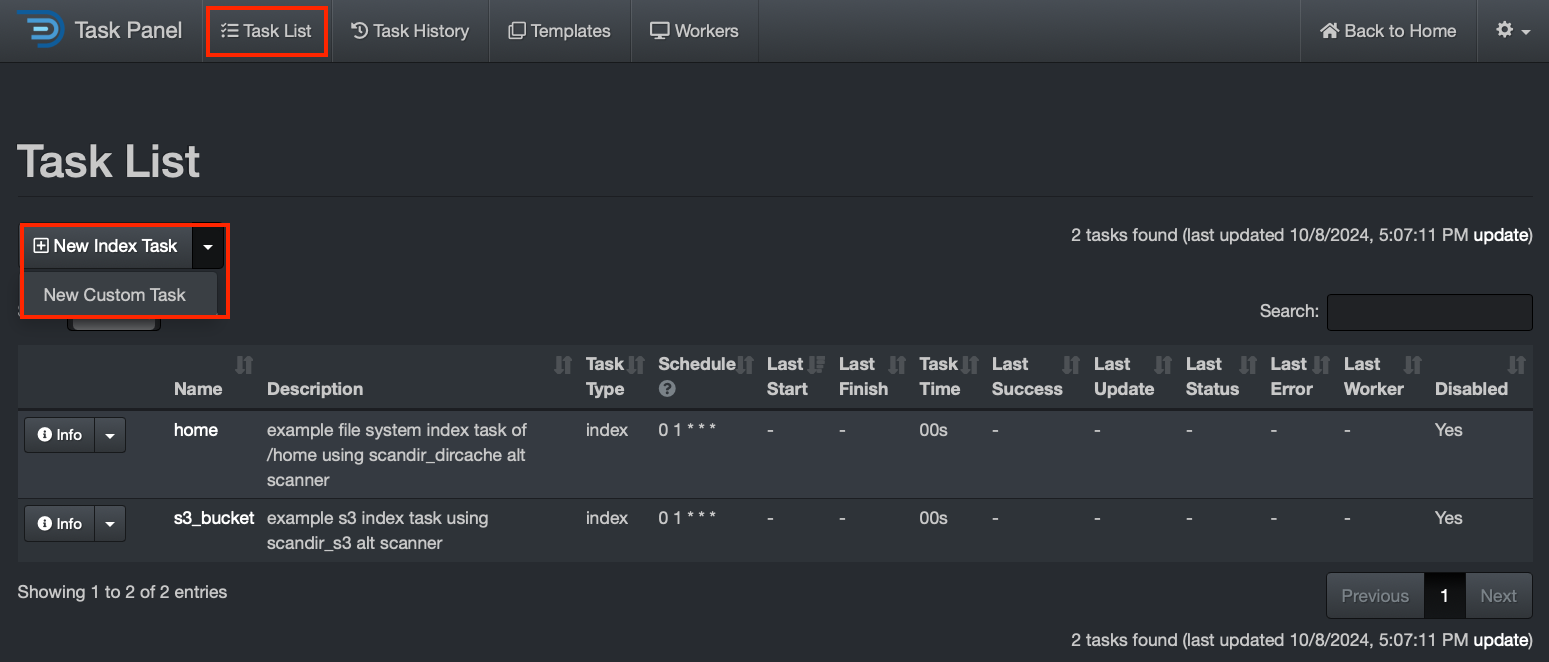

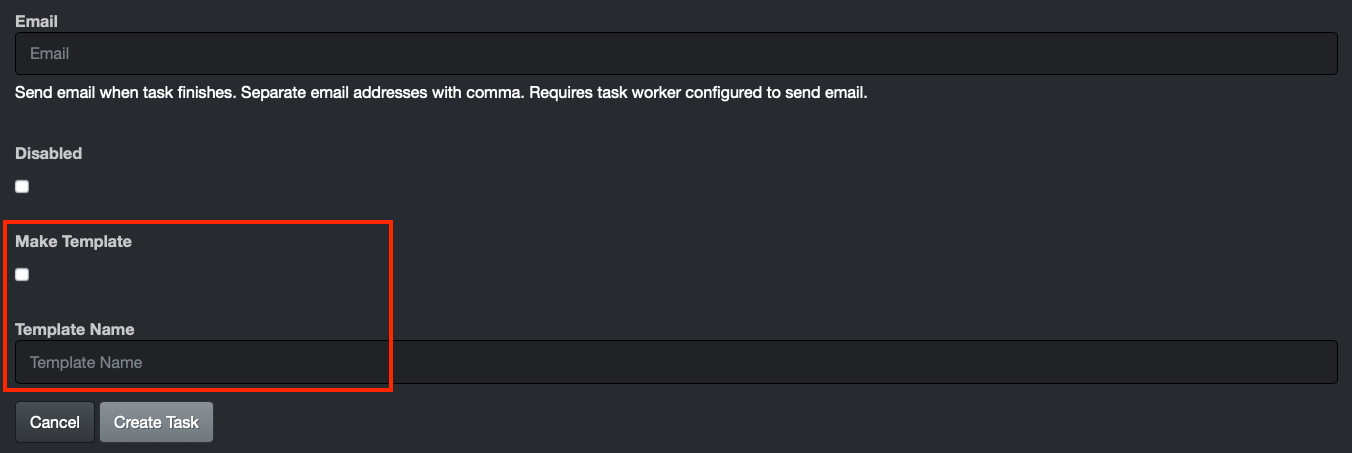

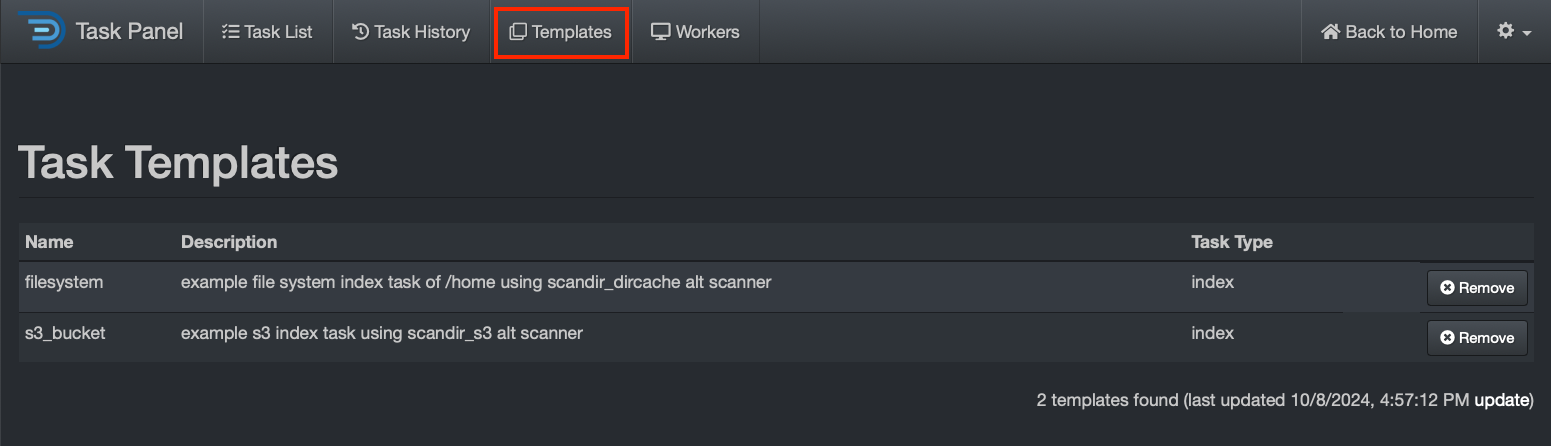

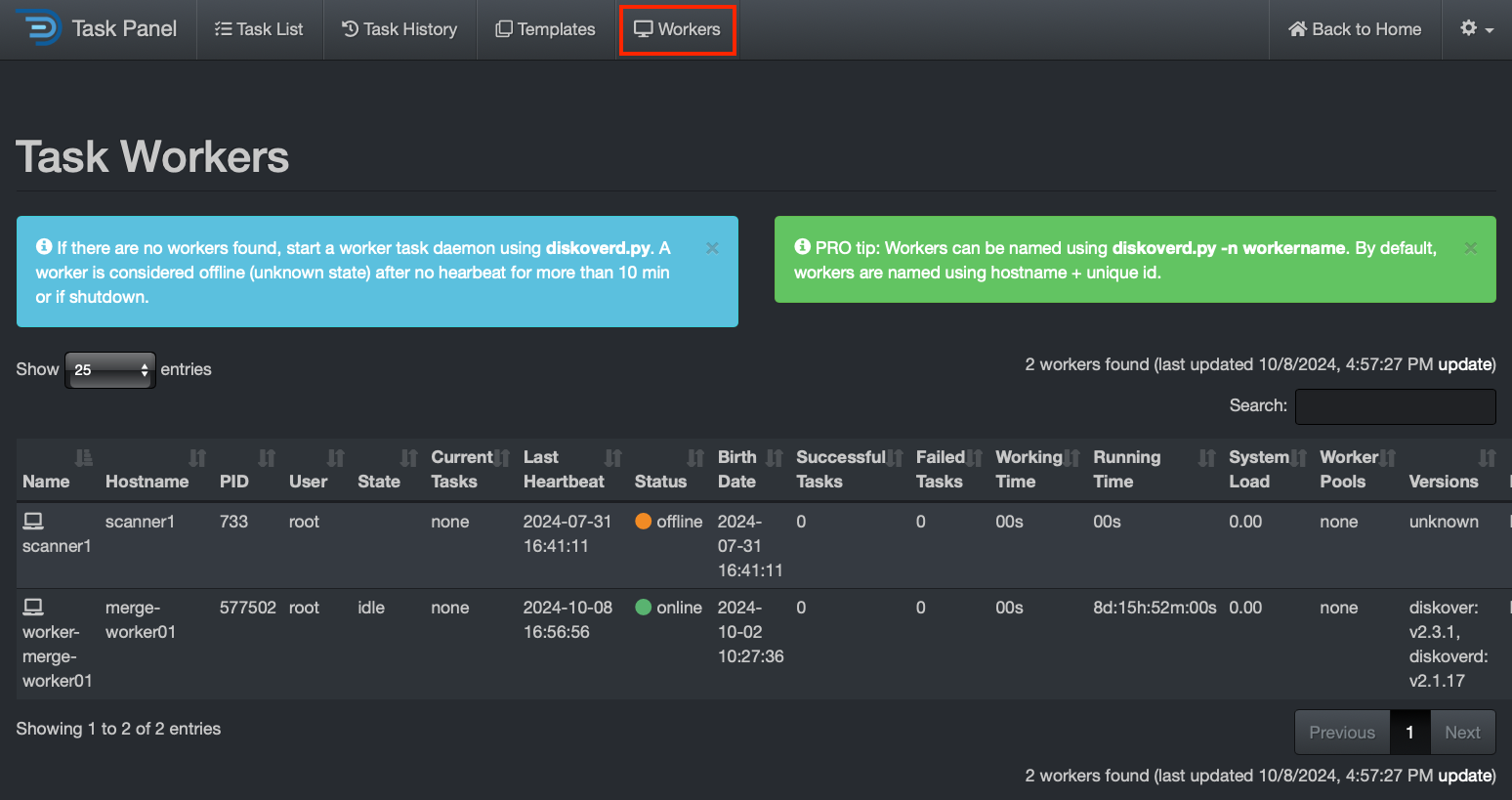

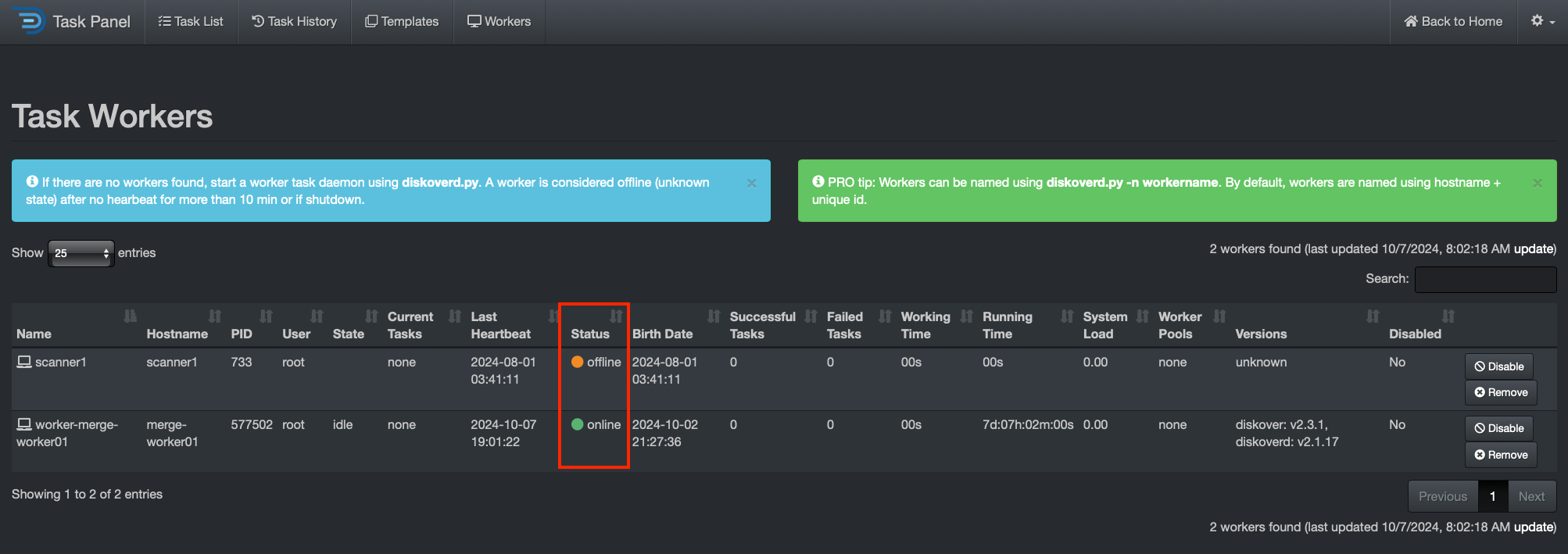

Task Panel Overview

Need Help?

Diskover offers professional services to assist with setting up tasks, dataflows, and workflows - contact us for details.

Use Cases

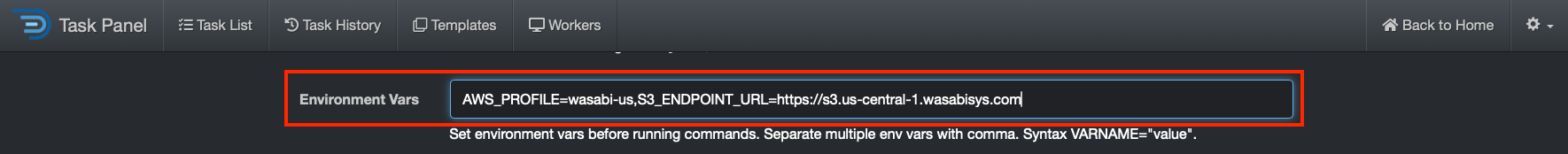

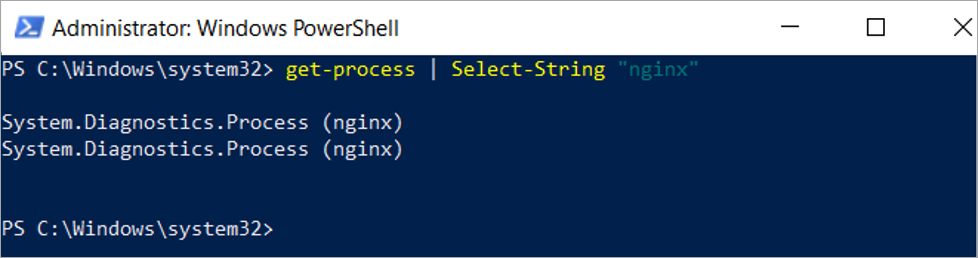

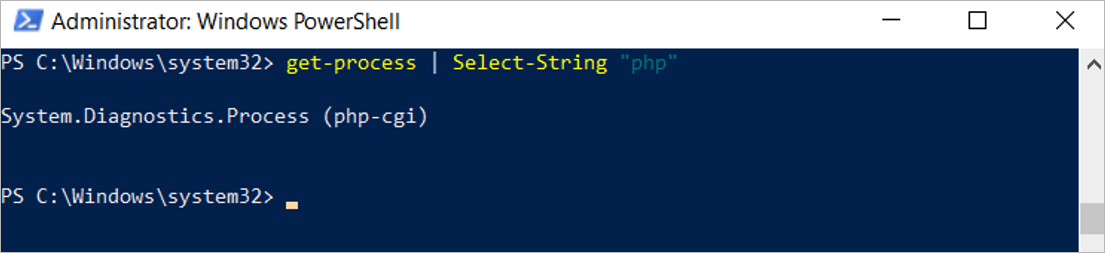

The Task Panel can be used to schedule scanning tasks or run any custom task, such as data curation via the AutoClean plugin, copying files, running duplicate file findings, checking permissions on directories, etc.